High Visibility Problems with NYT, data.gov Show Need for Better Practices

When I say, “shot”, what do you think of? A flu shot? A shot of whisky? A moon shot? A gunshot? What if I add the term “bank”? Do you now think of someone being shot in an armed robbery of a local bank or similar?

And, now, what if I add a reference to say, The Hustler, or Minnesota Fats, or “Fast Eddie” Felson? Do you now see the connection to a pressure-packed banked pool shot in some smoky bar room?

As humans we need context to make connections and remove ambiguity. For machines, with their limited reasoning and inference engines, context and accurate connections are even more important.

Over the past few weeks we have seen announcements of two large and high-visibility linked data projects: One, a first release of references for articles concerning about 5,000 people from the New York Times at data.nytimes.com; and Two, a massive exposure of 5 billion triples from data.gov datasets provided by the Tetherless World Constellation (TWC) at Rensselaer Polytechnic Institute (RPI).

On various grounds from licensing to data characterization and to creating linked data for its own sake, some prominent commentators have weighed in on what is good and what is not so good with these datasets. One of us, Mike, commented about a week ago that “we have now moved beyond ‘proof of concept’ to the need for actual useful data of trustworthy provenance and proper mapping and characterization. Recent efforts are a disappointment that no enterprise would or could rely upon.”

Reactions to that posting and continued discussion on various mailing lists warrant a more precise dissection of what is wrong and still needs to be done with these datasets [1].

Berners-Lee’s Four Linked Data “Rules”

It is useful, then, to return to first principles, namely the original four “rules” posed by Tim Berners-Lee in his design note on linked data [2]:

- Use URIs as names for things

- Use HTTP URIs so that people can look up those names

- When someone looks up a URI, provide useful information, using the standards (RDF, SPARQL)

- Include links to other URIs so that they can discover more things.
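As a rough illustration of rules 2 and 3, the Python sketch below checks that a name is an HTTP URI and builds (without sending) a request that asks the server for RDF through content negotiation. The `Accept` values are standard RDF media types, but whether a particular server such as data.nytimes.com honors them is an assumption, and the helper names are hypothetical.

```python
from urllib.parse import urlparse
from urllib.request import Request

# Standard RDF media types; q=0.9 marks Turtle as slightly less preferred.
RDF_ACCEPT = "application/rdf+xml, text/turtle;q=0.9"


def is_http_uri(name: str) -> bool:
    """Rules 1 and 2: the name is a URI that uses the http(s) scheme."""
    parsed = urlparse(name)
    return parsed.scheme in ("http", "https") and bool(parsed.netloc)


def lookup_request(uri: str) -> Request:
    """Rule 3: prepare a lookup that asks for RDF rather than HTML.

    The request is only built here; pass it to urllib.request.urlopen()
    to actually dereference the URI.
    """
    if not is_http_uri(uri):
        raise ValueError(f"not an HTTP URI: {uri}")
    return Request(uri, headers={"Accept": RDF_ACCEPT})


req = lookup_request("http://data.nytimes.com/newman_paul_per")
print(req.full_url, req.get_header("Accept"))
```

A prefixed name such as `nyt:first_use` fails `is_http_uri` as written: it only becomes something one can look up once the prefix is expanded to a full HTTP URI and, as rule 3 requires, useful information is actually served there.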

The first two rules are definitional to the idea of linked data. They cement the basis of linked data in the Web, and are not at issue with either of the two linked data projects that are the subject of this posting.

However, it is the lack of specifics and guidance in the last two rules where the breakdowns occur. Both the NYT and the RPI datasets suffer from a lack of “providing useful information” (Rule #3). And, the nature of the links in Rule #4 is a real problem for the NYT dataset.

What Constitutes “Useful Information”?

The Wikipedia entry on linked data expands on “useful information” by augmenting the original rule with the parenthetical clause “(i.e., a structured description — metadata)”. But even that expansion is insufficient.

Fundamentally, what are we talking about with linked data? Well, we are talking about instances that are characterized by one or more attributes. Those instances exist within contexts of various natures. And, those contexts may relate to other existing contexts.

We can break this problem description down into three parts:

- A vocabulary that defines the nature of the instances and their descriptive attributes

- A schema of some nature that describes the structural relationships amongst instances and their characteristics, and, optimally,

- A mapping to existing external schema or constructs that help place the data into context.

At minimum, ANY dataset exposed as linked data needs to be described by a vocabulary. Both the NYT and RPI datasets fail on this score, as we elaborate below. Better practice is to also provide a schema of relationships in which to embed each instance record. And, best practice is to also map those structures to external schema.
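The three levels above (minimum, better, best) can be read as a simple checklist. The sketch below is our own illustration, not an established tool: the `DatasetDescription` fields and the level names are hypothetical, and the example property names only echo the attribute labels of data.gov dataset #319 discussed below.

```python
from dataclasses import dataclass, field


@dataclass
class DatasetDescription:
    """What a publisher has released alongside its instance data (hypothetical model)."""
    properties_used: set[str]
    # property -> human-readable definition (the vocabulary)
    vocabulary: dict[str, str] = field(default_factory=dict)
    # (subject class, relation, object class) statements (the schema)
    schema: set[tuple[str, str, str]] = field(default_factory=set)
    # (local term, external term) pairs (the mappings)
    external_mappings: set[tuple[str, str]] = field(default_factory=set)


def practice_level(ds: DatasetDescription) -> str:
    """Grade a dataset against the minimum / better / best levels."""
    undefined = ds.properties_used - set(ds.vocabulary)
    if undefined:
        return "below minimum: undefined " + ", ".join(sorted(undefined))
    if not ds.schema:
        return "minimum: vocabulary only"
    if not ds.external_mappings:
        return "better: vocabulary and schema"
    return "best: vocabulary, schema and external mappings"


labels_only = DatasetDescription(properties_used={"item_code", "periodicity_code", "seasonal"})
print(practice_level(labels_only))
# below minimum: undefined item_code, periodicity_code, seasonal
```

The ordering of the checks mirrors the argument: a schema or external mapping earns nothing while the vocabulary itself is missing.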

Lacking this “useful information”, especially a defining vocabulary, we cannot begin to understand whether our instances deal with drinks, bank robberies or pool shots. This lack, in essence, makes the information worthless, even though available via URL.

The data.gov (RPI) Case

With the support of NSF and various grant funding, RPI has set up the Data-Gov Wiki [3], which is in the process of converting the datasets on data.gov to RDF, placing them into a semantic wiki to enable comment and annotation, and providing that data as RSS feeds. Other demos are also being placed on the site.

As of the date of this posting, the site had a catalog of 116 datasets from the 800 or so available on data.gov, leading to these statistics:

- 459,412,419 table entries

- 5,074,932,510 triples, and

- 7,564 properties (or attributes).

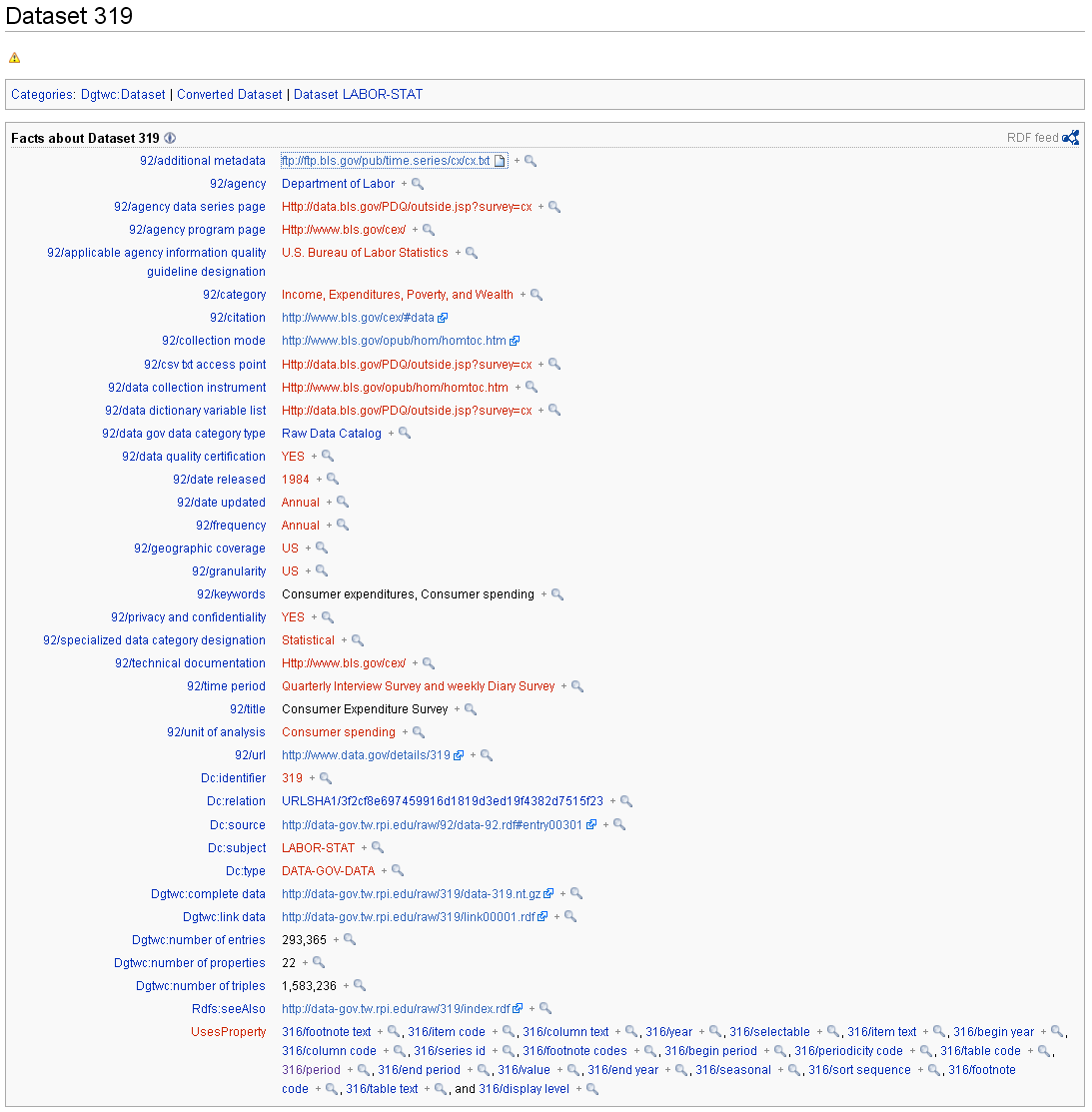

We’ll take one of these datasets, #319, and look a bit closer at it:

| Wiki | Title | Agency | Name | data.gov Link | No. of Properties | No. of Triples | RDF File |
|---|---|---|---|---|---|---|---|
| Dataset 319 | Consumer Expenditure Survey | Department of Labor | LABOR-STAT | http://www.data.gov/details/319 | 22 | 1,583,236 | http://data-gov.tw.rpi.edu/raw/319/index.rdf |

This report was picked solely because it had a small number of attributes (properties), and is thus easier to screen capture. The summary report on the wiki is shown by this page:

So, we see that this specific dataset contains 22 of the nearly 8,000 attributes across all datasets.

When we click on one of these attribute names, we are then taken to a specific wiki page that only reiterates its label. There is no definition or explanation.

When we inspect this page further we see that, other than the broad characterization of the dataset itself (the bulk of the page), there are 22 undefined attributes at the bottom, with labels such as item code, periodicity code, seasonal, and the like. These attributes are the real structural basis for the data in this dataset.

But, what does all of this mean???

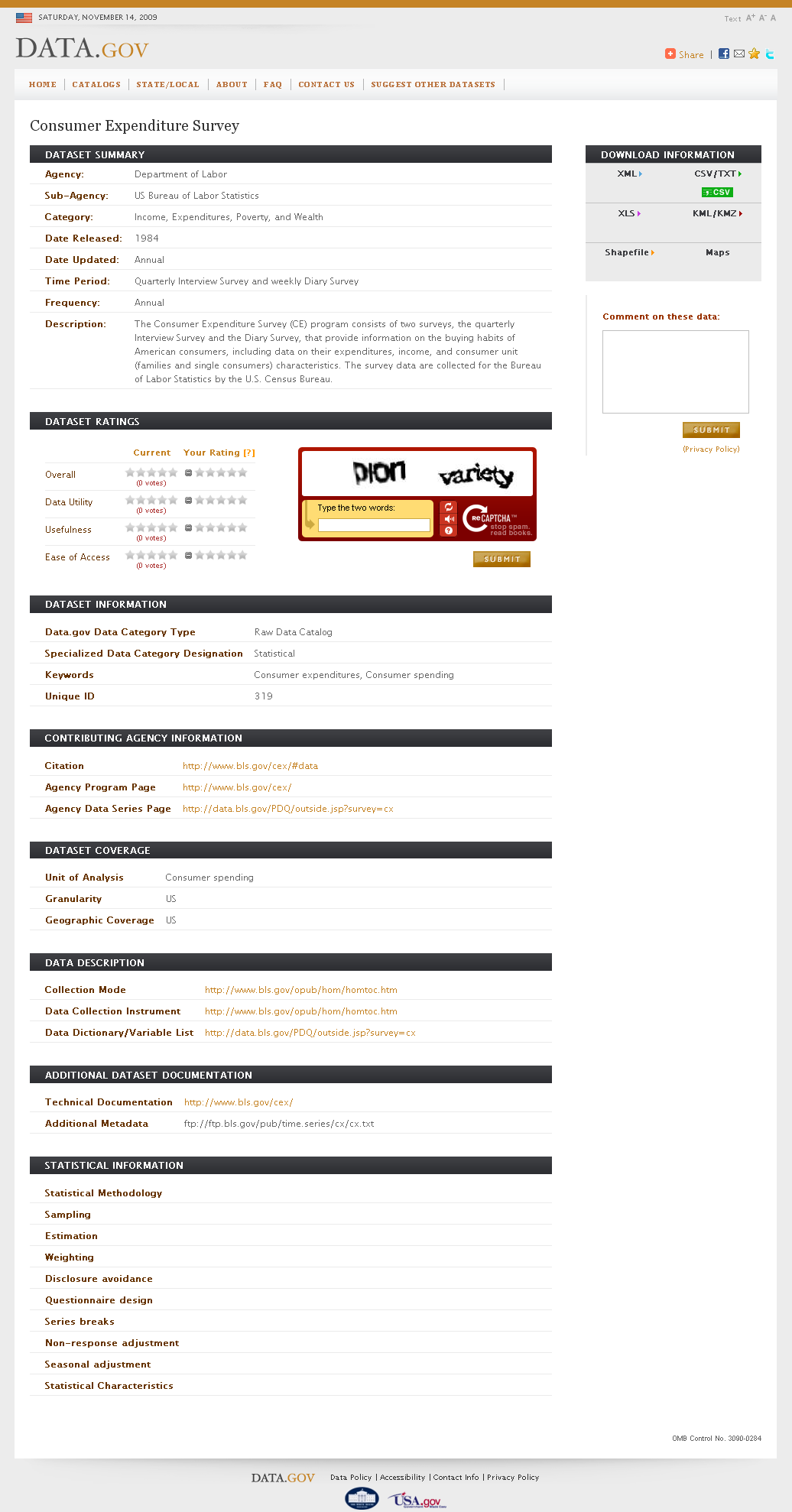

To gain a clue, now let’s go to the source data.gov site for this dataset (#319). Here is how that report looks:

Contained within this report we see a listing for additional metadata. This link tells us about the various data fields contained in this dataset; we see many of these attributes are “codes” to various data categories.

Probing further into the dataset’s technical documentation, we see that there is indeed a rich structure underneath this report, again provided via various code lookups. There are codes for geography, seasonality (adjusted or not), consumer demographic profiles and a variety of consumption categories. (See, for example, the link to this glossary page.) These are the keys to understanding the actual values within this dataset.

For example, one major dimension of the data is captured by the attribute item_code. The survey breaks down consumption expenditures within the broad categories of Food, Housing, Apparel and Services, Transportation, Health Care, Entertainment, and Other. Within a category, there is also a rich structural breakdown. For example, expenditures for Bakery Products within Food are given a code of FHC2.

But, nowhere are these codes defined or unlocked in the RDF datasets. This absence is true for virtually all of the datasets exposed on this wiki.
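To make the missing piece concrete, here is a minimal Python sketch of what publishing the item codes as a vocabulary could look like. Only the FHC2 entry (Bakery Products within Food) comes from the survey documentation described above; the `example.org` namespace and the helper names are hypothetical, and triples are kept as plain string tuples, with URIs and literals alike as strings, for brevity.

```python
# Hypothetical namespace: neither data.gov nor the Data-Gov Wiki publishes one.
CODE_NS = "http://example.org/cex/item_code/"

# A single documented entry; a real vocabulary would cover every code.
ITEM_CODES = {
    "FHC2": {"label": "Bakery Products", "category": "Food"},
}


def code_to_triples(code: str) -> list[tuple[str, str, str]]:
    """Describe one item code as a SKOS concept placed under its category."""
    entry = ITEM_CODES[code]
    subject = CODE_NS + code
    return [
        (subject, "rdf:type", "skos:Concept"),
        (subject, "skos:notation", code),
        (subject, "skos:prefLabel", entry["label"]),
        (subject, "skos:broader", CODE_NS + "category/" + entry["category"]),
    ]


def decode(code: str) -> str:
    """Turn an opaque code found in the data into something a reader can use."""
    entry = ITEM_CODES.get(code)
    if entry is None:
        return f"{code} (undefined: no vocabulary entry)"
    return f"{entry['category']} > {entry['label']}"


print(decode("FHC2"))  # Food > Bakery Products
```

Without such a table published alongside the data, a value like FHC2 in the RDF is exactly as opaque as it was in the original text file.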

So, for literally billions of triples, and 8,000 attributes, we have ABSOLUTELY NO INFORMATION ABOUT WHAT THE DATA CONTAINS OTHER THAN A PROPERTY LABEL. There is much, much rich value here in data.gov, but all of it remains locked up and hidden.

The sad truth about this data release is that it provides absolutely no value in its current form. We lack the keys to unlock the value.

To be sure, early essential spade work has been done here to begin putting in place the conversion infrastructure for moving text files, spreadsheets and the like to an RDF form. This is yeoman work important to ultimate access. But, until a vocabulary is published that defines the attributes and their codes so we can unlock this value, it will remain hidden. And until the further value of connecting attributes and relations across datasets is also exposed through a schema of some nature, the real value from connecting the dots will remain hidden as well.

These datasets may meet the partial conditions of providing clickable URLs, but the crucial “useful information” as to what any of this data means is absent.

Every single dataset on data.gov has supporting references to text files, PDFs, Web pages or the like that describe the nature of the data within each dataset. Until that information is exposed and made usable, we have no linked data.

Until ontologies get created from these technical documents, the value of these data instances remains locked up, and no value can be created from having these datasets expressed in RDF.

The devil lies in the details. The essential hard work has not yet begun.

The NYT Case

Though at a much smaller scale with many fewer attributes, the NYT dataset suffers from the same failing: it too lacks a vocabulary.

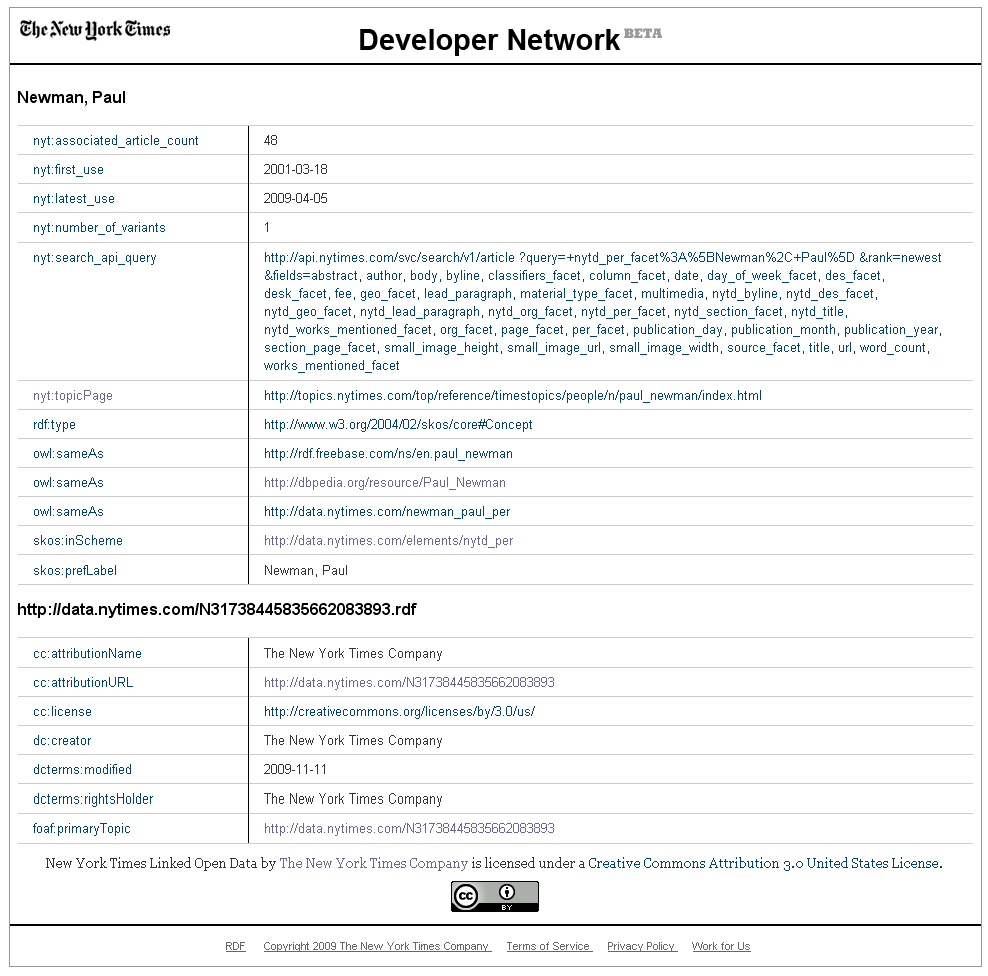

So, let’s take the case of one of the lead actors in The Hustler, Paul Newman, who played the role of “Fast Eddie” Felson. Here is the NYT record for the “person” Paul Newman (which they also refer to as http://data.nytimes.com/newman_paul_per). Note the header title of Newman, Paul:

Click on any of the internal labels used by the NYT for its own attributes (such as nyt:first_use), and you will be given this message:

“An RDFS description and English language documentation for the NYT namespace will be provided soon. Thanks for your patience.”

We again have no idea what is meant by all of this data except for the labels used for its attributes. In this case for nyt:first_use we have a value of “2001-03-18”.

Hello? What? What is a “first use” for a “Paul Newman” of “2001-03-18”???

The NYT put the cart before the horse: even if minimal, they should have released their ontology first — or at least at the same time — as they released their data instances. (See further this discussion about how an ontology creation workflow can be incremental by starting simple and then upgrading as needed.)
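Even a first, minimal vocabulary would have answered the “first use” question. The sketch below emits such a starting point as Turtle. The namespace URI is hypothetical, and the definitions are our own guesses at NYT’s intent, based on the deductions in the next section; only the NYT publishers can supply the real ones.

```python
# Hypothetical namespace URI standing in for the real nyt: prefix.
NYT_NS = "http://example.org/nyt-elements/"

# Assumed definitions: our reading of the data, not NYT documentation.
TERMS = {
    "first_use": ("first use", "Date of the earliest NYT article indexed under this heading (assumed)."),
    "last_use": ("last use", "Date of the latest NYT article indexed under this heading (assumed)."),
    "associated_article_count": ("associated article count", "Number of NYT articles indexed under this heading (assumed)."),
}


def minimal_vocabulary(terms: dict[str, tuple[str, str]]) -> str:
    """Serialize each term as an rdf:Property with a label and a comment (Turtle)."""
    lines = [
        "@prefix rdf: <http://www.w3.org/1999/02/22-rdf-syntax-ns#> .",
        "@prefix rdfs: <http://www.w3.org/2000/01/rdf-schema#> .",
        f"@prefix nyt: <{NYT_NS}> .",
        "",
    ]
    for name, (label, comment) in terms.items():
        lines.append(f"nyt:{name} a rdf:Property ;")
        lines.append(f'    rdfs:label "{label}" ;')
        lines.append(f'    rdfs:comment "{comment}" .')
    return "\n".join(lines)


print(minimal_vocabulary(TERMS))
```

Later releases could add domains, ranges and datatypes (for example, xsd:date for the two dates) without breaking these initial terms, which is the incremental workflow referred to above.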

Links to Other Things

Since there really are no links to other things on the Data-Gov Wiki, our focus in this section continues with the NYT dataset using our same example.

We now are in the territory of the fourth “rule” of linked data: 4. Include links to other URIs so that they can discover more things.

This will seem a bit basic at first, but before we can talk about linking to other things, we first need to understand and define the starting “thing” to which we are linking.

What is a “Newman, Paul” Thing?

Of course, without its own vocabulary, we are left to deduce what this thing “Newman, Paul” is that is shown in the previous screen shot. Our first clue comes from the statement that it is of rdf:type SKOS concept. By looking to the SKOS vocabulary, we see that concept is a class and is defined as:

A SKOS concept can be viewed as an idea or notion; a unit of thought. However, what constitutes a unit of thought is subjective, and this definition is meant to be suggestive, rather than restrictive. The notion of a SKOS concept is useful when describing the conceptual or intellectual structure of a knowledge organization system, and when referring to specific ideas or meanings established within a KOS.

We also see that this instance is given a foaf:primaryTopic of Paul Newman.

So, we can deduce so far that this instance is about the concept or idea of Paul Newman. Now, looking to the attributes of this instance — that is, the defining properties provided by the NYT — we see the properties of nyt:associated_article_count, nyt:first_use, nyt:last_use and nyt:topicPage. Completing our deductions, and in the absence of its own vocabulary, we can now define this concept instance somewhat as follows:

New York Times articles in the period 2001 to 2009 having as their primary topic the actor Paul Newman

(BTW, across all records in this dataset, we could see what the earliest first use was to better deduce the time period over which these articles have been assembled, but that has not been done.)
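The check mentioned in the aside above takes only a few lines once the records are loaded. In this hypothetical sketch each record is reduced to a dictionary of its nyt: property values; the only value taken from the dataset is the Paul Newman first use of 2001-03-18.

```python
from datetime import date


def earliest_first_use(records: dict[str, dict[str, str]]) -> tuple[str, date]:
    """Return the record whose nyt:first_use is earliest, with that date.

    Records lacking a first_use value are skipped.
    """
    dated = [
        (date.fromisoformat(props["first_use"]), record_id)
        for record_id, props in records.items()
        if "first_use" in props
    ]
    if not dated:
        raise ValueError("no record has a first_use value")
    first_date, record_id = min(dated)
    return record_id, first_date


records = {"newman_paul_per": {"first_use": "2001-03-18"}}
print(earliest_first_use(records))  # ('newman_paul_per', datetime.date(2001, 3, 18))
```

Run over all 5,000 or so records, the same function would bound the start of the period the dataset covers; the symmetric check on nyt:last_use would bound its end.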

We also would re-title this instance more akin to “2001-2009 NYT Articles with a Primary Topic of Paul Newman” or some such and use URIs more akin to this usage.

sameAs Woes

Thus, in order to make links or connections with other data, it is essential to understand the nature of the subject “thing” at hand. There is much confusion within the literature and on mailing lists about actual “things”, the references to “things”, and what the nature of a “thing” is.

Our belief and usage in matters of the semantic Web is that all “things” we deal with are a reference to whatever the “true”, actual thing is. The question then becomes: What is the nature (or scope) of this referent?

There are actually quite easy ways to determine this nature. First, look to one or more instance examples of the “thing” being referred to. In our case above, we have the “Newman, Paul” instance record. Then, look to the properties (or attributes) the publisher of that record has used to describe that thing. Again, in the case above, we have nyt:associated_article_count, nyt:first_use, nyt:last_use and nyt:topicPage.

Clearly, this instance record — that is, its nature — deals with articles or groups of articles. The relation to Paul Newman arises because he is the primary topic of these articles, not because the instance describes the person himself. If the nature of the instance were indeed the person Paul Newman, then the attributes of the record would more properly be “person” properties such as age, sex, birthdate, death date, marital status, etc.
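The “look at the properties” test can be sketched as a crude overlap score. The profiles below are illustrative assumptions: the person properties mirror the examples just given, and the article-set properties are the four NYT attributes. A real check would rely on the classes and domains declared in published vocabularies.

```python
# Illustrative profiles, not taken from any published vocabulary.
PROFILES = {
    "person": {"age", "sex", "birthdate", "death_date", "marital_status"},
    "set of articles": {"associated_article_count", "first_use", "last_use", "topicPage"},
}


def likely_nature(properties: set[str]) -> str:
    """Return the profile sharing the most properties with a record, or 'unknown'."""
    scores = {name: len(properties & profile) for name, profile in PROFILES.items()}
    best = max(scores, key=lambda name: scores[name])
    return best if scores[best] > 0 else "unknown"


newman_record = {"associated_article_count", "first_use", "last_use", "topicPage"}
print(likely_nature(newman_record))  # set of articles
```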

This confusion by NYT as to the nature of the “things” they are describing then leads to some very serious errors. By confusing the topic (Paul Newman) of a record with the nature of that record (articles about topics), NYT next misuses one of the most powerful semantic Web predicates available, owl:sameAs.

By asserting in the “Newman, Paul” record that the instance has a sameAs relationship with external records in Freebase and DBpedia, the NYT assertion both entails that the properties of any of the associated records are shared and implies a chain of other types to describe the record. More precisely, the NYT is asserting that the “things” referred to by these instances are identical resources.

Thus, by the sameAs statements in the “Newman, Paul” record, the NYT is also asserting that that record is an instance of all these classes:

Furthermore, because of its strong, reciprocal entailments, the owl:sameAs assertion would also now entail that the person Paul Newman has the nyt:first_use and nyt:last_use attributes, clearly illogical for a “person” thing.
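The entailment problem is easy to reproduce. The sketch below implements only the part of owl:sameAs semantics that matters here (a statement about one member of a sameAs group holds for every member, applied to subjects only) over plain string triples. The NYT and DBpedia URIs are the real resource names; the four triples are a simplified stand-in for the published records.

```python
NYT = "http://data.nytimes.com/newman_paul_per"
DBPEDIA = "http://dbpedia.org/resource/Paul_Newman"


def same_as_closure(triples: set[tuple[str, str, str]]) -> set[tuple[str, str, str]]:
    """Copy every statement onto all resources declared owl:sameAs its subject.

    A simplification: full OWL semantics also substitutes in object position.
    """
    parent: dict[str, str] = {}

    def find(node: str) -> str:
        # Union-find root lookup with path halving.
        parent.setdefault(node, node)
        while parent[node] != node:
            parent[node] = parent[parent[node]]
            node = parent[node]
        return node

    for s, p, o in triples:
        if p == "owl:sameAs":
            parent[find(s)] = find(o)

    groups: dict[str, set[str]] = {}
    for node in list(parent):
        groups.setdefault(find(node), set()).add(node)

    entailed = set()
    for s, p, o in triples:
        if p != "owl:sameAs":
            for alias in groups.get(find(s), {s}):
                entailed.add((alias, p, o))
    return entailed


data = {
    (NYT, "rdf:type", "skos:Concept"),
    (NYT, "nyt:first_use", "2001-03-18"),
    (NYT, "owl:sameAs", DBPEDIA),
    (DBPEDIA, "rdf:type", "foaf:Person"),
}
closure = same_as_closure(data)
print((DBPEDIA, "nyt:first_use", "2001-03-18") in closure)  # True: the person now has a "first use"
print((NYT, "rdf:type", "foaf:Person") in closure)  # True: the concept is now a person
```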

This connection is clearly wrong in both directions. Articles are not persons and don’t have marital status; and persons do not have first_uses. By misapplying this sameAs linkage relationship, we have screwed things up in every which way. And the error began with misunderstanding what kinds of “things” our data is about.

Some Options

However, there are solutions. First, the sameAs assertions, at least involving these external resources, should be dropped.

Second, if linkages are still desired, a vocabulary such as UMBEL [4] could be used to make an assertion between such a concept and these other related resources. So, even though these resources are not the same, they are closely related. The UMBEL ontology helps us to define this kind of relation between related, but non-identical, resources.

Instead of using the owl:sameAs property, we would suggest the usage of umbel:linksEntity, which links a skos:Concept to related named-entity resources. Additionally, Freebase, which also currently asserts a sameAs relationship to the NYT resource, could use the umbel:isAbout relationship to assert that their resource “is about” a certain concept, which is the one defined by the NYT.
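For contrast with owl:sameAs, here is a small set of Paul Newman triples that uses the UMBEL properties suggested above instead. Because these properties carry no identity semantics, nothing is copied between resources, yet a consumer can still follow the links in either direction. The Freebase URI is a hypothetical placeholder, and the neighbour lookup is our own illustration, not part of UMBEL.

```python
NYT = "http://data.nytimes.com/newman_paul_per"
DBPEDIA = "http://dbpedia.org/resource/Paul_Newman"
FREEBASE = "http://example.org/freebase/paul_newman"  # hypothetical placeholder URI

LINK_PREDICATES = {"umbel:linksEntity", "umbel:isAbout"}

data = {
    (NYT, "rdf:type", "skos:Concept"),
    (NYT, "nyt:first_use", "2001-03-18"),
    (NYT, "umbel:linksEntity", DBPEDIA),  # the concept links to a related named entity
    (FREEBASE, "umbel:isAbout", NYT),  # the Freebase resource is about the NYT concept
    (DBPEDIA, "rdf:type", "foaf:Person"),
}


def neighbours(triples: set[tuple[str, str, str]], resource: str) -> set[str]:
    """Resources connected to `resource` by a linking property, in either direction."""
    outgoing = {o for s, p, o in triples if s == resource and p in LINK_PREDICATES}
    incoming = {s for s, p, o in triples if o == resource and p in LINK_PREDICATES}
    return outgoing | incoming


def properties_of(triples: set[tuple[str, str, str]], resource: str) -> set[tuple[str, str]]:
    """The statements made directly about `resource`, with no entailment applied."""
    return {(p, o) for s, p, o in triples if s == resource}


print(sorted(neighbours(data, NYT)))
# The person keeps only its own description: no nyt:first_use leaks across.
print(properties_of(data, DBPEDIA))  # {('rdf:type', 'foaf:Person')}
```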

Alternatively, still other external vocabularies that more precisely capture the intent of the NYT publishers could be found, or the NYT editors could define their own properties specifically addressing their unique linkage interests.

Other Minor Issues

We also have a couple of additional, minor suggestions for the NYT dataset:

- Create a foaf:Organization description of the NYT organization, then use it with dc:creator and dcterms:rightsHolder rather than using a literal, and

- The dual URIs such as “http://data.nytimes.com/N31738445835662083893” and “http://data.nytimes.com/newman_paul_per” are not wrong in themselves, but their purpose is hard to understand. Why does a single organization need to create multiple URIs for the identical resource, when it comes from the same system and has the same purpose?
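The first suggestion amounts to replacing a literal with a resource. A minimal sketch, with triples as plain string tuples and a hypothetical organization URI (NYT would mint and serve its own):

```python
RECORD = "http://data.nytimes.com/newman_paul_per"
NYT_ORG = "http://example.org/id/the-new-york-times"  # hypothetical URI for the organization


def organization_triples() -> list[tuple[str, str, str]]:
    """Describe the publisher once, as a resource others can link to."""
    return [
        (NYT_ORG, "rdf:type", "foaf:Organization"),
        (NYT_ORG, "foaf:name", "The New York Times"),
    ]


def attribution_triples(record: str) -> list[tuple[str, str, str]]:
    """Point creator and rights holder at that resource instead of repeating a literal."""
    return [
        (record, "dc:creator", NYT_ORG),
        (record, "dcterms:rightsHolder", NYT_ORG),
    ]


for triple in organization_triples() + attribution_triples(RECORD):
    print(triple)
```

Once a real organization URI is served, every record shares one description of the publisher, and a consumer can look that description up rather than parse a string.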

Re-visiting the Linkage “Rule”

There are very valuable benefits from entailment, inference and logic to be gained from linking resources. However, if the nature of the “things” being linked — or the properties that define these linkages — are incorrect, then very wrong logical implications result. Great care and understanding should be applied to linkage assertions.

In the End, the Challenge is Not Linked Data, but Connected Data

Our critical comments are not meant to be disrespectful and are not being picky. The NYT and TWC are prominent institutions for which we should expect leadership on these issues. Our criticisms (and we believe those of others) are also not an expression of a “trough of disillusionment” as some have been pointing out.

This posting is about poor practices, pure and simple. The time to correct them is now. If asked, we would be pleased to help either institution establish exemplar practices. This is not automatic, and it is not always easy. The data.gov datasets, in particular, will require much time and effort to get right. There is much documentation that needs to be transitioned and expressed in semantic Web formats.

In a broader sense, we also seem to lack a definition of best practices related to vocabularies, schema and mappings. The Berners-Lee rules are imprecise and insufficient as is. Prior best-practice guidance documents tend to focus more on how to publish data and make URIs linkable than on how to properly characterize, describe and connect the data.

Perhaps, in part, this is a bit of a semantics issue. The challenge is not the mechanics of linking data, but the meaning and basis for connecting that data. Connections require logic and rationality sufficient to reliably inform inference and rule-based engines. They also need to pass the sniff test as we “follow our nose” by clicking the links exposed by the data.

It is exciting to see high-quality content such as from national governments and major publishers like the New York Times begin to be exposed as linked data. When this content finally gets embedded into usable contexts, we should see manifest uses and benefits emerge. We hope both institutions take our criticisms in that spirit.

[1] … problems listed herein, however, still pertain after these improvements.

[2] … 2009-06-18. See http://www.w3.org/DesignIssues/LinkedData.html. Berners-Lee refers to the steps above as “rules,” but he elaborates that they are expectations of behavior. Most later citations refer to these as “principles.”

[3] http://www.cs.vu.nl/~pmika/swc/documents/Data-gov%20Wiki-data-gov-wiki-v1.pdf.

glenn mcdonald

November 16, 2009 — 2:43 pm

Very thoroughly explained. I’m glad you took the time to dig deep enough into a data.gov example to actually *find* the glossary. I started to do that after reading Stefano’s gripe, but ran out of time before I found anything useful!

I would emphasize even more, if it’s possible, your points about understanding what the “things” are in a data set. Here I think Tim’s rules and the emphasis on external URIs in Linked Data examples have actually been pushing in the wrong direction. My first recommendation to anybody trying to model anything would be to start with entirely internal IDs, and model the data that’s actually contained in your dataset, before you start worrying about connecting to external data or aligning your concepts with anybody else’s.

The NYT data set looks very much like it was put together the other way around, starting with equivalences and then trying to hang everything else off of them. Model it in the proper order and I think it would be obvious that the core concept is a Reference, not a Person. From this it’s much clearer that a Reference *refers* to a person, and that the NYT/Freebase relationship we’re actually after here is not that the NYT reference is the same as the Freebase reference, but that they are both about the same person.

In fact, if both NYT and Freebase used umbel:isAbout pointing to a normative URI for the real person Paul Newman, then they would be inherently linked by virtue of their data, instead of either side needing extra assertions to link them up. Moreover, *every* dataset that used umbel:isAbout and the same celebrity URIs would be inherently linked to any and every other one, without individual assertions anywhere.

This sounds backwards until you think about it: you get better linking if you concentrate on saying what you’re saying, correctly, *instead* of concentrating specifically on the linking.

Jim Hendler

November 16, 2009 — 2:55 pm

While I appreciate your long article, I’d like to mention that you have missed what we are doing at the http://data-gov.tw.rpi.edu site. We state this in various places in the wiki, but there’s a lot there, so I’m not surprised you didn’t see it – our goal is multi-step. First, we are doing a straight translation of the data to RDF. We purposely do NOT put any external links in this data, as there is a lot of danger in doing that – for example, you might think that two datasets with “expenditure” in them would be linkable, but in some, expenditures are reported as positive numbers, in some as negatives; in some the numbers are in millions, in some they are in billions. So we assign every dataset its own URIs and its own internal links.

However, our index entry for each dataset includes a property that takes it to an extended index which we are working on now. In those fields we will provide links to the same datasets with typed values, with all same-name properties linked as subproperties of main property types (i.e., we would have a property called “expenditures” which will have as subproperties the specific URIs for expenditures in the various datasets). We will also be providing links to linked-data datasets (there are already some to DBpedia and to Geonames), and we are also working on linking these to some other datasets (like the ones on Supreme Court cases) that link in more interesting ways.

So we thank you for your notice, but point out that we are at the beginning of a long project, and there’s much exciting work to do (and not just by us, anyone else can create links between the datasets we’ve created, that’s why RDF is so valuable here – use our URIs and you don’t have to do your own data dump).

Finally, if you know anything about my work and my positions on the Semantic Web, you know that I have long been a proponent of the need to link the linked data – the Web in Semantic Web has been there from the very beginning, and the linking of datasets is described in the first major articles we wrote on the Semantic Web (per se) – like the well-known Berners-Lee et al. that appeared in Scientific American in 2001 (I’m the “et” in et al.)

So you are right that we need more, but you should be assured it is coming.

Fred

November 16, 2009 — 3:41 pm

Hi Mr. McDonald,

I agree, but let’s be careful about referring to internal/external IDs. I would refer to distinct “datasets” instead of internal/external data. The reason is that we can have accessible datasets (private with authentication, or public) that are not linked with any other ones. So, we are really talking about linkage between datasets. So, I would rephrase your statement above as “start with URIs from the same dataset, model it properly (by defining and making accessible the ontologies/vocabularies), before starting to worry about connecting to external…”

You are right.

I understand what you are saying: if we have a resource A that *knows* a resource B, then we know they are related in some way (then they would be inherently linked by virtue of their data). That is right. But the use case here is one where different agents create datasets with different data records (resources). Some of these resources are related to resources from another dataset, but more in a relational way (without being the same!). This is what these UMBEL properties are about.

So I think there is a distinction to make here.

This would create a really nice (and controlled, by the celebrity URIs) reasoning cascade. Even if compelling, I don’t think this can work properly (at least in the Open World; the idea could certainly be leveraged, and create value, in a closed system), because I don’t believe there will ever be any “celebrity URIs”. Some will be more prominent than others, but there won’t ever be a unique URI to identify a specific thing.

People want to be able to assert things they know, care about or know are true (at least to themselves). It is one of the reasons why multiple URIs will exist for the same (more or less) resource.

It can be good to have all these assertions made by different organizations. That way, you (as a user of data) can choose which assertions you trust from which organization. You have more flexibility that way, since I doubt anybody will really try to take all assertions made by everybody as true.

Thank you very much for this thoughtful comment, I certainly agree with the general idea and like some of the concepts you put forward!

Thanks,

Take care,

Fred

Fred

November 16, 2009 — 4:47 pm

Hi Mr. Hendler,

Thanks for taking the time to comment on this blog post. So, let me add a couple of things/observations regarding some parts of your comment.

I think the main point of this article hasn’t been properly understood (or was not made clearly enough). The point is not about external vs. internal linkage of resources. The point is about publishing and linking resources that haven’t been meaningfully/carefully/properly described in some kind of vocabulary/ontology.

Publishing a dataset of billions of triples without any ontology defining how things have been described has little, or no, value (at least to me) in itself, for the simple reason that such a dataset can’t be assessed, can’t be analyzed and so can’t be validated. Given all the things we can’t do with it, it has nearly no value, since no one can properly assess it in order to leverage it and create added value (linkage to external datasets, etc.).

As we stated in the article: it is putting the “cart before the horse”.

I may be a fool, but to me, the X hours spent publishing billions of triples that don’t have much (any?) meaning would be better spent taking Y data.gov datasets, properly creating (at least an initial version of) an ontology for each of them, and then publishing these Y datasets with their respective (and maybe even consolidated) ontologies. The number of triples won’t be as mind-blowing as it currently is, but at least a set of properly defined, reusable and valuable datasets would have been created and made public.

It is not about having the bigger car, but the more economic and performant one.

So, it is really a matter of process and steps. To me, both projects (yours and the NYT’s) lack the first step: creating (at least a first version of) the ontology that describes these datasets. If this piece is missing, the value of these datasets (even if linked) is greatly diminished. It is as if a thriller author published the notebook for a thriller one or two years before the thriller itself gets published.

I think RDF (and the related ontologies to come) is not valuable just because we can make links between resources (that is good, but it is certainly not all). To me, RDF is valuable to data.gov for a simple and good reason: it is the most flexible description framework out there. Additionally, several tools already support it. RDF is valuable to data.gov because it gives the right framework to convert any piece (dataset) of data.gov into the same description framework, without losing any information because of a lack of expressiveness. Even if different formats, vocabularies, serializations and ontologies have been used by the agencies that participate in data.gov, we know their data can be expressed in RDF without losing any information about their data records.

Then, everybody can use any dataset simply by using the same, canonical format. However, this beautiful aim can only be achieved if users know what the data means; hence the importance of the ontologies. If a Chinese sage tells me in Mandarin how to become a millionaire in two days, it would only be valuable to me if I understood Mandarin. Since I don’t, it is just sound going in one ear and out the other.

Finally, I don’t think anybody can re-use any URI of any resource if they can’t understand the description of the resource referred by these URIs. Why would I take that blue pill if I don’t know/understand what it is, what it does, and how my body should react by ingesting it? I think the same applies here.

This is exactly the point of this article. It is not a matter of linking for the sake of linking. It is a matter of defining what is linked before linking. There is a process to follow, and we don’t think linking is the first step. Additionally, the famous “four rules” are incomplete: linking doesn’t add any value if the linked resources are not defined. Something is not valuable just because it has a URI accessible on the Web. It is valuable because it is meaningfully described, then made accessible, and then linked to other meaningfully described resources. We stated that rules #3 and #4 were potentially incomplete (or lacking in specifics and guidance); now I am wondering if the order of the rules is right at all.

Things change; ideas evolve and affect any Grand Vision. What was right and appeared good in 2001 has possibly changed by 2009…

Thanks!

Take care,

Fred

Evan Sandhaus

November 16, 2009 — 6:19 pm

Fred,

Thank you for the deep analysis of our first foray into linked open data. Thank you also for acknowledging that we have already done much at The New York Times to address the community’s feedback on our early efforts.

I hope that we’ll hear from you at the community we’ve set up at http://data.nytimes.com/community.

As for the points you raise. I’m admittedly new to data modeling, but I think of a SKOS concept as akin to an entry in an index (say at the back of your favorite non-fiction book). Suppose, for the sake of argument, that we’re talking about a book about the American revolutionary war. Suppose further that this book has an index, and that the index contains an entry for “Thomas Jefferson,” followed by the pages on which we can find information about him.

It seems to me that, in this context, we can view the index heading “Thomas Jefferson” as the actual human being Thomas Jefferson as well as a “subject heading” in an index. Moreover, the metadata that attaches to this resource (the page numbers in this case) can be viewed as being about the person “Thomas Jefferson” in that we can state: There is a Person Thomas Jefferson that is written about on pages x, y, and z of such and such book.

Though I realize there are strong arguments on both sides of the issue, this is the view I take in declaring a skos:concept resource to be owl:sameAs a person, place or thing, and I don’t anticipate that we will be changing this.

As for using the UMBEL vocabulary as an alternative. This vocabulary is a fantastic resource and I applaud all the effort you and Mike have put into it. Unfortunately, it is published under a Creative Commons Share Alike License. Because of this, were we to incorporate this vocabulary into our work, individuals and organizations making use of NYT linked data would be compelled to release their data under a specific type of license. This would almost certainly have the effect of limiting the appeal of our data.

Again, I really enjoy hearing feedback on our work, and encourage you and any other interested folks to join the conversation at data.nytimes.com/community.

All the best,

Evan Sandhaus

—

Evan Sandhaus

Semantic Technologist

New York Times Research & Development

Fred

November 16, 2009 — 7:30 pm

Hi Mr. Sandhaus,

It is just normal to acknowledge any improvement of works that matter.

Before I respond to your comment, I just want to say the same thing I said to Mr. Hendler: the first point, and the main one, is the necessity of creating and releasing the ontology used to describe these datasets. It is one of the first steps (if not the first one) of this process, and not the last one, as we saw with these two main projects. Otherwise, the value of the published dataset is *greatly* diminished, if not nil.

This matters much more than any linkage specifics or issues. Linked data is not valuable if resources cannot be analyzed and understood. This seems to happen from time to time (as with what you have done for the NYT) because the LD rules lack clarity (I am now wondering whether the rules are not only unclear, but also incomplete and even badly ordered).

General assumptions, early projects and old documentation can lead to this situation. There is nobody to blame here, and the intention is not to blame anybody. I can appreciate that you have done your best, given the tools you had at hand, to make the most of the NYT’s dataset.

This post, like a series of others that Mike has published and that I publish from time to time, tries to voice our views on these matters: things to consider, things to take care of, methodologies to use to get the most out of datasets, etc.

We hope this new post is just another one in that series, trying to shake things up so that better and more valuable data gets published.

Now that the main point has been discussed, let’s discuss your other observations and comments!

Is the resource described at NYT a skos:Concept or a foaf:Person? It can’t be both. However, you can create two resources, one being a skos:Concept and one being a foaf:Person, and then link these two resources using a property like umbel:isAbout, or umbel:linksEntity.

I have no problem with creating a resource that is a skos:Concept, that is related to a named entity (without being a “person”, whatever that means), and that you can use as a kind of “conceptual index about real world named entities”.

But this resource *is not* the human being, and if you create sameAs assertions to other resources that “means” to be it (like dbpedia or freebase resources), you are asserting that your conceptual resource is the same as the Person one for example (which is a false assertion).

That is fine, and this is really up to you and the NYT. I have no problem with that since anybody can choose to use, or not to use, the NYT dataset, or to drop false assertions from it.

This is an interesting comment. Could you please explain what you mean by this? I am not sure I understand all the implications of your statement, and this could be important for the project (the reasons why you are not keen on using UMBEL).

Thanks in advance for giving some more precise examples and elaborating on this point 🙂

Thanks for your comment, discussing these matters is always a pleasure!

Take care,

Fred

Denny Vrandecic

November 17, 2009 — 2:15 pm

Hi Mike, Hi Fred,

Thank you for your interesting and thought-provoking post. I disagree on several points in your post.

* I disagree with the importance you put on the schema and mapping, and with your understanding of what a vocabulary is. You say: “At minimum, ANY dataset exposed as linked data needs to be described by a vocabulary. Both the NYT and RPI datasets fail on this score…” I would add: every linked data set is necessarily described by a vocabulary, the vocabulary being simply the set of all identifiers used. As far as this goes, no dataset can fail to provide a vocabulary. The NYT and RPI datasets fail to provide a schema (i.e. the specification of the formal relations between the terms in the vocabulary), and you state that without this (and, even better, a mapping to other existing sources) the datasets are useless. I strongly disagree, on account of several arguments:

** It is the Semantic Web. This means you can just define the mapping yourself. But (in the case of the NYT) you can not provide the data yourself. They are genuinely opening a unique data source. Thus they add value to the Semantic Web that only they could add, which is great. Why scold them for not providing a formal schema on top of that?

** Just providing some formal mappings to other existing vocabularies is nice, but not a requirement for data publishing. When ATOM was created no one asked the standardization committee to provide an RSS translator.

** I disagree that the usefulness goes to nil without the schema (or mapping). I can go ahead, load the NYT data and some DBpedia data, and out of the box ask for all articles about liberal Jews. I don’t need no schema to do so. Data first. Schema second. That was also the main message of John Giannandrea’s keynote talk at ISWC2008 in Karlsruhe.

* You derive that the Paul Newman instance at NYT means “New York Times articles in the period 2001 to 2009 having as their primary topic the actor Paul Newman”. I disagree. The Paul Newman URI identifies the actual person, not a set of articles. An instance of person can at the same time be an instance of skos:Concept. Now we just have to be careful in defining the properties linking from nyt:newman_paul_per: nyt:first_use means the date of the first article mentioning the subject, nyt:latest_use means the

* You say: “Thus, by the sameAs statements in the ‘Newman, Paul’ record, the NYT is also asserting that that record is the same as these other things: owl:Thing, foaf:Agent, …”. This is wrong. By stating that their Paul Newman instance is the same as the Paul Newman instance on DBpedia and Freebase, they do not state that Paul Newman is the same as owl:Thing, but rather that he is an instance of all the classes in this long list. And this actually seems quite fine.

Cheers,

denny

Ralph Hodgson

November 18, 2009 — 12:58 am

Enjoyed your article – I have similar motivations for understanding the semantics and provenance of data. This is why we have the oegov initiative – http://www.oegov.org

See also the post at Semantic Universe – http://www.semanticuniverse.com/topquadrant-monthly-column/group-blog-entry-oegov-open-government-through-semantic-web-technologies.

Ralph

glenn mcdonald

November 18, 2009 — 12:16 pm

Giving a Person first_use and latest_use is bad data-modeling, pure and simple. To see why, imagine a bunch of newspapers wanting to get on board with the NYTimes effort and schema. So the Boston Globe publishes their corresponding data, and now you try to combine the NYT set and the Globe set, and you get:

paul_newman

– type: person

– first_use: 2001-03-18

– first_use: 1999-08-24

– latest_use: 2009-10-15

– latest_use: 2009-04-05

Now you’re screwed. You can’t tell which firsts go with which latests, so you have no idea what the spans are anymore, let alone which spans go with which publications. This data-model *has* to have an intermediary type to bundle the relationships together correctly:

paul_newman_in_nytimes

– type: reference

– about: paul_newman

– publication: nytimes

– first_use: 2001-03-18

– latest_use: 2009-04-05

paul_newman_in_boston_globe

– type: reference

– about: paul_newman

– publication: boston_globe

– first_use: 1999-08-24

– latest_use: 2009-10-15

Thus my earlier point about the importance of doing the data-modeling right, first…
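To make the merge problem above concrete, here is a minimal Python sketch that treats each dataset as a plain set of (subject, predicate, object) tuples. The short identifiers and the helpers `values`, `reference` and `spans` are hypothetical and only illustrate the modeling point; this is not how either newspaper actually publishes its data.

```python
# Each dataset is a set of (subject, predicate, object) tuples.
nyt_flat = {
    ("paul_newman", "type", "person"),
    ("paul_newman", "first_use", "2001-03-18"),
    ("paul_newman", "latest_use", "2009-04-05"),
}
globe_flat = {
    ("paul_newman", "type", "person"),
    ("paul_newman", "first_use", "1999-08-24"),
    ("paul_newman", "latest_use", "2009-10-15"),
}

def values(graph, subject, predicate):
    """Sorted objects of every (subject, predicate, ?) triple in the graph."""
    return sorted(o for s, p, o in graph if s == subject and p == predicate)

# Flat model: merging leaves two starts and two ends, with nothing that
# says which start belongs with which end, or to which publication.
merged_flat = nyt_flat | globe_flat
print(values(merged_flat, "paul_newman", "first_use"))   # -> ['1999-08-24', '2001-03-18']
print(values(merged_flat, "paul_newman", "latest_use"))  # -> ['2009-04-05', '2009-10-15']

def reference(ref_id, about, publication, first, latest):
    """Intermediary 'reference' node bundling one publication's span."""
    return {
        (ref_id, "type", "reference"),
        (ref_id, "about", about),
        (ref_id, "publication", publication),
        (ref_id, "first_use", first),
        (ref_id, "latest_use", latest),
    }

merged_ref = (
    reference("paul_newman_in_nytimes", "paul_newman", "nytimes",
              "2001-03-18", "2009-04-05")
    | reference("paul_newman_in_boston_globe", "paul_newman", "boston_globe",
                "1999-08-24", "2009-10-15")
)

def spans(graph, about):
    """Recover (publication, first_use, latest_use) per reference node."""
    refs = sorted(s for s, p, o in graph if p == "about" and o == about)
    return [
        (values(graph, r, "publication")[0],
         values(graph, r, "first_use")[0],
         values(graph, r, "latest_use")[0])
        for r in refs
    ]

print(spans(merged_ref, "paul_newman"))
```

With the intermediary node, merging the two graphs is still a plain set union, yet each span stays attached to its publication.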

Denny Vrandecic

November 18, 2009 — 12:26 pm

One more thing, in your answer to Evan you state:

“Is the resource described at NYT a skos:Concept or a foaf:Person? It can’t be both.”

I disagree. It can be, and actually is, both. Why would skos:Concept be owl:disjointWith foaf:Person?

Denny Vrandecic

November 18, 2009 — 12:36 pm

Glenn,

Again, I disagree: it is not necessarily bad data modeling; you just have to be careful with how you define your property. If nyt:latest_mention means “the last time the New York Times had mentioned that subject”, then it is OK. It would be wrong for the Boston Globe to use the same property to state when they last mentioned the subject, though. But you are putting a meaning into the property and then stating that this meaning would be bad. You are entirely right that, with the meaning you give it, I would indeed be “screwed”.

So you can use the intermediary type as you suggest (which is usually a good idea and a common pattern for describing n-ary relations), or you can internalize that within the property. But it is definitely not as wrong or bad as you state.

Cheers,

denny

Fred

November 18, 2009 — 1:13 pm

Hi Denny!

Thanks for adding your vision to this discussion.

This world is full of points of view 🙂

As Mike said, a property or type label doesn’t make it an ontology. An ontology is composed not only of textual descriptions of properties and types, but also of a full set of relationships between all these properties and types. Using a property or type without making any ontology accessible makes the published resources nearly useless, because you don’t know how to use these properties and types.

If I send you a CSV file, with some column names… will you know if there is any relationship between any columns given the values of each record line? I doubt.

This is what we mean here.

This is the point: publishing data only for the sake of publishing data doesn’t make it valuable. If there is no way to know how to interpret the published data, the value of the dataset is greatly diminished.

Also, we are not scolding anybody here. We were in contact with Evan prior to this blog post, and he told us to send feedback if we had any. This is one of the reasons why we wrote this blog post.

This is our vision, and our observations vis-a-vis what is happening in the Linked Data community. We are suggesting that the 4 rules are not properly ordered, and probably not complete. If things never get shaken up for a reality check, how can they evolve?

Many people seem comfortable with what they see in all this Linked Data stuff.

We are not. We are not comfortable with what we see: we see flaws, we see a lack of understanding, a lack of well-defined documentation, a lack of well-defined practices.

This is a reason why we wrote this blog post, and the reason why we will write more about this stuff.

So no, we are not “scolding” anybody, just talking about things we think are issues vis-a-vis our way to view Linked Data.

Well, maybe it could make a good demo. And then, could you do this with the RPI data?

So, you are suggesting that you can use all FOAF person properties to describe SKOS concepts, and all SKOS concepts properties to describe SKOS concepts? If you have an instance of the foaf:Person class that is also an instance of the skos:Concept class, can you guarantee me that everything is conceptually and semantically OK with regard to the current definitions of the ontologies?

Right, thanks! I just fixed this in the text; it was not making sense. But still, this comes back to the question above: can an instance of the class foaf:Person be an instance of the class skos:Concept? Personally I say no, you say yes. Well, considering the big thread about that on the DBpedia mailing list, I don’t think we will sort this out here 🙂

Thanks!

Take care,

Fred

Denny Vrandecic

November 18, 2009 — 1:39 pm

“If I send you a CSV file, with some column names… will you know if there is any relationship between any columns given the values of each record line? I doubt.”

I sure can guess since I have human level intelligence. Computers are indeed worse at guessing. And I agree that a schema and a mapping would be better. But would it be sufficient?

I disagree that without the schema there is no value in publishing the data. If I were hard-pressed, I think I would measure the increase in value from publishing a schema or a mapping by the number of additional inferences. This can be big, but not as big as you state. And this is obviously where we disagree 🙂

“So, you are suggesting that you can use all FOAF person properties to describe SKOS concepts, and all SKOS concepts properties to describe SKOS concepts?”

No, I am not. I am not saying that foaf:Person and skos:Concept are equal, I am just saying they are not disjoint. And considering there is that big discussion you mention, it is at least questionable whether it is truly an error to state that Paul Newman is an instance of both classes, as you said in your blog post.

glenn mcdonald

November 18, 2009 — 2:05 pm

Denny says:

If nyt:latest_mention means “the last time the New York Times had mentioned that subject”, then it is OK.

No, that’s even worse data-modeling, sublimating a semantic relationship into the namespace prefix, where it can’t be queried.

Don’t defend this one. It’s just wrong.

Fred

November 18, 2009 — 2:09 pm

Hi Mr. Hodgson!

Thanks for sharing this initiative with us. First, the actual link to your article is here (some funny things happened with their URL).

After that, I would suggest that people read the ontologies that have been released so far.

So, if my understanding is right after some poking around, TopQuadrant is developing upper-layer ontologies that would be used as the foundational piece of e-government projects such as data.gov. These ontologies would tie together all the specific ontological modules from each dataset. That way, we ensure a consistent whole across all government data publication projects.

Is that right?

Thanks

Take care

Fred

Evan Sandhaus

November 18, 2009 — 2:16 pm

Glenn,

Given your objection, I am interested to know how you would express the following relation.

A person/place/thing is first mentioned in The New York Times on date x/y/z.

I can see no way around defining a narrow predicate to express this concept.

Thanks so much,

Evan Sandhaus

—

Evan Sandhaus

Semantic Technologist

New York Times Research & Development

@kansandhaus

Fred

November 18, 2009 — 2:28 pm

I think there is a big difference between guessing and creating value…

The only ones that I know that created value out of guessing are the winners at some lotteries…

Well, no, not without value; but something with minimal value is certainly not as good as something truly valuable.

This is a matter of stressing best practices. Isn’t that a best practice?

So please re-read the available documentation around owl:sameAs. It was clear in OWL 1.1, and it is still clear in OWL 2; people just used it because they didn’t have anything else at hand and didn’t want to create any new properties for this purpose (which we did in UMBEL for that specific reason). Now philosophers have started discussing it on a thread, and nobody will ever have a definitive answer about it. However, there is a definition of the semantics of the predicate, and using it everywhere for any purpose will just devalue it in the long run. But is this bad? Is this something we can prevent? Probably not. In the end, it is up to data consumers to figure out what is good for them and which assertions they trust. This is where the job will lie in the future. But in any case, there is room to put best practices in place to help data publishers create more value with what they publish, and we don’t think the ones currently being followed are the right ones. I don’t care who wrote what, or whether he is famous or not. We are only saying that there are practices that can be upgraded, enhanced and written down. We are not followers of any dogma, nor preachers; we are only trying to create more valuable things.

Thanks!

Take care,

Fred

Fred

November 18, 2009 — 2:55 pm

Hi Evan,

I will give some comments too.

Well, more complex relationships need more complex descriptions. So, what about something like this? (There are millions of ways to do this, so it is just one more.)

You have a foaf:Person resource, then you have an event nyt:MentionInNYT (you can change the name of this event:Event class if you want 🙂) and you relate the person resource to the event resource using the property nyt:mention. So, you would have something like this in N3:

<http://data.nytimes.com/N31738445835662083893> a foaf:Person ;
    foaf:name "Paul Newman" ;
    nyt:mention <http://data.nytimes.com/abc/…/> , <http://data.nytimes.com/xyz/…/> .

<http://data.nytimes.com/abc/…/> a nyt:MentionInNYT ;
    event:date "2001-03-18" .

<http://data.nytimes.com/xyz/…/> a nyt:MentionInNYT ;
    event:date "2009-04-05" .

That way, you know exactly all the dates on which a Person resource has been mentioned in NYT articles. To get the first one, you take the earliest date; to get the last one, you take the latest date.
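A minimal Python sketch of deriving the first and last mention from such events, assuming the mention resources have already been parsed into simple records. The record layout and the `first_and_last_mention` helper are hypothetical; a real consumer would more likely run a SPARQL query with MIN/MAX over event:date.

```python
from datetime import date

PERSON = "http://data.nytimes.com/N31738445835662083893"

# Hypothetical, already-parsed nyt:MentionInNYT events, one record per mention.
# The list order is deliberately not chronological.
mentions = [
    {"person": PERSON, "event": "xyz", "date": date(2009, 4, 5)},
    {"person": PERSON, "event": "abc", "date": date(2001, 3, 18)},
]

def first_and_last_mention(events, person):
    """Return (earliest, latest) mention dates for a person, or None if unmentioned."""
    dates = [e["date"] for e in events if e["person"] == person]
    if not dates:
        return None
    return min(dates), max(dates)

print(first_and_last_mention(mentions, PERSON))
```

The trade-off is that first/last use become query results rather than stored facts, so they can never drift out of sync with the mentions themselves.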

Then you could enhance the description of these mention events to link them to the document resources in which the mentions occur, such as:

<http://data.nytimes.com/abc/…/> a nyt:MentionInNYT ;
    nyt:mentionedIn <http://data.nytimes.com/some-article/> ;
    event:date "2001-03-18" .

<http://data.nytimes.com/some-article/> a bibo:Document ;
    dcterms:title "Some article title" .

Etc. So, by creating such “mention” events, you can describe much more information about the link between a person resource and the documents it has been mentioned in. Now you have a kind of temporal dimension that you can leverage to generate better search, better browsing and better visualization of your SAME data.

Thanks!

Take care,

Fred

Denny Vrandecic

November 18, 2009 — 4:41 pm

Glenn, Fred,

sorry, I still disagree. It is not “just wrong”. Yes, Fred’s later proposal is a possibility, but that’s it, just an alternative possibility to represent the same data.

All property instances can be reified by an individual representing that relationship. It is a design question whether to reify that relationship or to use a plain property. Both alternatives have advantages and disadvantages. On a reified property you can always add further statements, but you could just as well skip the reification and reify the statement on a when-needed basis.

I will give an example:

The property foaf:schoolHomepage relates a foaf:Person with the homepage of the school they have attended. One could say: “Oh, wait, this is wrong — they should add a foaf:school property, that links to the school itself, and the school would have a foaf:homepage property, and it would allow us to add more data about the schools.”

But then — is the new foaf:school property really sufficient? Shouldn’t we instead add a foaf:SchoolAttendance class, that you instantiate, and that has properties like foaf:schoolAttendee, foaf:attendedSchool, foaf:attendanceStart, foaf:attendanceEnd, etc. This would allow us to add even more information to this event. Better even, we should generalize this class to foaf:Attendance, and only by the fact that foaf:attendedOrganization is a foaf:School we should derive that this is a school attendance.

But FOAF, a rather successful vocabulary that Fred and Mike have also used as an example previously, chose not to do so. Why? I assume for pragmatic reasons (like having one triple instead of, uhm, many, and the fact that most schools simply didn’t have URIs back then), but we would have to ask DanBri or Libby about that.

The nice thing about the Semantic Web is that you can add further semantics to it. You can simply define that

foaf:schoolHomepage = foaf:school o foaf:homepage

with = being property equality and o being property chaining, both possible in OWL2. Furthermore, since foaf:homepage is an IFP, we can actually get hold of the school individual and make more statements about it.
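A rough Python sketch of the two inferences described above, under stated assumptions: the triples and URLs are made up, `foaf:school` is the hypothetical property from this comment (FOAF does not define it), and the code is naive forward chaining rather than an OWL reasoner. The first function corresponds to what an OWL 2 property chain axiom licenses (school followed by homepage implies schoolHomepage); the second relies on foaf:homepage being inverse functional.

```python
# Hypothetical triples; foaf:school is NOT a FOAF term, only the
# hypothetical property from the discussion.
triples = {
    ("ex:alice", "foaf:school", "ex:school_a"),
    ("ex:school_a", "foaf:homepage", "http://school-a.example/"),
    ("ex:bob", "foaf:schoolHomepage", "http://school-b.example/"),
    ("ex:school_b", "foaf:homepage", "http://school-b.example/"),
}

def compose(graph, p1, p2):
    """Property chain p1 o p2: all (x, z) such that x p1 y and y p2 z."""
    left = [(s, o) for s, p, o in graph if p == p1]
    right = [(s, o) for s, p, o in graph if p == p2]
    return {(x, z) for x, y in left for y2, z in right if y == y2}

def infer_school_homepage(graph):
    """Chain direction: foaf:school o foaf:homepage yields foaf:schoolHomepage."""
    return {(x, "foaf:schoolHomepage", z)
            for x, z in compose(graph, "foaf:school", "foaf:homepage")}

def infer_school_via_ifp(graph):
    """Reverse direction: since foaf:homepage is inverse functional, a homepage
    identifies its owner, so x foaf:schoolHomepage h recovers the school.
    (Several owners of one homepage would, under the IFP, be the same individual.)"""
    owners = {}
    for s, p, o in graph:
        if p == "foaf:homepage":
            owners.setdefault(o, set()).add(s)
    return {(x, "foaf:school", school)
            for x, p, h in graph if p == "foaf:schoolHomepage"
            for school in owners.get(h, set())}

print(infer_school_homepage(triples))
print(infer_school_via_ifp(triples))
```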

The same approach applies to the NYT data (although I am not sure OWL2 is sufficient here).

I don’t say that what NYT did is good or bad. It is a design decision, and they made it their way. As I have shown, one can translate losslessly from one design to the other, and thus they have chosen a more pragmatic approach that gets the job done. It certainly has fewer triples than Fred’s solution, which is also a perfectly valid and working solution.

I hope I have at least convinced you that it is not “just wrong”, but that the approach is a valid possibility.

Cheers,

denny

Denny Vrandecic

November 18, 2009 — 4:57 pm

Fred,

“The only ones that I know that created value out of guessing are the winners at some lotteries…”

I challenge you to provide me a definition of Paul Newman in your suggested vocabulary that does not involve some guessing. A definition like “Paul Newman (born 1937), the American linguist of great influence in the study of African languages” involves a lot of guessing about the meaning of terms like African, language, influence, etc.

This guessing is basically the same guessing that allowed me to guess that nyt:newman_paul_per is actually not the linguist, but the actor. The owl:sameAs relation to DBpedia allowed me to confirm that guess, since due to it I know that this individual was also an instance of the classes Jew, Actor, etc.

“So please re-read the available documentation around owl:sameAs.”

I actually did. And I still think that the statement

nyt:newman_paul_per owl:sameAs dbpediaresource:Paul_Newman

does not mean that I can “use all FOAF person properties to describe SKOS concepts, and all SKOS concepts properties to describe SKOS concepts”, as you have stated. It only means that these two identifiers refer to the same resource (which seems correct to me); it does not make any statement about the usage of SKOS concept properties or FOAF person properties.

By the way, as I already said to Mike, thanks for the interesting discussion, which also makes my own understanding of the concepts clearer — cheers,

denny

glenn mcdonald

November 18, 2009 — 5:02 pm

Evan:

You don’t need a narrow predicate. In fact, as with many data-modeling problems, this one actually gets easier if you take a broader perspective. Imagine that you are not just establishing a schema for your own data, but establishing a model schema that other newspapers could use for their corresponding data. And in particular imagine that somebody will want to *combine* your data with the corresponding data from other newspapers.

Thus the model I suggested in an earlier comment:

N31738445835662083893

– type: reference

– about: paul_newman

– publication: nytimes

– first_use: 2001-03-18

– latest_use: 2009-04-05

paul_newman

– sameAs: dbpedia.org/resource/Paul_Newman

– sameAs: rdf.freebase.com/ns/en.paul_newman

That is:

– change your Person nodes into References

– link each Reference to a Person URI, using whatever “about” predicate you like

– move your owl:sameAs statements to equate Persons with the corresponding Freebase/dbpedia URIs.

Or, even simpler, you could just *use* the dbpedia URIs, instead of having your own URIs for people. So this:

N31738445835662083893

– type: reference

– about: dbpedia.org/resource/Paul_Newman

– publication: nytimes

– first_use: 2001-03-18

– latest_use: 2009-04-05

After all, the people are not unique to the Times, so there’s no real point in having Times-specific URIs for them, unless you have a lot of people who don’t have dbpedia.org URIs (which I didn’t check, so maybe you do).

You can insert the “publication” or not, that’s up to you. Clearly it’s implicit in your data, for your own purposes, but anybody trying to merge your data with other newspapers’ data is going to need it, so you might as well help them out. In fact, since you’re establishing a precedent that other newspapers are (hopefully?) (likely?) to follow, including that detail in your model will help later integrators out a *lot*.

Fred

November 18, 2009 — 5:03 pm

Don’t be sorry, it is okay 🙂

Let’s not get lost in a discussion about good and bad design; it is getting out of scope for this thread.

However, the conversation was really about best practices and the availability of an ontology (start simple and grow it over time).

Maybe for the use of property A or B: as I said, there are millions of ways. But if not releasing the ontology at the same time as the data is a design decision, then to me this is bad design. What is your definition of pragmatic? Why are the NYT’s design decisions pragmatic and not mine? Can’t pragmatic things be a little bit more complex? Pragmatic doesn’t mean over-using a term whose semantics were specified (not by me, but by a W3C committee), and not publishing the ontologies of published data.

It is not a question of pragmatic design considerations or not, it is a matter of good use of existing terms, creation of new terms, and if new terms are created, then to publish, at least, some initial ontologies/vocabularies around these terms.

If people don’t do that, I personally don’t mind. One thing this post is about is making people aware of these considerations, because every day I read about people who are lost in the Linked Data space… and there is a reason for that, and this is one of the reasons.

If normal rules and guidelines are not followed, if value is lacking, if… I don’t care; I can simply ignore these datasets. But some people do care about this stuff, and some people who publish datasets wonder how they should proceed. What we are trying to do is help them make their work better and more valuable.

People are not satisfied with the same things. And we are not satisfied with the current state of the Linked Data principles, so it is why we write about this stuff.

Did I say it was wrong? I think I said it was not as valuable as it could be 🙂

Thanks!

Take care,

Fred

Fred

November 18, 2009 — 5:10 pm

Hi Denny!

Okay, I think this certainly gives a good conclusion to this conversation and some kind of “agreement” 🙂

Do we agree? 🙂

Thanks,

Take care,

Fred

glenn mcdonald

November 18, 2009 — 5:13 pm

Denny:

No, this person/reference thing really is a modeling error. That doesn’t inherently make it a bad *publishing* decision. It’s certainly fairly common to make deliberate modeling errors in order to live with simpler structures that are adequate for some particular purpose. The NYTimes model, as is, is adequate in isolation.

But to the extent that this data is supposed to be mixable with other data (it’s Linked Data, not just Open Data), and especially to the extent that the Times is leading by example, and thus establishing an example that other newspapers might follow, then this data-model is *not* just going to live in isolation, and thus this particular error matters.

And it’s eminently and easily fixable, after all. Doing the model correctly is not appreciably harder or worse for the Times, and it makes the data more usable, not less. So there’s really no tradeoff here.

Denny Vrandecic

November 18, 2009 — 5:19 pm

Hi Fred!

I think we do 🙂

Cheers,

denny

glenn mcdonald

November 18, 2009 — 5:22 pm

Evan, Fred:

Fred suggests ditching the first_use/latest_use entirely, and just deriving them by querying the articles’ dates. But this is really two separate issues:

1. It would definitely be cooler to expand the model to include all the individual articles, with their individual attributes. But obviously this is non-trivial work for you.

2. But even if you did that, it’s very reasonable to have your data-model also go ahead and represent, directly, some extra things (article count, first use, latest use) that *could* be queried, precisely so that they don’t have to be queried. There’s a minor data-redundancy argument against doing this, but it only really applies if you’re *maintaining* the dataset that way, so in this case where the RDF is being (I assume) generated out of some other database of record, it doesn’t matter.

Denny Vrandecic

November 18, 2009 — 5:47 pm

Glenn,

“No, this person/reference thing really is a modeling error.”

Sorry, now I am confused. Can you please explain what the error is?

glenn mcdonald

November 18, 2009 — 5:58 pm

See http://fgiasson.com/blog/index.php/2009/11/16/when-linked-data-rules-fail/#comment-286267, above!

Denny Vrandecic

November 18, 2009 — 6:27 pm

Glenn,

that is what I tried to argue with the foaf:schoolHomepage example. You can reify the relation as you suggest, but you don’t have to.

Basically, the following rules resolve the formal relations between your suggestion (using the prefix glenn:) and the current NYT export (using the prefix nyt:):

nyt:last_use(x,y) = glenn:about(z,x) & glenn:last_use(z,y) & glenn:publication(z,glenn:nytimes)

nyt:first_use(x,y) = glenn:about(z,x) & glenn:first_use(z,y) & glenn:publication(z,glenn:nytimes)

Sorry, it isn’t pretty. The rule is given in datalog syntax; I am not sure whether it can be represented in OWL2 (I would need to ask the OWL2 crowd), but it works in RIF. Here z is the newly introduced, reifying individual in your solution.

This solution shows that both modeling approaches can have equivalent semantics, thus I find it hard to regard either one as an error (just as I don’t say that the semantics of foaf:schoolHomepage is an error. It’s a choice)

Adding this axiom to a SPARQL endpoint with an appropriate entailment regime will yield the same results whether you use your RDF or the NYT one (apart from URI naming).
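To illustrate the rules above, here is a minimal Python sketch that applies them by plain forward chaining over a set of triples. The reference IDs (`ref1`, `ref2`) are hypothetical; the dates are the ones used earlier in the thread. It only sketches the rule semantics, not a RIF engine or a SPARQL entailment regime.

```python
# Hypothetical triples in the reified model (prefix glenn:).
reified = {
    ("ref1", "glenn:about", "paul_newman"),
    ("ref1", "glenn:publication", "glenn:nytimes"),
    ("ref1", "glenn:first_use", "2001-03-18"),
    ("ref1", "glenn:last_use", "2009-04-05"),
    ("ref2", "glenn:about", "paul_newman"),
    ("ref2", "glenn:publication", "glenn:boston_globe"),
    ("ref2", "glenn:first_use", "1999-08-24"),
    ("ref2", "glenn:last_use", "2009-10-15"),
}

# Maps each reified property to the NYT-specific property it yields.
RULES = {"glenn:first_use": "nyt:first_use", "glenn:last_use": "nyt:last_use"}

def derive_nyt(graph):
    """nyt:P(x, y) <- glenn:about(z, x), glenn:P(z, y), glenn:publication(z, glenn:nytimes)."""
    nyt_refs = {z for z, p, o in graph
                if p == "glenn:publication" and o == "glenn:nytimes"}
    about = {(z, x) for z, p, x in graph if p == "glenn:about" and z in nyt_refs}
    return {(x, RULES[p], y)
            for z, x in about
            for z2, p, y in graph if z2 == z and p in RULES}

print(sorted(derive_nyt(reified)))
```

Only the reference whose publication is glenn:nytimes contributes, which is why the Boston Globe dates do not leak into the derived nyt: triples.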

Sorry for the technicality.

glenn mcdonald

November 18, 2009 — 7:09 pm

Denny:

Yes, that’s a formalization of the transformation from this wrong model to the right one. But it would only work if each newspaper used a unique predicate, which is exactly counter to the idea of common ontologies.

But again, getting the model right is easy, and has really no bad effects on anything, so I see no practical point in defending the existing model at all. Much less in proposing, to an organization earnestly and admirably trying to just get the Linked Data basics right, some hypothetical solution combining a needlessly bad data-model with an inference infrastructure they don’t have and have probably never even heard of!

Denny Vrandecic

November 18, 2009 — 7:43 pm

Hi Glenn,

I think I finally got it: we had a different understanding of the term “model”. I was using the technical term as in “model theory”, in which case both RDF sets are actually satisfied by the same model (and thus one cannot be bad and the other good). You were referring to the concrete representation of this in RDF triples. Sorry for my confusion.

“But it would only work if each newspaper used a unique predicate”

Correct.

“which is exactly counter to the idea of common ontologies.”

Mostly correct. I do think that on the SemWeb everyone should be able to extend their ontologies. In that case — and now we come full circle to Fred’s original point — it would be really helpful to formally define that extension with a schema and a mapping. It would make sense to have a generic

news:last_use

property that is somehow linked to nyt:last_use, e.g. like this:

nyt:last_use rdf:type news:last_use .

nyt:last_use news:for_paper glenn:nytimes .

and thus can again relate the common ontology to that one extended by the NYT (This is meant as plain RDF, but is also OWL2 compatible. Yay! And it needs no RIF semantics and inference structure).

In SPARQL it enables the very same queries that are enabled in your system, but quite a number of triples and individuals can be saved in the serialization of the data.
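A small Python sketch of how a consumer could exploit such schema triples, assuming they are published alongside the data: any property typed as news:last_use is read as a last-use statement for the paper named by news:for_paper. The `globe:last_use` property and the `ex:paul_newman` subject are hypothetical additions that show two papers side by side.

```python
# Schema triples in the style proposed above; globe:last_use is hypothetical.
schema = {
    ("nyt:last_use", "rdf:type", "news:last_use"),
    ("nyt:last_use", "news:for_paper", "glenn:nytimes"),
    ("globe:last_use", "rdf:type", "news:last_use"),
    ("globe:last_use", "news:for_paper", "glenn:boston_globe"),
}

# Hypothetical data from two papers, each using its own property.
data = {
    ("ex:paul_newman", "nyt:last_use", "2009-04-05"),
    ("ex:paul_newman", "globe:last_use", "2009-10-15"),
}

def last_uses(schema, data):
    """Normalize paper-specific properties into (subject, paper, date) records."""
    typed = {s for s, p, o in schema if p == "rdf:type" and o == "news:last_use"}
    paper_of = {s: o for s, p, o in schema if p == "news:for_paper"}
    return {(s, paper_of[p], o) for s, p, o in data
            if p in typed and p in paper_of}

print(sorted(last_uses(schema, data)))
```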

The only reason I am defending the nyt:last_use property as it is, is because I deem it both elegant and correct. We obviously disagree on the “elegant” part.

For an example that actually went wrong, see what linkedgeodata.org is doing. If you use the REST API to query for info about a specific point, like here:

http://linkedgeodata.org/triplify/near/51.033333,13.733333/1000

it contains a triple like this:

node:367589550#id lgeo:distance "954" .

The subject is a location, and it has the distance 954. This is calculated with regard to the request you sent, i.e. if you move the requested location around, the distance will change (I pointed them to the bug a few weeks ago, so I don’t feel bad about disclosing it publicly anymore 🙂).

This again is elegant (in my opinion), but it is wrong, and there is no way to remedy it, i.e. to give it an acceptable semantics. Here the model itself is wrong, whereas in the NYT example we are just speaking about a syntactic, or rather representational, issue that can be resolved with a formally specifiable semantics.
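The problem can be illustrated with a small Python sketch: the distance is a function of two points, the node and the requested location, so a triple that attaches it to the node alone cannot say which value it means. Only the first query point comes from the URL above; the node coordinates and the second query point are hypothetical, and the haversine great-circle formula is just one assumed way such a distance might be computed (how linkedgeodata.org actually computes it is not stated here).

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius in metres (an approximation)


def haversine_m(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in metres between two (lat, lon) points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


# Hypothetical coordinates for the node; its real position is not given above.
NODE = (51.04, 13.74)

# The query point from the URL above, plus a second, hypothetical query point.
QUERY_A = (51.033333, 13.733333)
QUERY_B = (51.05, 13.70)

# Same node, two requests: the "distance of the node" comes out different each time.
dist_a = haversine_m(*QUERY_A, *NODE)
dist_b = haversine_m(*QUERY_B, *NODE)
print(f"node lgeo:distance {dist_a:.0f}  (requested from QUERY_A)")
print(f"node lgeo:distance {dist_b:.0f}  (requested from QUERY_B)")
```

Because the value depends on which point was requested, no single lgeo:distance value on the node is true independently of the request, which is the modelling error described above.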

So, this is just to explain my misunderstanding and why I defended the property as is. I do understand and appreciate your point and regard it as a valid alternative.

Best,

denny

David

February 18, 2010 — 2:25 am

Fred –

Very interesting… haven’t read your entire article or all the comments yet…

At the moment I’m going to side with Jim Hendler’s remarks that his project is the FIRST STEP in a long, long journey.

First you have to expose the data to start seeing the discrepancies… some folks report “expenditures” as positive numbers & others as negative ones. Who would have thought?

My analogies:

– In the physical world, multiple constituencies (the dairy, the brand aggregator, the distributor, the local food inspector, the grocery store manager, the customer) make sure that when you go to the grocery store, you ALWAYS find milk in milk jugs. You NEVER find orange juice or motor oil in milk jugs.

We’re not even remotely close to such congruence (i.e. between expectations & reality) in the data realm. It’s Tuesday? Well, that must mean we’ll find my mail in your post box.

In the early days of the Industrial Age in the UK, it took 75 years to agree on screw thread standards. And there are still 5 standard threads in the UK today, 250 years later.

First step is to make the data visible… make it possible for MANY eyeballs to look at this crap.

Once people start SEEING that my FOOBAR is your FUBAR then we can move forward with figuring out what to do.

It’s going to be a long, slow process.

Looking at it from another angle… have you ever worked with an organization with SERIOUS data quality issues? What’s the organizational response when you try, ever so diplomatically, to point out data quality issues? Ever tried to talk to a senior manager about data quality issues?

People who work with data know it’s often incomprehensible crap… and they’ve long since learned to keep their personal observations to themselves, even though the bad data is clearly costing the organization serious money.

Just my two cents…