Preamble

As I explained in my latest blog post, I am now starting to talk about a couple of things I have been working on over the last few months that will lead to a release by Structured Dynamics in the coming months. This blog post is the first step along that path. Enjoy!

Introduction

I have been working with RDF, SPARQL and triple stores for years now, and I have created many prototypes and online services using these technologies. Being able to describe everything with RDF, index everything in a triple store, and query it any way you want with SPARQL is priceless. Using RDF saves development and maintenance costs because of the flexibility of the store (the triple store), the query language (SPARQL) and the associated schemas (ontologies).

However, even if this set of technologies can do everything quickly and efficiently, it is not necessarily optimal for every task you have to perform. As we will see in this blog post, we use RDF to describe, integrate and manage any kind of data (structured or unstructured) that exists out there. RDF + Ontologies are what we use as the canonical expression of any kind of data. The triple store is what we use to aggregate, index and manage that data, from one or multiple data sources, and it is the same triple store that we use to feed any other system in our architecture. The triple store is the data orchestrator in any such architecture.

In this blog post I will show you how this orchestrator can be used to create Solr indexes that perform three functions Solr has been built to perform optimally: full-text search, aggregates and filtering. While a triple store can perform these functions, it is not optimal for what we have to do.

Overview

The idea is to use the RDF data model and a triple store to populate the Solr schema index. We leverage a powerful and flexible data representation framework (RDF), together with a piece of software that lets you do whatever you want with that data (Virtuoso), to feed a carefully tailored Solr schema index that optimally performs three things: full-text search, aggregates and filtering. We also want to leverage the ontologies used to describe this data to infer things about the resources indexed in Solr. This enables us to use inference for full-text search, aggregates and filtering, in Solr! This is quite important, since you will be able to perform full-text searches filtered by inferred types!

Some people will tell me that they can do this with a traditional relational database management system: yes. However, RDF + SPARQL + Triple Store is so powerful for integrating any kind of data, from any data source, and so flexible, that it saves precious development and maintenance resources, and therefore money.

Solr

What we want to do is to create some kind of “RDF” Solr index. We want to be able to perform full-text searches on RDF literals; we want to be able to aggregate RDF resources by the properties that describe them, and their types; and finally we want to be able to do all the searches, aggregation and filtering using inference.

So the first step is to create the proper Solr schema that will let you do all these wonderful things.

The current Solr index schema can be downloaded here. (If clicking the link simply opens it in your browser, use View Source to see the XML.)

Now, let’s discuss this schema.

Solr Index Schema

A Solr schema is basically composed of two things: fields and field types. For this schema, we only need two field types: string and text. For more information about these two types, I refer you to the Solr documentation for a complete explanation of how they work. For now, just consider them as strings and texts.

What interests us is the list of defined fields of this schema (again, see download):

- uri [1] – Unique resource identifier of the record

- type [1-N] – Type of the record

- inferred_type [0-N] – Inferred type of the record

- property [0-N] – Property identifier used to describe the resource and that has a literal as object

- text [0-N] (same number as property) – Text of the literal of the property

- object_property [0-N] – Property identifier used to describe the resource where the object is a reference to another resource, and where that other resource can itself be described by a literal

- object_label [0-N] (same number as object_property) – Text used to refer to the resource referenced by the object_property
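
To make the field list concrete, here is a minimal Python sketch (standard library only) that serializes one record into a Solr XML `<add>` update message. The record and its URIs are hypothetical, and keeping `property`/`text` (and `object_property`/`object_label`) aligned by position is an assumption drawn from the "same number as" notes above; the downloadable schema remains the authority on the field definitions.

```python
import xml.etree.ElementTree as ET


def solr_add_message(record: dict) -> str:
    """Serialize one record as a Solr XML <add> update message.

    Keys are schema field names (uri, type, inferred_type, property, text,
    object_property, object_label); values are a string or a list of strings.
    Multi-valued fields become repeated <field> elements, kept in list order
    so that property[i] still lines up with text[i].
    """
    add = ET.Element("add")
    doc = ET.SubElement(add, "doc")
    for name, values in record.items():
        if isinstance(values, str):
            values = [values]
        for value in values:
            ET.SubElement(doc, "field", name=name).text = value
    return ET.tostring(add, encoding="unicode")


# Hypothetical record for one article written by Bob
article = {
    "uri": "http://example.org/article/1",
    "type": ["http://purl.org/ontology/bibo/Article"],
    "inferred_type": ["http://purl.org/ontology/bibo/Document"],
    "property": ["http://purl.org/dc/terms/title"],
    "text": ["Indexing RDF with Solr"],
    "object_property": ["http://purl.org/dc/terms/creator"],
    "object_label": ["Bob Carron"],
}
print(solr_add_message(article))
```

Multi-valued fields are simply repeated `<field>` elements with the same name, which is how Solr's XML update format expresses them.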

Full Text Search

An RDF document is a set of triples describing one or more resources. Saying that you are doing full-text searches on RDF documents is certainly not the same thing as saying that you are doing full-text searches on traditional text documents. When you describe a resource, you rarely have more than a couple of strings, with a couple of words each: generally the name of the entity, or a label that refers to it. You will have various numbers, and sometimes a description (a short biography, a definition or a summary, for example). However, unless you index an entire text document, the “textual abundance” is quite poor compared to an indexed corpus of documents.

In any case, this doesn’t mean that there are no advantages to doing full-text searches on RDF documents (that is, on RDF resource descriptions). But if we are going to do so, let’s do it completely, and in a way that meets users’ expectations for full-text document search. With this mindset, we can pull off some cool new tricks!

Intuitively, the first implementation of a full-text search index on RDF documents would simply make a key-value pair assignment between a resource URI and its related literals. So, when you perform a full-text search for “Bob”, you get references to all the resources that have “Bob” in one of the literals that describe them.

This is good, but it is not enough, because it breaks the most basic behavior that users expect from full-text search engines.

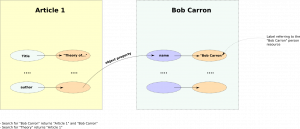

Let’s say that I know the author of many articles is named “Bob Carron”. I have no idea what the titles of his articles are, so I want to search for them. With the system described above, if I search for “Bob Carron”, I will most likely get back the reference to “Bob Carron”, the author person. This is good, but this is not enough.

On the results page, I want the list of all the articles that Bob wrote! Because of the nature of RDF, the “full-text” information “Bob” is not in the description of the articles he wrote. Most likely, in RDF, each article will be related to Bob by reference (an object reference to Bob’s URI), i.e., <this-article> <author> <bob-uri>. As you can see, we won’t get back any articles in the resultset for the full-text query “Bob Carron”, because this textual information doesn’t exist in the index at the level of the articles he wrote!
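
The failure is easy to reproduce with a toy, in-memory stand-in for the naive URI-to-literals index. This is a Python sketch, not a Solr setup; the prefixed names (`ex:bob`, `dcterms:creator`) and the simple substring matching are illustrative assumptions.

```python
from collections import defaultdict

# Hypothetical sample data: (subject, predicate, object, object_is_literal)
TRIPLES = [
    ("ex:bob", "foaf:name", "Bob Carron", True),
    ("ex:article1", "dcterms:title", "Indexing RDF with Solr", True),
    ("ex:article1", "dcterms:creator", "ex:bob", False),  # reference, not text
]


def naive_index(triples):
    """Map each subject to the literals that directly describe it."""
    index = defaultdict(list)
    for subject, _predicate, obj, is_literal in triples:
        if is_literal:
            index[subject].append(obj)
    return index


def search(index, query):
    """Subjects whose literals contain every query word (case-insensitive)."""
    words = query.lower().split()
    return sorted(
        uri
        for uri, texts in index.items()
        if all(any(word in text.lower() for text in texts) for word in words)
    )


print(search(naive_index(TRIPLES), "Bob Carron"))  # ['ex:bob']; the article is missed
```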

So, what can we do?

A simple trick does the work beautifully. When we create the Solr index, we add the textual information of the resources referenced by the indexed resources. For example, when we create the Solr document that describes one of the articles written by Bob, we add the literal that refers to the resource(s) referenced by this article; in this case, the name of the author(s) goes into the full-text record of that article. With this simple enhancement, if we search for “Bob Carron”, we now also get the list of all the resources that refer to Bob (articles he wrote, other people who know him, etc.)!

This is the purpose of the “object_property” and “object_label” fields of the Solr index. In the schema above, the “object_property” would be “author” and the “object_label” would be “Bob Carron”. This information would belong to the Solr document of Article 1.
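
Here is a Python sketch of that enrichment step on a tiny hypothetical dataset: each document gets `object_property`/`object_label` pairs holding the label of every resource it references, and the search looks at both `text` and `object_label`. Treating a resource's first literal as its label is a simplifying assumption; a real indexer would pick a name or label property from the ontology.

```python
# Hypothetical sample data: (subject, predicate, object, object_is_literal)
TRIPLES = [
    ("ex:bob", "foaf:name", "Bob Carron", True),
    ("ex:article1", "dcterms:title", "Indexing RDF with Solr", True),
    ("ex:article1", "dcterms:creator", "ex:bob", False),
]


def build_documents(triples):
    """One Solr-style document per subject, with the labels of referenced
    resources copied in as object_property / object_label pairs."""
    # Assumption: a resource's label is simply its first literal.
    label = {}
    for subject, _predicate, obj, is_literal in triples:
        if is_literal:
            label.setdefault(subject, obj)

    docs = {}
    for subject, predicate, obj, is_literal in triples:
        doc = docs.setdefault(subject, {"uri": subject, "property": [], "text": [],
                                        "object_property": [], "object_label": []})
        if is_literal:
            doc["property"].append(predicate)
            doc["text"].append(obj)
        elif obj in label:  # a reference to a resource that has a label
            doc["object_property"].append(predicate)
            doc["object_label"].append(label[obj])
    return docs


def search(docs, query):
    """Subjects whose text or object_label values contain every query word."""
    words = query.lower().split()
    hits = []
    for uri, doc in docs.items():
        texts = [t.lower() for t in doc["text"] + doc["object_label"]]
        if all(any(word in t for t in texts) for word in words):
            hits.append(uri)
    return sorted(hits)


docs = build_documents(TRIPLES)
print(docs["ex:article1"]["object_label"])  # ['Bob Carron']
print(search(docs, "Bob Carron"))  # ['ex:article1', 'ex:bob']
```

The index grows a little (every referencing document repeats the referenced label), which is the price paid for matching articles by their author's name.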

Full Text Search Prototype

Let’s take a look at the prototype running system (see screen capture below).

The dataset loaded in this prototype is Mike’s Sweet Tools. As you can see in the prototype screen, many things can be done with the simple Solr schema published above. Let’s start with a search for the word “test”. First, we get a resultset of 17 things that have the word “test” in one of their text-indexed fields.

What is interesting about that list is the additional information we now have for each result, which comes from the RDF description of these things and from the ontologies used to describe them.

For example, if we take a look at Result #4, we see that the word “test” has been found in the description of the Ontology project for the “TONES Ontology Repository” record. Isn’t that precision far more useful than saying: the word “test” has been found in “this webpage”? I’ll let you think about it.

Also, if we take a look at Result #1, we know that the word “test” has been found in the homepage of the Data Converter Project for the “Talis Semantic Converter” record.

Additionally, by leveraging this Solr index, we can efficiently aggregate the types of the things returned in the resultset for further filtering. So, in the “Filter by kinds” section, we know what kinds of things are returned for the query “test” against this dataset.

Finally, we can use the drop-down box on the right to do a new search (see screenshot) based on the specific kinds of things indexed in the system. For example, I could run a new search only for “Data specification projects” with the keyword “rdf”. I already know from the user interface that there are 59 such projects.
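
In Solr terms, the “Filter by kinds” counts and the kind-restricted search map onto standard request parameters: `facet=true` with one `facet.field` per faceted field, and an `fq` filter query. The Python sketch below builds such a request URL and reads facet counts from a JSON response. The base URL, the choice of fields and the query syntax (searching both `text` and `object_label`) are assumptions for illustration, and real user input would need Solr query escaping.

```python
from urllib.parse import urlencode


def search_url(base_url, words, kind=None, rows=10):
    """Build a Solr select URL for a full-text search with type facets.

    If `kind` (a class URI) is given, results are restricted to records whose
    explicit or inferred type is that class.
    """
    params = [
        ("q", f"text:({words}) OR object_label:({words})"),
        ("rows", str(rows)),
        ("wt", "json"),
        ("facet", "true"),
        ("facet.mincount", "1"),  # hide kinds with no matching records
        ("facet.field", "type"),
        ("facet.field", "inferred_type"),
    ]
    if kind is not None:
        params.append(("fq", f'type:"{kind}" OR inferred_type:"{kind}"'))
    return base_url + "?" + urlencode(params)


def facet_counts(response, field):
    """Solr's JSON writer returns facet_fields as a flat [value, count, ...] list by default."""
    flat = response["facet_counts"]["facet_fields"][field]
    return dict(zip(flat[0::2], flat[1::2]))
```

Putting the kind restriction in `fq` rather than in `q` keeps it out of relevance scoring and lets Solr cache the filter independently of the keywords.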

All this information comes from the Solr index at query time, basically for free by virtue of how we set up the system. Everything is dynamically aggregated and displayed to the user.

However, a few things are used here that you won’t notice: 1) SPARQL queries to the triple store to get more information to display on that page; 2) inference (more about it below); and 3) the ontology descriptions.

In any case, on one of SD’s test datasets of about 3 million resources, such a page is generated within a few hundred milliseconds: resultset, aggregates, inference and descriptions of the things displayed on that page. And that 3-million-resource dataset returned results in a few hundred milliseconds on a small Amazon EC2 server instance costing 10 cents per hour. How’s that for performance?!

Aggregates and Filtering on Properties and Types

But we don’t want to merely do full-text search on RDF data. We also want to do aggregates (how many records have this type, or this property, etc.) and filtering, at query time, in a couple of milliseconds. We already had a look at these two functions in the context of a full-text search. Now let’s see them in action in some prototype dataset browsing tools that use the same Sweet Tools dataset.

In a few milliseconds, we get the list of the different kinds of things indexed in a given dataset: what the types are, and the count for each of them. The ontologies drive the taxonomic display of the list of things indexed in the dataset, and Solr drives the aggregation counts for each of these types.

Additionally, the ontologies and the Virtuoso inference rules engine are used to compute the counts by inference. If we take the example of the type “RDF project”, we know there are 49 such projects. However, not all of these projects are explicitly typed with the “RDF project” type: 7 of them are typed as “RDF editor project” and 6 as “RDF generator project”.
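
The inferred counts can be illustrated with a small Python sketch that computes the transitive closure of `rdfs:subClassOf` and counts each record under its explicit and inferred types. In the system described here, Virtuoso’s inference rules do this work; the class names and records below are hypothetical stand-ins for the Sweet Tools classes.

```python
from collections import Counter


def ancestors(cls, subclass_of):
    """All superclasses reachable from `cls` through subClassOf links."""
    seen, stack = set(), [cls]
    while stack:
        for parent in subclass_of.get(stack.pop(), ()):
            if parent not in seen:
                seen.add(parent)
                stack.append(parent)
    return seen


def inferred_types(explicit, subclass_of):
    """Types implied by the ontology but not stated on the record (inferred_type field)."""
    implied = set()
    for cls in explicit:
        implied |= ancestors(cls, subclass_of)
    return implied - set(explicit)


def type_counts(records, subclass_of):
    """Count each record once under every explicit or inferred type."""
    counts = Counter()
    for explicit in records.values():
        counts.update(set(explicit) | inferred_types(explicit, subclass_of))
    return counts


# Hypothetical ontology fragment and records
SUBCLASS_OF = {
    "RDFEditorProject": ["RDFProject"],
    "RDFGeneratorProject": ["RDFProject"],
    "RDFProject": ["Project"],
}
RECORDS = {
    "tool1": ["RDFProject"],
    "tool2": ["RDFEditorProject"],
    "tool3": ["RDFGeneratorProject"],
}
counts = type_counts(RECORDS, SUBCLASS_OF)
print(counts["RDFProject"], counts["RDFEditorProject"])  # 3 1
```

Computing `inferred_types` once at indexing time and storing the result in the `inferred_type` field is what lets Solr return inference-aware counts without reasoning at query time.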

This is where inference can play an important role: an article is a document. If I browse documents, I want to include articles as well. This “broad context retrieval” is driven by the description of the ontologies, and by inference; this is the same thing for these projects; and this is the same thing for everything else that is stored as structured RDF and characterized by an ontology.

The screenshot above shows how these inferences and their nestings could present themselves in a user interface.

Once the user clicks on one of these types, he starts to browse all things of that type. On the next screenshot below, Solr is used to add filters based on the attributes used to describe these things.

In some cases, I may want to see all the Projects that have a review. To do so, I would simply add this filter criterion on the browsing page and display the “Projects” that have a “review” of them. And thanks to Solr, I already know how many such Projects have reviews before even looking at them.
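
A browse page like this one can get its numbers without fetching any items: filter queries narrow the set, `rows=0` skips the documents, and `numFound` in the response gives the count. The Python sketch below builds such a request for “records of a given kind that use given properties”. The URIs are hypothetical, and matching a property against both `property` and `object_property` is an assumption about how something like a review would be modeled.

```python
from urllib.parse import urlencode


def browse_count_url(base_url, kind, required_properties=()):
    """Count records of `kind` that use every property in `required_properties`,
    and facet on properties so the UI can offer further filters."""
    params = [
        ("q", "*:*"),
        ("rows", "0"),  # only counts are needed, not the records themselves
        ("wt", "json"),
        ("fq", f'type:"{kind}" OR inferred_type:"{kind}"'),
        ("facet", "true"),
        ("facet.field", "property"),
        ("facet.field", "object_property"),
    ]
    for prop in required_properties:
        # One fq per required property; Solr intersects multiple fq parameters.
        params.append(("fq", f'property:"{prop}" OR object_property:"{prop}"'))
    return base_url + "?" + urlencode(params)


def num_found(response):
    """Number of matching records in a Solr JSON response."""
    return response["response"]["numFound"]
```

The actual list of items can then come from elsewhere (a SPARQL query, in the setup described here), while Solr only supplies the counts and filters.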

Note, then, that on this screenshot the filters and counts come from Solr. The list of the actual items returned in the resultset comes from a SPARQL query, and the names of the types and properties (and their descriptions) come from the descriptions of the ontologies used.

This is what all this stuff is about: creating a symbiotic environment where all these wonderful systems live together to do the effective management of the structured data.

Populating the Solr Index

Now that we know how to use Solr to perform full-text searches and to aggregate and filter structured data, one question remains: how do we populate this index? As stated above, the goal is to manage all the structured data of the system using a triple store and ontologies, and then to use this triple store to populate the Solr index.

Structured Dynamics uses Virtuoso Open Source as the triple store that populates this index, for multiple reasons; chief among them are its performance and its capability to do efficient basic inference. The goal is to send the proper SPARQL queries to get the structured data that we will index in the Solr schema index described above. Once this is done, everything I talked about in this blog post becomes possible, and efficient.
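
The extraction step might look like the following Python sketch: a SPARQL `SELECT` that returns a resource’s description plus the labels of the resources it references, and a converter from the standard SPARQL JSON results format into the Solr fields described earlier. Using `rdfs:label` as the label property is an assumption, the URI is assumed to be a trusted IRI, and inferred types (which would come from Virtuoso’s inference rules) are left out.

```python
RDF_TYPE = "http://www.w3.org/1999/02/22-rdf-syntax-ns#type"
RDFS_LABEL = "http://www.w3.org/2000/01/rdf-schema#label"


def describe_query(uri: str) -> str:
    """SPARQL SELECT for one resource's triples plus labels of referenced resources."""
    return (
        "SELECT ?p ?o ?label WHERE {\n"
        f"  <{uri}> ?p ?o .\n"
        f"  OPTIONAL {{ ?o <{RDFS_LABEL}> ?label }}\n"
        "}"
    )


def bindings_to_doc(uri: str, results: dict) -> dict:
    """Turn SPARQL JSON results for describe_query(uri) into Solr fields."""
    doc = {"uri": uri, "type": [], "property": [], "text": [],
           "object_property": [], "object_label": []}
    for b in results["results"]["bindings"]:
        p, o = b["p"]["value"], b["o"]
        if o["type"] == "uri":
            if p == RDF_TYPE:
                # A class with several labels yields several rows; keep it once.
                if o["value"] not in doc["type"]:
                    doc["type"].append(o["value"])
            elif "label" in b:
                doc["object_property"].append(p)
                doc["object_label"].append(b["label"]["value"])
        elif o["type"] in ("literal", "typed-literal"):  # older endpoints may say typed-literal
            doc["property"].append(p)
            doc["text"].append(o["value"])
    return doc


print(describe_query("http://example.org/article/1"))
```

Blank-node objects are skipped here; a fuller indexer would need a policy for them.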

Syncing the Index

However, in such a setup, we have to keep one thing in mind: each time the triple store is updated (a resource is created, deleted or updated), we have to sync the Solr index according to these modifications.

What we have to do is detect any change in the triple store and reflect it in the Solr index, by re-creating the entire Solr document of the resource that changed, using the <add /> operation.

This design raises an issue with Solr: we cannot simply modify one field of a record. We have to re-index the entire description of a document even if we only want to modify a single field. This limitation of Solr is addressed by a proposed new feature, but that feature is not yet ready for prime time.
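
A minimal Python sketch of that sync loop, assuming `uri` is the schema’s unique key (so an `<add>` with an existing `uri` replaces the old document) and that a hypothetical `describe(uri)` callback rebuilds the full document from the triple store, returning `None` when the resource was deleted:

```python
import xml.etree.ElementTree as ET


def add_message(doc):
    """Full replacement document; Solr overwrites any document with the same unique key."""
    add = ET.Element("add")
    doc_el = ET.SubElement(add, "doc")
    for name, values in doc.items():
        for value in ([values] if isinstance(values, str) else values):
            ET.SubElement(doc_el, "field", name=name).text = value
    return ET.tostring(add, encoding="unicode")


def delete_message(uri):
    """Remove the document of a resource deleted from the triple store."""
    delete = ET.Element("delete")
    ET.SubElement(delete, "id").text = uri
    return ET.tostring(delete, encoding="unicode")


def sync_messages(changed_uris, describe):
    """Solr update messages for resources changed in the triple store.

    Every change re-sends the whole document, since a single field
    cannot be updated in place.
    """
    messages = []
    for uri in changed_uris:
        doc = describe(uri)
        messages.append(delete_message(uri) if doc is None else add_message(doc))
    messages.append("<commit/>")
    return messages


# Hypothetical store state after one update and one deletion
current = {"ex:article1": {"uri": "ex:article1", "text": ["Indexing RDF with Solr (2nd edition)"]}}
for message in sync_messages(["ex:article1", "ex:deleted"], current.get):
    print(message)
```

Each message would then be POSTed to Solr’s XML update handler; batching several changes before the single `<commit/>` avoids committing once per triple-store change.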

Another thing to consider is properly syncing the Solr index with any ontology changes (at the level of the class descriptions) if you are using the inference feature. For example, assume you have an ontology that says that class A is a sub-class-of class B. Then, assume the ontology is refined to say that class A is now a sub-class-of class C, which itself is a sub-class-of class B. To keep the Solr index synced with the triple store, you will have to re-index all the records of the affected types. This means that synchronization occurs not only at the level of the description of a record, but also at the level of the changes in the ontologies used to describe those records.
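
One way to find the records hit by such an ontology change is to compare each class’s superclass closure before and after the change, and re-index every record typed with a class whose closure moved. This Python sketch uses plain dictionaries for the class hierarchy and the records; the A/B/C classes mirror the example above and the record IDs are hypothetical.

```python
def ancestors(cls, subclass_of):
    """All superclasses reachable from `cls` through subClassOf links."""
    seen, stack = set(), [cls]
    while stack:
        for parent in subclass_of.get(stack.pop(), ()):
            if parent not in seen:
                seen.add(parent)
                stack.append(parent)
    return seen


def records_to_reindex(records, old_ontology, new_ontology):
    """Records typed with a class whose superclass closure changed, i.e. whose
    inferred types (and therefore Solr documents) are now stale."""
    classes = {cls for types in records.values() for cls in types}
    changed = {c for c in classes
               if ancestors(c, old_ontology) != ancestors(c, new_ontology)}
    return sorted(uri for uri, types in records.items() if changed & set(types))


OLD = {"A": ["B"]}              # A subClassOf B
NEW = {"A": ["C"], "C": ["B"]}  # A subClassOf C, C subClassOf B
RECORDS = {"r1": ["A"], "r2": ["B"], "r3": ["C"], "r4": ["D"]}
print(records_to_reindex(RECORDS, OLD, NEW))  # ['r1', 'r3']
```

Records typed only with B are untouched because B’s own superclasses did not change, even though B gained new subclasses.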

Conclusion

One of the main things to keep in mind here is that now, when we develop Web applications, we are not necessarily talking about a single software application, but about a group of software applications that compose an architecture to deliver one or more services. In any such architecture, Data is at the center.

Describing, managing, leveraging and publishing this data is at the center of any Web service. This is why it is so important to have the right flexible data model (RDF), the right flexible query language (SPARQL) and the right data management system (triple store) in place. From there, you can use the right tools to make it available on the Web to your users.

The right data management system is what should be used to feed any other specific systems that compose the architecture of a Web service. This is what we demonstrated with Solr; but it is certainly not limited to it.

Fabian

April 30, 2009 — 10:33 am

Salut Frederick,

Thanks for the post.

You talk about a solution with Solr to do ‘full-text search, aggregates and filtering’

What are the limitations of ‘full-text’ searches with SPARQL REGEX?

Fabian

Fred

April 30, 2009 — 2:56 pm

Hi Fabian!

It is a question of performance. Filters restrict the solutions of a given graph pattern. This is much slower (particularly when you have millions of triples) than a traditional full-text search index.

Also note that OpenLink enhanced their SPARQL implementation (more than a year ago) to include a new term, “bif:contains”, which does full-text search within SPARQL queries. However, as we speak, this is only supported by OL, and I needed something that could be used with any triple store.

Other RDBMS-based triple stores may do this too, but when it comes to full-text search, the performance of MySQL (for example) can’t be compared to Lucene’s.

It is for these reasons that I investigated this possible solution.

In any case, what is important to keep in mind is one thing: using triple stores to feed dedicated, specialized services. Structured data management is made easy with a triple store that understands RDF, SPARQL and SPARUL 🙂

Thanks for your interest in this stuff!

Take care,

Fred

Kingsley Idehen

April 30, 2009 — 5:16 pm

Fred,

The Virtuoso 6 Cluster instance [1] that hosts *most* of the existing data sets from the LOD Cloud [2] (plus other data sources) not only demonstrates “Full Text” search using SPARQL, it also demonstrates the use of Aggregates to facilitate Faceted Browsing over extremely large data sets (we are at 4.5 Billion triples and counting).

While the UI still remains a little quirky (but not for long), it is also important to note that we expose a RESTful service into the Faceted Browser Engine [3]. Thus, the generic layer you are developing atop RDF stores in general can also benefit from using our REST API to get the facets you want (i.e., you let the server do the work, in the case of Virtuoso, once you know that you are talking to a Virtuoso 6 based endpoint).

Links:

1. http://lod.openlinksw.com

2. http://www4.wiwiss.fu-berlin.de/bizer/pub/lod-datasets_2009-03-27.html

3. http://virtuoso.openlinksw.com/dataspace/dav/wiki/Main/VirtuosoFacetsWebService

4. http://lod.openlinksw.com/facet_doc.html

5. http://lod.openlinksw.com/void/Dataset.

Kingsley

Edoardo Marcora

August 10, 2009 — 11:56 am

Thanx for sharing your ideas!

I have one question: using your example data, how would you go about filtering articles by more than one property of their authors, using only Solr? For example, searching for all articles whose author’s name contains Bob and who lives in Canada (assuming authors have a country property).

Thanx,

Dado

Fred

August 11, 2009 — 2:34 pm

Hi Edoardo!

First, I think you might be interested in this thread on the Semantic Web Drupal group page.

About structWSF’s Solr instance: what is happening with it? Well, it is really just a full-text Solr index that leverages the structured content it ingests. This means that you can filter by type (with inference) and by properties. However, you don’t have the flexibility (at least with the current Solr index) to do this kind of search using Solr. This is really what SPARQL is for. If you want to perform this kind of search on your system (let’s say, a Drupal module you want to create that interacts with a structWSF instance), then you would probably use the SPARQL endpoint for such queries.

Otherwise, the current index schema doesn’t accommodate this kind of query to Solr. Dynamic properties would be needed, along with more complex Solr queries. The question would be: how would Solr behave with big datasets that use hundreds of different properties?

The current goal in using Solr is to enable the fastest possible full-text searches over a set of structured (RDF) datasets, while leveraging some of their structure for inference and faceting.

Hope it helps!

Thanks!

Take care,

Fred

John D

September 3, 2009 — 5:03 pm

Hi Fred,

The link to the solr schema files seems to be broken. Is there somewhere we can download this?

Thanks,

John

Fred

September 4, 2009 — 11:29 am

Hi John!

Yes, you can get it from here:

http://code.google.com/p/structwsf/source/browse/trunk/framework/solr_schema.xml

This is the latest version available right now (the trunk is always updated, so check that URL for the latest version).

Hope this helps,

Thanks!

Fred

Lau

February 22, 2010 — 11:11 am

Hi Fred,

What I am concerned about is whether this technology can provide the same user experience as a traditional search engine (Google or Yahoo, for instance). And what about full-text search on RDF containing Chinese or Japanese characters?

Thanks,

Lau

Fred

February 22, 2010 — 12:18 pm

Hi Lau!

All the full-text search capabilities of this method are provided by Solr itself. Chinese character sets are supported by Solr; the only thing we should check is whether there is a Solr “Chinese” text analyzer out there. If there is, then we shouldn’t have any issues using this architecture to support Chinese.

Does that answer your question?

Thanks!

Take care,

Fred

Thomas Francart

May 19, 2010 — 5:33 am

Hi Fred

This is really interesting. Just to let you know that the link to the Solr schema is broken, and I think it has been replaced by this one:

http://code.google.com/p/structwsf/source/browse/trunk/framework/solr_schema_v1_1.xml

What would it cost to integrate structWSF with a triple store/SPARQL endpoint other than Virtuoso? Say, Sesame for example?

All the best

Thomas

Fred

May 19, 2010 — 7:42 am

Hi Thomas!

Yes, exactly, this is the latest Solr schema used by structWSF. The cost of switching from Virtuoso to Sesame is to check whether Sesame can handle all the SPARQL queries generated by the structWSF endpoints. If it doesn’t for one of them, then a layer should be created to send different queries depending on the underlying store. Such a layer doesn’t currently exist, but it should be easy to implement. Finally, one of the goals of structWSF was to create a WS abstraction so that we can use (and switch) any underlying system without impacting the capabilities of the network. That is why we chose to use Solr for the search & browse activities; however, if (or when) a triple store becomes as effective as Solr for searching & faceting, then we will be able to switch from Solr to this new triple store (in the end, it would be much easier to maintain with a triple store than with Solr, because of some create/update limitations of Solr).

Hope it helps, and don’t hesitate to send me any question related to structWSF/Sesame (by email, on this blog, or on the mailing list).

Thanks!

Take care,

Fred

Svx

March 3, 2017 — 3:47 pm

Hi there,

Did you shut everything down? I couldn’t reach the installation guide at //openstructs.org/doc/code/structwsf/index.html.

Frederick Giasson

March 3, 2017 — 3:48 pm

Hi,

No, check out the new location: http://opensemanticframework.org

Thanks,

Fred