In the last decade, we have seen the emergence of two big families of datasets: the public and the private ones. Invaluable public datasets like Wikipedia, Wikidata, Open Corporates and others have been created and leveraged by organizations world-wide. However, as great as they are, most organization still rely on private datasets of their own curated data.

In this article, I want to demonstrate how high-value private datasets may be integrated into the Cognonto’s KBpedia knowledge base to produce a significant impact on the quality of the results of some machine learning tasks. To demonstrate this impact, I have created a demo that is supported by a “gold standard” of 511 web pages taken at random, to which we have tagged the organization that published the web page. This demo is related to the publisher analysis portion of the Cognonto demo. We will use this gold standard to calculate the performance metrics of the publisher analyzer but more precisely, we will analyze the performance of the analyzer depending on the datasets it has access to perform its predictions.

[extoc]

Cognonto Publisher’s Analyzer

The Cognonto publisher’s analyzer is a portion of the overall Cognonto demo that tries to determine the publisher of a web page from analyzing the web page’s content. There are multiple moving parts to this analyzer, but its general internal workflow works as follows:

- It crawls a given webpage URL

- It extracts the page’s content and extracts its meta-data

- It tags all of the organizations (anything that is considered an organization in KBpedia) across the extracted content using the organization entities that exist in the knowledge base

- It tries to detect unknown entities that will eventually be added to the knowledge base after curation

- It performs an in-depth analysis of the organization entities (known or unknown) that got tagged in the content of the web page, and analyzes which of these is the most likely to be the publisher of the web page.

Such a machine learning system leverages existing algorithms to calculate the likelihood that an organization is the publisher of a web page and to detect unknown organizations. These are conventional uses of these algorithms. What differentiates the Cognonto analyzer is its knowledge base. We leverage Cognonto to detect known organization entities. We use the knowledge in the KB for each of these entities to improve the analysis process. We constrain the analysis to certain types (by inference) of named entities, etc. The special sauce of this entire process is the fully integrated set of datasets that compose the Cognonto knowledge base, and the KBpedia conceptual reference structure composed of roughly ~39,000 reference concepts.

Given the central role of the knowledge base in such an analysis process, we want to have a better idea of the impact of the datasets in the performance of such a system.

For this demo, I use three public datasets already in KBpedia and that are used by the Cognonto demo: Wikipedia (via DBpedia), Freebase and USPTO. Then I add two private datasets of high quality, highly curated and domain-related information augment the listing of potential organizations. What I will do is to run the Cognonto publisher analyzer on each of these 511 web pages. Then I will check which one got properly identified given the gold standard and finally I will calculate different performance metrics to see the impact of including or excluding a certain dataset.

Gold Standard

The gold standard is composed of 511 randomly selected web pages that got crawled and cached. When we run the tests below, the cached version of the HTML pages is used to make sure that we get the same HTML for each page for each test. When the pages are crawled, we execute any possible JavaScript code that the pages may contain before caching the HTML code of the page. That way, if some information in the page was injected by some JavaScript code, then that additional information will be cached as well.

The gold standard is really simple. For each of the URLs we have in the standard, we determine the publishing organization manually. Then once the organization is determined, we search in each dataset to see if the entity is already existing. If it is, then we add the URI (unique identifier) of the entity in the knowledge base into the gold standard. It is this URI reference that is used the determine if the publisher analyzer properly detects the actual publisher of the web page.

We also add a set of 10 web pages manually for which we are sure that no publisher can be determined for the web page. These are the 10 True Negative (see below) instances of the gold standard.

The gold standard also includes the identifier of possible unknown entities that are the publishers of the web pages. These are used to calculate the metrics when considering the unknown entities detected by the system.

Metrics

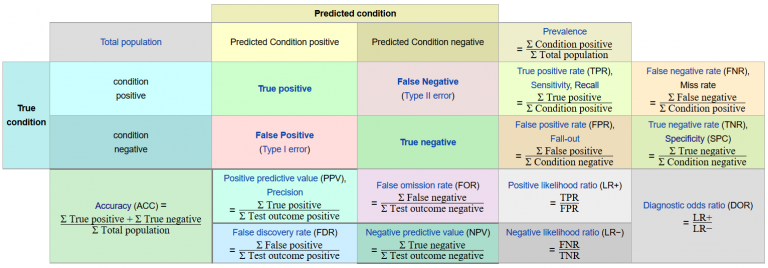

The goal of this analysis is to determine how good the analyzer is to perform the task (detecting the organization that published a web page on the Web). What we have to do is to use a set of metrics that will help us understanding the performance of the system. The metrics calculation is based on the confusion matrix.

The True Positive, False Positive, True Negative and False Negative (see Type I and type II errors for definitions) should be interpreted that way in the context of a named entities recognition task:

True Positive (TP): test identifies the same entity as in the gold standardFalse Positive (FP): test identifies a different entity than what is in the gold standardTrue Negative (TN): test identifies no entity; gold standard has no entityFalse Negative (FN): test identifies no entity, but gold standard has one

The we have a series of metrics that can be used to measure the performance of of the system:

- Precision: is the proportion of properly predicted publishers amongst all of the publishers that exists in the gold standard

(TP / TP + FP) - Recall: is the proportion of properly predicted publishers amongst all the predictions that have been made (good and bad)

(TP / TP + FN) - Accuracy: it is the proportion of correctly classified test instances; the publishers that could be identified by the system, and the ones that couldn’t (the web pages for which no publisher could be identified).

((TP + TN) / (TP + TN + FP + FN)) - f1: the test’s equally weighted combination of precision and recall

- f2: the test’s weighted combination of precision and recall, with a preference for recall

- f0.5: the test’s weighted combination of precision and recall, with a preference for precision.

The F-score test the accuracy of the general prediction system. The F-score is a measure that combines precision and recall is the harmonic mean of precision and recall. The f2 measure weighs recall higher than precision (by placing more emphasis on false negatives), and the f0.5 measure weighs recall lower than precision (by attenuating the influence of false negatives). Cognonto includes all three F-measures in its standard reports to give a general overview of what happens when we put an emphasis on precision or recall.

In general, I think that the metric that gives the best overall performance of this named entities recognition system is the accuracy one. I emphasize those test results below.

Running The Tests

The goal with these tests is to run the gold standard calculation procedure with different datasets that exist in the Cognonto knowledge base to see the impact of including/excluding these datasets on the gold standard metrics.

Baseline: No Dataset

The first step is to create the starting basis that includes no dataset. Then we will add different datasets, and try different combinations, when computing against the gold standard such that we know the impact of each on the metrics.

(table (generate-stats :js :execute :datasets []))

True positives: 2 False positives: 5 True negatives: 19 False negatives: 485 +--------------+--------------+ | key | value | +--------------+--------------+ | :precision | 0.2857143 | | :recall | 0.0041067763 | | :accuracy | 0.04109589 | | :f1 | 0.008097166 | | :f2 | 0.0051150895 | | :f0.5 | 0.019417476 | +--------------+--------------+

One Dataset Only

Now, let’s see the impact of each of the datasets that exist in the knowledge base we created to perform these tests. This will gives us an indicator of the inherent impact of each dataset on the prediction task.

Wikipedia (via DBpedia) Only

Let’s test the impact of adding a single general purpose dataset, the publicly available: Wikipedia (via DBpedia):

(table (generate-stats :js :execute :datasets ["http://dbpedia.org/resource/"]))

True positives: 121 False positives: 57 True negatives: 19 False negatives: 314 +--------------+------------+ | key | value | +--------------+------------+ | :precision | 0.6797753 | | :recall | 0.27816093 | | :accuracy | 0.2739726 | | :f1 | 0.39477977 | | :f2 | 0.31543276 | | :f0.5 | 0.52746296 | +--------------+------------+

Freebase Only

Now, let’s test the impact of adding another single general purpose dataset, this one the publicly available: Freebase:

(table (generate-stats :js :execute :datasets ["http://rdf.freebase.com/ns/"]))

True positives: 11 False positives: 14 True negatives: 19 False negatives: 467 +--------------+-------------+ | key | value | +--------------+-------------+ | :precision | 0.44 | | :recall | 0.023012552 | | :accuracy | 0.058708414 | | :f1 | 0.043737575 | | :f2 | 0.028394425 | | :f0.5 | 0.09515571 | +--------------+-------------+

USPTO Only

Now, let’s test the impact of adding still a different publicly available specialized dataset: USPTO:

(table (generate-stats :js :execute :datasets ["http://www.uspto.gov"]))

True positives: 6 False positives: 13 True negatives: 19 False negatives: 473 +--------------+-------------+ | key | value | +--------------+-------------+ | :precision | 0.31578946 | | :recall | 0.012526096 | | :accuracy | 0.04892368 | | :f1 | 0.024096385 | | :f2 | 0.015503876 | | :f0.5 | 0.054054055 | +--------------+-------------+

Private Dataset #1

Now, let’s test the first private dataset:

(table (generate-stats :js :execute :datasets ["http://cognonto.com/datasets/private/1/"]))

True positives: 231 False positives: 109 True negatives: 19 False negatives: 152 +--------------+------------+ | key | value | +--------------+------------+ | :precision | 0.67941177 | | :recall | 0.60313314 | | :accuracy | 0.4892368 | | :f1 | 0.6390042 | | :f2 | 0.61698717 | | :f0.5 | 0.6626506 | +--------------+------------+

Private Dataset #2

And, then, the second private dataset:

(table (generate-stats :js :execute :datasets ["http://cognonto.com/datasets/private/2/"]))

True positives: 24 False positives: 21 True negatives: 19 False negatives: 447 +--------------+-------------+ | key | value | +--------------+-------------+ | :precision | 0.53333336 | | :recall | 0.050955415 | | :accuracy | 0.08414873 | | :f1 | 0.093023255 | | :f2 | 0.0622084 | | :f0.5 | 0.1843318 | +--------------+-------------+

Combined Datasets – Public Only

A more realistic analysis is to use a combination of datasets. Let’s see what happens to the performance metrics if we start combining public datasets.

Wikipedia + Freebase

First, let’s start by combining Wikipedia and Freebase.

(table (generate-stats :js :execute :datasets ["http://dbpedia.org/resource/" "http://rdf.freebase.com/ns/"]))

True positives: 126 False positives: 60 True negatives: 19 False negatives: 306 +--------------+------------+ | key | value | +--------------+------------+ | :precision | 0.67741936 | | :recall | 0.29166666 | | :accuracy | 0.28375733 | | :f1 | 0.407767 | | :f2 | 0.3291536 | | :f0.5 | 0.53571427 | +--------------+------------+

Adding the Freebase dataset to the DBpedia one had the following effects on the different metrics:

| metric | Impact in % |

|---|---|

| precision | -0.03% |

| recall | +4.85% |

| accuracy | +3.57% |

| f1 | +3.29% |

| f2 | +4.34% |

| f0.5 | +1.57% |

As we can see, the impact of adding Freebase to the knowledge base is positive even if not ground breaking considering the size of the dataset.

Wikipedia + USPTO

Let’s switch Freebase for the other specialized public dataset, USPTO.

(table (generate-stats :js :execute :datasets ["http://dbpedia.org/resource/" "http://www.uspto.gov"]))

True positives: 122 False positives: 59 True negatives: 19 False negatives: 311 +--------------+------------+ | key | value | +--------------+------------+ | :precision | 0.67403316 | | :recall | 0.2817552 | | :accuracy | 0.27592954 | | :f1 | 0.39739415 | | :f2 | 0.31887087 | | :f0.5 | 0.52722555 | +--------------+------------+

Adding the USPTO dataset to the DBpedia instead of Freebase had the following effects on the different metrics:

| metric | Impact in % |

|---|---|

| precision | -0.83% |

| recall | +1.29% |

| accuracy | +0.73% |

| f1 | +0.65% |

| f2 | +1.07% |

| f0.5 | +0.03% |

As we may have expected the gains are smaller than Freebase. Maybe partly because it is smaller and more specialized. Because it is more specialized (enterprises that have patents registered in US), maybe the gold standard doesn’t represent well the organizations belonging to this dataset. But in any case, these are still gains.

Wikipedia + Freebase + USPTO

Let’s continue and now include all three datasets.

(table (generate-stats :js :execute :datasets ["http://dbpedia.org/resource/" "http://www.uspto.gov" "http://rdf.freebase.com/ns/"]))

True positives: 127 False positives: 62 True negatives: 19 False negatives: 303 +--------------+------------+ | key | value | +--------------+------------+ | :precision | 0.6719577 | | :recall | 0.29534882 | | :accuracy | 0.2857143 | | :f1 | 0.41033927 | | :f2 | 0.3326349 | | :f0.5 | 0.53541315 | +--------------+------------+

Now let’s see the impact of adding both Freebase and USPTO to the Wikipedia dataset:

| metric | Impact in % |

|---|---|

| precision | +1.14% |

| recall | +6.18% |

| accuracy | +4.30% |

| f1 | +3.95% |

| f2 | +5.45% |

| f0.5 | +1.51% |

Now let’s see the impact of using highly curated, domain related, private datasets.

Combined Datasets – Public enhanced with private datasets

The next step is to add the private datasets of highly curated data that are specific to the domain of identifying web page publisher organizations. As the baseline, we will use the three public datasets: Wikipedia, Freebase and USPTO and then we will add the private datasets.

Wikipedia + Freebase + USPTO + PD #1

(table (generate-stats :js :execute :datasets ["http://dbpedia.org/resource/" "http://www.uspto.gov" "http://rdf.freebase.com/ns/" "http://cognonto.com/datasets/private/1/"]))

True positives: 279 False positives: 102 True negatives: 19 False negatives: 111 +--------------+------------+ | key | value | +--------------+------------+ | :precision | 0.7322835 | | :recall | 0.7153846 | | :accuracy | 0.58317024 | | :f1 | 0.7237354 | | :f2 | 0.7187017 | | :f0.5 | 0.7288401 | +--------------+------------+

Now, let’s see the impact of adding the private dataset #1 along with Wikipedia, Freebase and USPTO:

| metric | Impact in % |

|---|---|

| precision | +8.97% |

| recall | +142.22% |

| accuracy | +104.09% |

| f1 | +76.38% |

| f2 | +116.08% |

| f0.5 | +36.12% |

Adding the highly curated and domain specific private dataset #1 had a dramatic impact on all the metrics of the combined public datasets. Now let’s see what is the impact of the public datasets on the private dataset #1 metrics when it is used alone:

| metric | Impact in % |

|---|---|

| precision | +7.77% |

| recall | +18.60% |

| accuracy | +19.19% |

| f1 | +13.25% |

| f2 | +16.50% |

| f0.5 | +9.99% |

As we can see, the public datasets does significantly increase the performance of the highly curated and domain specific private dataset #1.

Wikipedia + Freebase + USPTO + PD #2

(table (generate-stats :js :execute :datasets ["http://dbpedia.org/resource/" "http://www.uspto.gov" "http://rdf.freebase.com/ns/" "http://cognonto.com/datasets/private/2/"]))

True positives: 138 False positives: 69 True negatives: 19 False negatives: 285 +--------------+------------+ | key | value | +--------------+------------+ | :precision | 0.6666667 | | :recall | 0.32624114 | | :accuracy | 0.3072407 | | :f1 | 0.43809524 | | :f2 | 0.36334914 | | :f0.5 | 0.55155873 | +--------------+------------+

Not all of the private datasets have equivalent impact. Let’s see the impact of adding the private dataset #2 instead of the #1:

| metric | Impact in % |

|---|---|

| precision | -0.78% |

| recall | +10.46% |

| accuracy | +7.52% |

| f1 | +6.75% |

| f2 | +9.23% |

| f0.5 | +3.00% |

Wikipedia + Freebase + USPTO + PD #1 + PD #2

Now let’s see what happens when we use all the public and private datasets.

(table (generate-stats :js :execute :datasets ["http://dbpedia.org/resource/" "http://www.uspto.gov" "http://rdf.freebase.com/ns/" "http://cognonto.com/datasets/private/1/" "http://cognonto.com/datasets/private/2/"]))

True positives: 285 False positives: 102 True negatives: 19 False negatives: 105 +--------------+------------+ | key | value | +--------------+------------+ | :precision | 0.7364341 | | :recall | 0.7307692 | | :accuracy | 0.59491193 | | :f1 | 0.7335907 | | :f2 | 0.7318952 | | :f0.5 | 0.7352941 | +--------------+------------+

Let’s see the impact of adding the private datasets #1 and #2 to the public datasets:

| metric | Impact in % |

|---|---|

| precision | +9.60% |

| recall | +147.44% |

| accuracy | +108.22% |

| f1 | +78.77% |

| f2 | +120.02% |

| f0.5 | +37.31% |

Adding Unknown Entities Tagger

There is one last feature with the Cognonto publisher analyzer: it is possible for it to identify unknown entities from the web page. (An “unknown entity” is identified as a likely organization entity, but which does not already exist in the KB.) Sometimes, it is the unknown entity that is the publisher of the web page.

(table (generate-stats :js :execute :datasets :all))

True positives: 345 False positives: 104 True negatives: 19 False negatives: 43 +--------------+------------+ | key | value | +--------------+------------+ | :precision | 0.76837415 | | :recall | 0.88917524 | | :accuracy | 0.7123288 | | :f1 | 0.82437277 | | :f2 | 0.86206895 | | :f0.5 | 0.78983516 | +--------------+------------+

As we can see, the overall accuracy improved by 19.73% when considering the unknown entities compared to the public and private datasets.

| metric | Impact in % |

|---|---|

| precision | +4.33% |

| recall | +21.67% |

| accuracy | +19.73% |

| f1 | +12.37% |

| f2 | +17.79% |

| f0.5 | +7.42% |

Analysis

When we first tested the system with single datasets, some of them were scoring better than others for most of the metrics. However, does that mean that we could only use them and be done with it? No, what this analysis is telling us is that some datasets score better for this set of web pages. They cover more entities found in those web pages. However, even if a dataset was scoring lower it does not mean it is useless. In fact, that worse dataset may in fact cover one prediction area not covered in a better one, which means that by combining the two, we could improve the general prediction power of the system. This is what we can see by adding the private datasets to the public ones.

Even if the highly curated and domain-specific private datasets score much better than the more general public datasets, the system still greatly benefits from the contribution of the public dataset by significantly improving the accuracy of the system. We got a gain 19.19% in accuracy by adding the public datasets to the better scoring private dataset #1. Nearly 20% of improvement in such a predictive system is highly significant.

Another thing that this series of tests tends to demonstrate is that the more knowledge we have, the more we can improve the accuracy of the system. Adding datasets doesn’t appear to lower the overall performance of the system (even if I am sure that some could), but generally the more the better (but more doesn’t necessarely produce significant accuracy increases).

Finally, adding a feature to the system can also greatly improve its overall accuracy. In this case, we added the feature of detecting unknown entities (organization entities that are not existing in the datasets that compose the knowledge base), which improved the overall accuracy by another 19.73%. How is that possible? To understand this we have to consider the domain: random web pages that exist on the Web. A web page can be published by anybody and any organization. This means that the long tail of web page publisher is probably pretty long. Considering this fact, it is normal that existing knowledge bases may not contain all of the obscure organizations that publish web pages. It is most likely why having a system that can detect and predict unknown entities as the publishers of web page will have a significant impact on the overall accuracy of the system. The flagging of such “unknown” entities tells us where to focus efforts to add to the known database of existing publishers.

Conclusion

As we saw in this analysis, adding high quality and domain-specific private datasets can greatly improve the accuracy of such a prediction system. Some datasets may have a more significan impact than others, but overall, each dataset contribute to the overall improvement of the predictions.

flovera1

December 27, 2016 — 7:05 am

What programming language is this ? it looks like jquery, but you dont say…

flovera1

December 27, 2016 — 7:27 am

and this is also not your work, you stole it from here:

http://cognonto.com/use-cases/benefits-from-extending-kbpedia-with-private-datasets/

Does “All Rights Reserved” words mean sth for you?

Frederick Giasson

December 27, 2016 — 9:08 am

Hi @flovera1,

The programming language that I am using in these use cases is called Clojure. It is a Lisp running on the JVM.

Fortunately it is my work. I am the CTO of Cognonto. All the use cases have been first published on my blog and then re-edited for the Cognonto.com website. Since we are a really small outfit, Mike and I are both publishing these usecases on multiple platform to reach different audiences (our blogs are more than 16 years old so reaching out this audience is an important part of our Cognonto maketing).

In any case, thanks for taking this seriously and to take action when you see such apparent copying of copyrighted work.

Take care,

Fred

flovera1

December 29, 2016 — 12:21 am

hey man thx for everything

its a good post