For a few months now at Structured Dynamics we have been developing what we call “Semantic Components”. A semantic component is an ontology-driven component, or widget, based on Flex. Such a component takes record descriptions, ontologies and target attributes/types as inputs, and outputs some (possibly interactive) visualizations of the records. Depending on the logic described in the input schema and the input record descriptions, the semantic component may behave differently to optimize its own layout and behavior for users.

The purpose of these semantic components is to provide a framework of adaptive user interfaces that can be plugged directly into structWSF Web service endpoint instances. The goal is to plug some data, schema and target attributes into these components, and then to let them change their behavior and appearance depending on the input data and schema.

The picture is simple. We tell the components: here is a set of records serialized in structXML, here is a set of schema serialized in irXML, and here are the target attributes and types I want the components to display. Then, different components get selected and behave differently depending on how the schema have been defined, and how the records have been described.

Ultimately, development time is saved because developers don’t have to hard-code the appearance and behavior of the user interface for every combination of data and schema it may receive at a given point in time: the logic is built into the components.

Overall Workflow

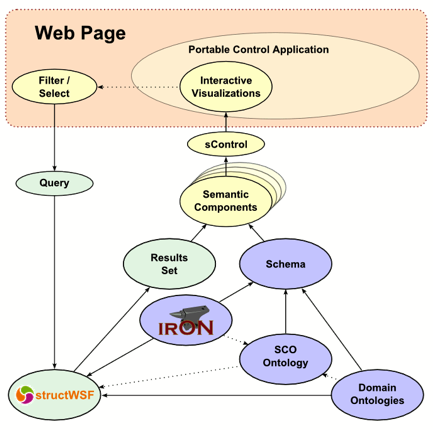

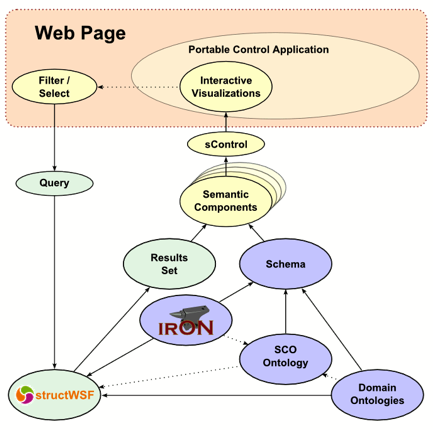

These various semantic components get embedded in a layout canvas. Interacting with the components generates new queries (most often SPARQL queries) to the various structWSF Web service endpoints. These requests return a structured results set, which includes various types and attributes.
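To make the query step a little more concrete, here is a minimal sketch of how an interaction might be turned into a SPARQL query. It is written in Python for readability rather than in Flex, and everything in it is an assumption for illustration: the function name `build_sparql_query`, the `example.org` URIs, and the shape of the query are hypothetical and are not taken from structWSF or the semantic components library.

```python
def build_sparql_query(record_uri, attribute_uris):
    """Build a SPARQL SELECT asking for the target attributes of one record.

    Illustrative only: the URIs are inserted as-is, so a real implementation
    would need to validate or escape them first.
    """
    if not attribute_uris:
        raise ValueError("at least one target attribute is required")

    # One variable per requested attribute: ?v0, ?v1, ...
    variables = ["?v%d" % i for i in range(len(attribute_uris))]

    # OPTIONAL keeps the query from failing to match when a record
    # simply does not describe one of the requested attributes.
    patterns = [
        "  OPTIONAL { <%s> <%s> %s . }" % (record_uri, attr, var)
        for attr, var in zip(attribute_uris, variables)
    ]

    return "SELECT %s\nWHERE {\n%s\n}" % (" ".join(variables), "\n".join(patterns))


# Hypothetical usage: the user clicked a record and two attributes are targeted.
query = build_sparql_query(
    "http://example.org/record/1",
    ["http://example.org/attr/population", "http://example.org/attr/label"],
)
print(query)
```

The string returned here would then be sent to a SPARQL endpoint; how that request is issued, and in which format the results set comes back, is outside the scope of this sketch.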

An internal ontology that embodies the desired behavior and display options (SCO, the Semantic Component Ontology) is matched with these types and attributes to generate the formal instructions to the semantic components. These instructions are presented via the sControl component, which determines which widgets (individual components) need to be invoked and displayed on the layout canvas.
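The matching step can be pictured with a small sketch. The Python below is only an illustration of the idea, not the actual SCO or sControl implementation: the `DISPLAY_RULES` table stands in for the ontology, and the attribute names, widget names, `Instruction` class and `plan_widgets` function are all hypothetical.

```python
from dataclasses import dataclass

# Hypothetical stand-in for the ontology: for each attribute, the widgets
# able to display it, in order of preference. The real SCO is an ontology,
# not a dictionary; these names are illustrative only.
DISPLAY_RULES = {
    "population": ["linear_chart", "text"],
    "geo_location": ["map"],
    "label": ["text"],
}


@dataclass
class Instruction:
    """One instruction for the layout canvas: a widget and what it displays."""
    widget: str
    attributes: list


def plan_widgets(result_attributes, rules=DISPLAY_RULES):
    """Group the attributes found in a results set by their preferred widget."""
    grouped = {}
    for attr in result_attributes:
        widgets = rules.get(attr)
        if not widgets:
            continue  # no display rule for this attribute: nothing to invoke
        grouped.setdefault(widgets[0], []).append(attr)
    return [Instruction(widget, attrs) for widget, attrs in grouped.items()]


# Hypothetical results set containing one attribute without a display rule.
for instruction in plan_widgets(["population", "label", "internal_id"]):
    print(instruction.widget, instruction.attributes)
```

In this sketch only the widgets that have something to display are returned, which mirrors the idea that the results set and the ontology together decide what ends up on the canvas, rather than code written for each case.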

New interactions with the resulting displays and components cause the iteration path to be generated anew, again starting a new cycle of queries and results sets.

As these pathways and associated display components get created, they can be named and made persistent for later re-use or within dashboard invocations.

A Shift in Design Perspective

There is a bit of a user interface design shift here. User interfaces have always been developed to present information (data) to users, and to let them interact with it. Whoever develops such an interface has to make thousands of decisions so that it can cope with the different ways data may be described. Our semantic component framework tries to take some of this burden off the designer's shoulders by making these decisions itself. Such decisions are in the range of:

- The text control X displays the value of an attribute Y. If the attribute Y doesn’t exist in the description of a record A, then we have to remove it from the user interface.

- Note: if the text control X gets removed from the interface, there is a good chance that we may have to change other controls as well so that the user interface remains usable to the users.

- If the text control X gets removed, then there is no reason why its associated icon image should remain in the user interface, so let’s provide accommodations to remove it as well.

- Some attributes describing the records have values that are comparable with related attributes, so let’s compare these values in a linear chart.

- Some records may be useful baselines for comparison with other records, so let’s allow that to be externally specified, too.

- All these decisions are true for record A, but not for record B since we have a value to display for the text control X, so let’s behave differently by displaying the text control X and its associated icon image.

- Etc.
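A few of the decisions listed above can be sketched in code. The Python below is a simplified illustration under stated assumptions, not the framework's logic: the `visible_controls` function, the control names, and the sample records are hypothetical, and "missing" is taken to mean that the attribute is absent or empty.

```python
def visible_controls(record, bindings, companions):
    """Return the names of the controls to show for one record.

    record:     attribute name -> value, for a single record
    bindings:   control name -> attribute it displays
    companions: control name -> controls that only make sense alongside it
                (for example, an icon next to a text control)
    """
    shown = []
    for control, attribute in bindings.items():
        value = record.get(attribute)
        if value is None or value == "":
            # The attribute is not described for this record, so the control
            # and everything that depends on it are left out of the interface.
            continue
        shown.append(control)
        shown.extend(companions.get(control, []))
    return shown


# Hypothetical bindings: text control X displays attribute Y and has an icon.
bindings = {"title_text": "label", "text_control_x": "attribute_y"}
companions = {"text_control_x": ["icon_x"]}

record_a = {"label": "Record A"}                          # no attribute Y
record_b = {"label": "Record B", "attribute_y": "value"}  # attribute Y present

print(visible_controls(record_a, bindings, companions))
print(visible_controls(record_b, bindings, companions))
```

For record A only the title control remains, while for record B the text control X and its icon are both displayed. The same description of the interface therefore produces two different layouts, driven only by how each record is described.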

All of these kinds of decisions are now made by the semantic components within our new framework depending on how the input records are described and what ontologies (schema) drive the system.

Thus, the designer can now put more time and effort into questions of general layout and behavior, themes and styles for her applications, without caring much about how to display information for specific record descriptions.

Perhaps most significant is that the behavior and presentation of information can now be described within these records and schema, an activity that users and knowledge workers can do directly, thus bypassing the need for IT and development. A new balance gets established: developers focus on creating generic tools (widgets or components); consumers of data (users and knowledge workers) determine how they want to display and compare their information.

Unbelievably Fast Implementation

While this shift may appear on its face to require some big new framework, the fact is we have been able to accomplish it with simple approaches leading to simple outcomes. Structured Dynamics has been able to put in place a complete Web portal of integrated data that publishes all its data in several serialization languages, with many utilities by which users can interact with the data, slice and dice it, visualize it, and filter and manipulate it … and all of this within two weeks of effort for one developer!

One good example of this is the Citizen Dan demo, composed of Census data and stories related to the Iowa City Metropolitan Area, which Mike presented at SemTech 2010 (some screenshots are also available).

Oh, and did I mention? This system handles text, images, tags, maps, dashboards, numeric data and any kind of structure you can throw at it. And all with the same set of generic components (to which we and others are adding).

More Information

Here is some more information about the semantic component framework and its related pieces:

This is an alpha version of the library. We also welcome any contributors to the project! We hope you like what you see and that you will be able to leverage it the way we did, so that you and your team can save as much time as we did!

Structured Dynamics just released the Citizen DAN demo. This is the sum of nearly two years of effort in developing different pieces of technology such as structWSF, conStruct, irON and Semantic Components. Citizen DAN is the first OSF (Open Semantic Framework) instance.

Today we released a simple structWSF nodes statistics report. It aggregates different statistics from all known (and accessible) structWSF nodes on the Web. It is still in its early stages, but the aggregated statistics so far are quite interesting.
