
Pinging and receiving lists of newly created and updated RDF resources has never been easier! I am pleased to announce the release of the latest version of Ping the Semantic Web.

This brand new system gives you:

- Validated RDF resources

- Simplified pings list export system

- Faster pinging infrastructure

- Brand new user interface

- New statistics

1. Validated RDF resources

In version 2.0, PTSW performed only a pseudo-validation of RDF files. Version 3.0 fully validates RDF documents, which means that every ping the service exports points to a valid RDF document.

This is a major upgrade to the system, since all agents requesting pings from PTSW now know that each of them is a valid RDF document. As a result, they save time and bandwidth because they no longer try to process bad RDF documents.

2. Simplified pings list export system

All ping consumers now need to register with the PTSW web service. This simple registration makes consuming pings from PTSW much easier. These are the steps to follow to get pings from the server:

- The user has to register an account on pingthesemanticweb.com

- He has to register the IP address of the server that will download the XML file listing all the latest pings received by the system

- Additionally, he has to set up his ping retrieval preferences in the user account section.

- The registered web server has to request the XML file at: http://pingthesemanticweb.com/export/

What improved is the way applications get pings: a web server now only has to request the XML file, and PTSW takes care of creating it according to the user’s preferences.

Finally, PTSW records the time of each user’s latest request. The next time the user’s server requests this document, it receives an XML file with all the pings PTSW has received since that last request.

This is a major improvement: if the user’s web server is down for 2 days for some reason, it won’t lose any pings, since PTSW will send it all the pings the service received during those 2 days.
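The export behaviour described above (registered IP address, user preferences, and "everything since your last request") can be modelled in a few lines. This is a minimal sketch, not PTSW’s implementation: the `Ping`, `Consumer` and `ExportService` names are hypothetical, a single `kind` field stands in for the user’s retrieval preferences (the real preference options are not described here), consumers are assumed to be identified by their registered IP address, and the function returns plain URLs instead of the real XML export format.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional


@dataclass
class Ping:
    url: str            # URL of the pinged RDF document
    received: datetime  # when the service received the ping
    kind: str           # hypothetical stand-in for what preferences filter on


@dataclass
class Consumer:
    wanted_kinds: set[str]                   # hypothetical retrieval preferences
    last_request: Optional[datetime] = None  # time of the previous export request


class ExportService:
    """Hypothetical model: consumers are looked up by their registered IP address."""

    def __init__(self) -> None:
        self.pings: list[Ping] = []
        self.consumers: dict[str, Consumer] = {}

    def export(self, client_ip: str, now: datetime) -> list[str]:
        consumer = self.consumers.get(client_ip)
        if consumer is None:
            raise PermissionError(f"{client_ip} is not a registered IP address")
        since = consumer.last_request
        # Every ping received after the previous request (or every ping, on the
        # first request) up to now, filtered by the user's preferences.
        urls = [
            p.url
            for p in self.pings
            if (since is None or p.received > since)
            and p.received <= now
            and p.kind in consumer.wanted_kinds
        ]
        consumer.last_request = now  # remembered for the next request
        return urls


t0 = datetime(2007, 1, 1)
svc = ExportService()
svc.consumers["192.0.2.10"] = Consumer(wanted_kinds={"sioc"})
svc.pings.append(Ping("http://example.org/a.rdf", t0 + timedelta(hours=1), "sioc"))
svc.pings.append(Ping("http://example.org/b.rdf", t0 + timedelta(hours=2), "foaf"))
print(svc.export("192.0.2.10", t0 + timedelta(hours=3)))
# ['http://example.org/a.rdf']

# The consumer's server is down for two days; no ping is lost.
svc.pings.append(Ping("http://example.org/c.rdf", t0 + timedelta(days=1), "sioc"))
svc.pings.append(Ping("http://example.org/d.rdf", t0 + timedelta(days=2), "sioc"))
print(svc.export("192.0.2.10", t0 + timedelta(days=2, hours=3)))
# ['http://example.org/c.rdf', 'http://example.org/d.rdf']
```

Because each request covers the half-open window between the previous request time and the current one, consecutive exports neither skip nor repeat a ping, however long the gap between them.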

Note: all current Ping the Semantic Web ping consumers have to create an account and change their application accordingly.

3. Faster pinging infrastructure

The web service is now hosted on a much bigger server, and we switched from MySQL to Virtuoso. These changes result in a more powerful service that I estimate can handle up to 5 million pings per day (in the best of all worlds, with fast remote web servers delivering the RDF content). In any case, this should be enough for next year’s expansion.
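For scale, if that load were spread evenly over the day, 5 million pings per day corresponds to an average of roughly 58 pings per second (peaks during bursts would be higher):

$$
\frac{5\,000\,000\ \text{pings/day}}{24 \times 3\,600\ \text{s/day}} = \frac{5\,000\,000}{86\,400}\ \text{pings/s} \approx 57.9\ \text{pings/s}
$$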

4. Brand new user interface

We also spent some time refreshing the user interface of the web service. The new interface will make it easier to integrate new features and sections into the service’s web site while keeping it appealing to users.

5. New statistics

New statistics on the state of the service are now available.

- All stats about Namespaces. This is the list of namespaces used to describe entities in RDF. For example, if an RDF document has an entity typed as sioc:Post, then the SIOC namespace is added to the list and its counter is incremented by one. There are currently 347 namespaces known to PTSW.

- All stats about Types. This is the number of typed entities defined in each RDF document known to PTSW. For example, if an RDF document defines four foaf:Person entities, then four is added to the counter. If the same entity (URI) is defined in two different RDF documents, its type is counted twice. So take these numbers as a good approximation, not as an absolute truth. There are currently 2773 types known to PTSW.
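The two counting rules above can be illustrated with a short sketch. It assumes each RDF document has already been reduced to a list of `(subject URI, rdf:type URI)` pairs, and it finds a type’s namespace with a common heuristic (cut the URI after its last `#` or `/`). The `namespace_of` and `tally` helpers are hypothetical, not PTSW code, and incrementing the namespace counter once per typed entity is an assumption. Typed entities are deduplicated within a document but not across documents, which reproduces the double counting mentioned above.

```python
from collections import Counter
from typing import Iterable, List, Tuple

Document = List[Tuple[str, str]]  # (subject URI, rdf:type URI) pairs


def namespace_of(type_uri: str) -> str:
    """Heuristic: the namespace is the URI up to and including its last '#' or '/'."""
    cut = max(type_uri.rfind("#"), type_uri.rfind("/"))
    return type_uri[: cut + 1]


def tally(documents: Iterable[Document]) -> Tuple[Counter, Counter]:
    types: Counter = Counter()
    namespaces: Counter = Counter()
    for doc in documents:
        # Deduplicate within one document only: the same URI typed in two
        # documents is counted once per document.
        for _subject, type_uri in set(doc):
            types[type_uri] += 1
            namespaces[namespace_of(type_uri)] += 1
    return types, namespaces


FOAF = "http://xmlns.com/foaf/0.1/"
SIOC = "http://rdfs.org/sioc/ns#"

# Four foaf:Person entities in one document, one of them repeated in another.
doc1 = [(f"http://example.org/people#p{i}", FOAF + "Person") for i in range(4)]
doc2 = [("http://example.org/people#p0", FOAF + "Person"),
        ("http://example.org/blog/1", SIOC + "Post")]

types, namespaces = tally([doc1, doc2])
print(types[FOAF + "Person"], types[SIOC + "Post"])  # 5 1
print(namespaces[FOAF], namespaces[SIOC])            # 5 1
```

The repeated `p0` entity is why foaf:Person reaches 5 rather than 4, which is exactly the approximation the Types statistic warns about.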

Some people will notice that the current numbers in the sidebar are completely different from the ones on the service’s old web site. They are right, and here is the reason: I pruned the geonames.org and talkdigger.com pings from PTSW.

In fact, when I started the web service, I added these two RDF data dumps to PTSW. At that time, initiatives such as the Linking Open Data Community didn’t exist and people didn’t know how to export their RDF data dumps, so I chose to include them in the PTSW system. Since then, methods have improved and things have changed. RDF data dumps are now available directly from these web sites, data dump repositories exist, and people no longer use PTSW for that purpose. Instead, they use the PTSW export feature to sync their services, not to get complete datasets. So I pruned all 7 000 000 of these documents from the system, leaving about 845 000 “wild” RDF documents.

The inclusion of these complete data sources is what inflated the old stats compared to today’s.

Conclusion

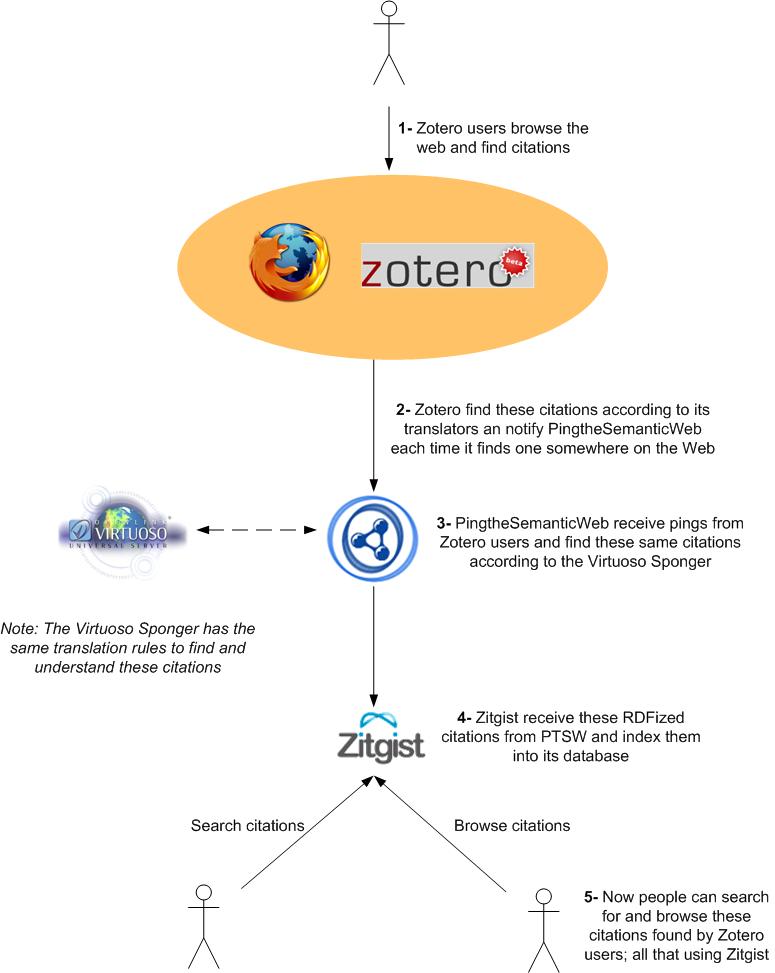

When I created Ping the Semantic Web more than one year ago, I hoped developers would use the service to easily find RDF data without crawling the entire Web. I hoped that this web service would be a vector of semantic web application development. I think it has succeeded in some ways, considering services such as Sindice and DOAPStore that emerged from the PTSW initiative.

This new version of Ping the Semantic Web tries to go further in that direction: making things even simpler for RDF data consumers and giving them a more powerful RDF discovery service.

Note: make sure to refresh the DNS cache of your desktops and servers so that you see the new, and not the old, PTSW web site.

Eight months ago we announced the dissolution of Zitgist LLC. This event led to the creation of a “sandbox” to keep all of the company’s online assets alive. Since this sandbox server was not owned by Structured Dynamics, it was becoming hard for us to update UMBEL and its online services. That is why we took the time to move the services onto our new servers.


Structured Dynamics LLC now hosts a new version of the UMBEL Web services. From the main menu of the SD Web site you can access these services under the “umbel ws” menu option (you can also bookmark the Web services site at umbel.structureddynamics.com or ws.umbel.org).

Additionally, the Ping the Semantic Web RDF pinging service is now the property of OpenLink Software Inc. OpenLink is now hosting, maintaining and developing the service.
