I am happy to announce the immediate availability of a brand new UMBEL website and a new set of eight UMBEL web services.

UMBEL (Upper Mapping and Binding Exchange Layer) is a general reference structure of 28,000 concepts, which provides a scaffolding to link and interoperate other datasets and domain vocabularies. This project is now six years old.

I recommend that you read Mike’s blog post about this new release if you want more background information about UMBEL and a better understanding of how it can help you integrate, manage, publish and reason over your data.

In this blog post, I will focus on the technical aspects of this new web site and the new set of web service endpoints.

Toward a Better Web Experience

The Web is changing fast. Techniques for developing websites are evolving quickly and constantly. People use all kinds of devices with different screen sizes to consume Web content. Websites are becoming more and more responsive thanks to clever architecture and simpler user interfaces. This is the kind of website we wanted to create for the new UMBEL website.

Clojure Web Service Endpoints at the Core

The core of the new UMBEL website is the new set of web services. As soon as you perform a search, or look at the description of a reference concept or a super type, your browser makes a series of asynchronous queries to the UMBEL web service endpoints.

The average query time is about 60 milliseconds for any of the web service endpoints. This means that a web page is fully loaded within 300 to 500 milliseconds, with most of that time spent downloading the web files (the JavaScript, CSS, HTML and image files) rather than querying the web service endpoints. Bearing in mind that the website currently runs on a small server with a single core and 1.8 GB of RAM, these are really good performance figures.

We are initially releasing 8 web service endpoints (with more to follow). They have been created to help developers quickly start using the reference structure without having to download and deploy the entire structure on their own infrastructure. The 8 web services are:

- Search concept
- Get concept
- Get super type
- Get narrower concepts
- Get broader concepts
- Get sub-classes
- Get super-classes
- Degree

All these web services calculate their results at runtime. For example, if you want to find the degree between two reference concepts, then the degree is calculated at runtime. It is the same for all the web services that perform inferencing, such as the Get narrower concepts or Get broader concepts web service endpoints.

To get these excellent performance measures, we used Clojure as the programming language and framework to develop the new web service endpoints, and we defined the UMBEL structure itself as Clojure code.

Each web service endpoint is composed of simple pure functions that perform calculations on the UMBEL graph of 28,000 nodes. The functions amount to no more than 30 lines of code per endpoint, which greatly simplifies their creation, debugging, maintenance and optimization. We then use contributed libraries such as Ring and Compojure to manage the creation of the web service endpoints, and Clucy/Lucene for the search engine.

The web services can easily be scaled horizontally since everything is self-contained in a single WAR file that can be deployed on new servers in a few clicks. The new servers can then participate in a cluster of UMBEL web service servers.

Another advantage of using this technology stack for creating the UMBEL web service endpoints is that UMBEL is not just a reference structure or a set of web service endpoints. It is also a programming API that can be used in any Clojure or Java application. The UMBEL reference structure, along with all the functions that use it, will be available as a JAR file. That way, UMBEL becomes portable. It can be used as a library in any JVM application without requiring that application to send queries to external web services, or to create complex stacks to deploy and use the UMBEL reference structure.

Bootstrap as the HTML/CSS/JavaScript Framework

The previous UMBEL website used Drupal 6. For those who used it, it was sometimes clunky, less responsive and more heavyweight. The problem was that we did not need a full CMS to develop a simple, purely informational UMBEL website.

We wanted a responsive experience for the UMBEL user. We wanted the fastest experience possible, and we wanted it on any kind of device: desktop computers, tablets, mobile phones, etc.

This is why we chose to develop the new UMBEL website using Twitter’s Bootstrap HTML, CSS and JavaScript framework. This is a framework that anybody can use to quickly create simple, beautiful and modern websites. It uses a grid system to create responsive user interfaces on any kind of device (screen size). That way, UMBEL users have the same kind of experience whether they are using a normal desktop screen, a tablet or their mobile phone.

This choice enabled us to create a simple, modern, nice looking and responsive website for UMBEL.

Introduction to the UMBEL Web Services

Now let’s take the time to introduce each of the UMBEL web service endpoints. The first thing to know is that the UMBEL web service endpoints are free to use, have no usage limits and are not throttled.

Search Concept Web Service

The Search Web service is used to find UMBEL reference concepts that match a search string. This is the primary tool for finding available concepts in the reference structure. It supports the Lucene query syntax and search queries can be constrained on different fields like the preferred label, alternative labels, descriptions and URI.
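As a rough illustration of field-constrained Lucene queries, the sketch below builds query strings of the form `field:"phrase"` and combines them with `OR`. It is written in Python for brevity, not the Clojure used by the service. The field names `pref_label` and `description` are hypothetical placeholders, not the names the endpoint actually exposes, and the helper functions are illustrative only.

```python
def quote_phrase(text):
    """Wrap text in double quotes, escaping backslashes and quotes for Lucene."""
    escaped = text.replace("\\", "\\\\").replace('"', '\\"')
    return f'"{escaped}"'


def field_query(field, text):
    """Constrain a phrase to a single field, e.g. pref_label:"solar system"."""
    return f"{field}:{quote_phrase(text)}"


def any_of(*clauses):
    """Combine clauses so that a match on any one of them is enough."""
    return " OR ".join(f"({clause})" for clause in clauses)


# Hypothetical field names, used here only to show the query shape.
query = any_of(
    field_query("pref_label", "solar system"),
    field_query("description", "solar system"),
)
print(query)
```

Quoting the user input as a phrase keeps characters such as `:` or `-` from being read as query operators.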

Get Concept Web Service

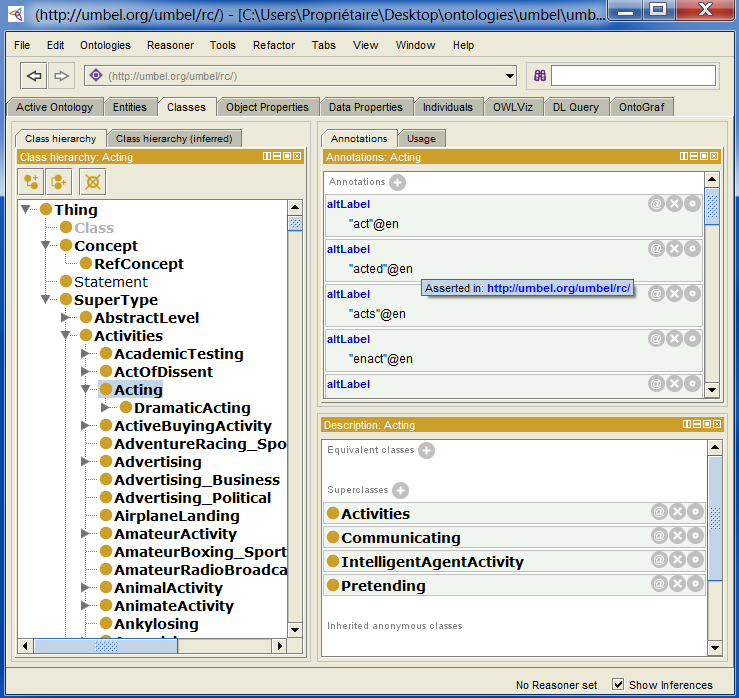

The Get Concept Web service is used to get the full description of a UMBEL Reference Concept. By querying this Web service endpoint, you will get the preferred label, all the alternative labels (namely, the items in the semset), the sub/super classes of the concept, the broader/narrower concepts and the description of that concept.

This is the Web service endpoint that should be used to get the direct relationships with any other reference concept.

Reference concept descriptions are available as N-Triples, RDF+XML, structJSON or Clojure code.

Get Super Type Web Service

The Get Super Type Web service is used to get the full description of a UMBEL Super Type. By querying this Web service endpoint, you will get the preferred label, all of the alternative labels, the description, and the disjoint super types of a target super type.

Get Narrower Concept Web Service

The Get Narrower Concept Web service is used to get the list of all the narrower concepts of a given reference concept. This processing is done by inference, which means that if A -> B -> C are narrower concepts, then the narrower concepts of A are both B and C, which is what will be returned by the endpoint.
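The inference described above amounts to a transitive closure over the direct narrower-concept links. Here is a minimal Python sketch of that idea, assuming the links are held in a plain dictionary; the `direct_narrower` mapping and the function name are illustrative and are not taken from the UMBEL code base, which is written in Clojure.

```python
from collections import deque


def narrower_concepts(direct_narrower, concept):
    """Return every concept reachable from `concept` through narrower links."""
    seen = set()
    queue = deque(direct_narrower.get(concept, ()))
    while queue:
        current = queue.popleft()
        if current in seen:
            continue  # already visited, which also guards against cycles
        seen.add(current)
        queue.extend(direct_narrower.get(current, ()))
    seen.discard(concept)  # a concept is not narrower than itself
    return seen


# A -> B -> C: B is directly narrower than A, and C is directly narrower than B.
direct_narrower = {"A": ["B"], "B": ["C"]}
print(sorted(narrower_concepts(direct_narrower, "A")))
```

The same traversal, run over a mapping of direct broader, sub-class or super-class links, would give the inferred results for the other endpoints.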

Get Broader Concept Web Service

The Get Broader Concept Web service is used to get the list of all the broader concepts for a given reference concept. This processing is done by inference, which means that if A -> B -> C are broader concepts, then the broader concepts of C are both A and B, which is thus what will be returned by the endpoint.

The broader reference concepts do not include the super type as their top concept (use the Get Super-Class-Of web service endpoint for that).

Get Sub Classes Web Service

The Get Sub Classes Web service is used to get the list of all the sub classes of a given reference concept. This processing is done by inference, which means that if A -> B -> C are sub classes, then the sub classes of A are both B and C, which is what will be returned by the endpoint.

Get Super Classes Web Service

The Get Super Classes Web service is used to get the list of all the super classes of a given reference concept. This processing is done by inference, which means that if A -> B -> C are super classes, then the super classes of C are both A and B, which is what will be returned by the endpoint.

The super classes do include the super types as their top concept (use the Get Super-Class-Of web service endpoint for that).

Degree Web Service

The Degree Web service is used to get the degree (measure of distance) between two UMBEL reference concepts by following the path of a transitive property.
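One plausible reading of this measure is the number of links on the shortest path between the two concepts along the chosen property. The Python sketch below computes that with a breadth-first search. Both that interpretation and the `edges` mapping are assumptions made for illustration; the service itself is implemented in Clojure and may define the degree differently.

```python
from collections import deque


def degree(edges, source, target):
    """Return the number of links on the shortest path, or None if unreachable."""
    if source == target:
        return 0
    distances = {source: 0}
    queue = deque([source])
    while queue:
        current = queue.popleft()
        for neighbor in edges.get(current, ()):
            if neighbor in distances:
                continue  # already reached by a path that is at least as short
            distances[neighbor] = distances[current] + 1
            if neighbor == target:
                return distances[neighbor]
            queue.append(neighbor)
    return None


# Direct "broader" links: B is broader than C, and A is broader than B.
broader = {"C": ["B"], "B": ["A"]}
print(degree(broader, "C", "A"))
```

Because the links are followed in one direction only, asking for the degree from A to C over the same mapping finds no path and returns `None`.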

Conclusion

This new website, along with these new web service endpoints, is still using the UMBEL reference structure version 1.05. However, in the coming month or two, a new version of the reference structure should be released. The structure itself won’t change much, apart from the introduction of a few new reference concepts, but new mechanisms (mostly related to attributes) will be introduced. It will also come with brand new mappings to external data schemas and data sources such as Schema.org, Wikipedia, etc.

On my side, I will start writing more about UMBEL. New web service endpoints will be released over time. The API available to use, manage and leverage the structure will constantly expand.

On the other side, I will write about how the UMBEL reference structure can be used, and how it can be leveraged to integrate data sources, to expand search queries, etc.
