As I said in my previous blog post, a conStruct instance is nothing more than a skin for one or multiple structWSF instances. conStruct is a user of a structWSF network.

But… what does that mean?

It means that each conStruct tool communicates with one or multiple structWSF instances. Every feature of conStruct comes from structWSF. The only thing conStruct does is present information to users and give them tools to manipulate the data.

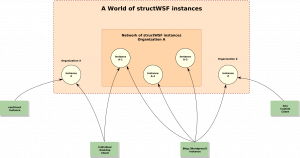

A structWSF instances network

A structWSF instance is a set of web service endpoints. Each endpoint gets registered in a network. Each query sent to any of the web service endpoints of the network gets authenticated (and possibly rejected) by the network.

All structWSF instances share the same basic web service endpoints. However, a specialized structWSF instance can add new functionality to the framework by developing new endpoints that do special things. Others can un-register services that have nothing to do with the mission of the instance, etc.

Not all structWSF instances are the same, but all of them share the same interface.

Individual people or organizations can choose to create structWSF nodes, and their purposes can be quite different. Some organizations could create structWSF nodes for internal purposes only, for example to help their departments share different kinds of data. Some people might want to set up a structWSF node where they can archive and share all the data specific to their hobbies. Whatever the use case is, they want a platform to ingest, manage, interact with and publish data, publicly or privately.

In the schema above, we can see that different structWSF instances have been created and are maintained by different organizations, for different purposes. Some of the clients communicate with these structWSF instances as public users of the datasets published on the node(s), while other users access datasets that only they have access to.

As you can see, some users communicate with multiple structWSF instances. This means that these users care about data from different datasets, maintained by different organizations. Why and what for? We don’t know. It can be for any reason. It can be a web portal that aggregates all the information about a specific domain that is shared amongst multiple nodes, or it can be a user who gets information from his clients’ networks to get things done.

What is important to keep in mind with the schema above is that any kind of people, organizations and systems can leverage the structured data they have access to, even when that data is hosted by different organizations that make different datasets and different web service endpoints available. Some organizations could even create a web service endpoint that works with their dataset and exposes some special algorithms they use to disambiguate or tag entities, etc.

A network in action

You are probably telling yourself: well, the grand vision is good… but where is the meat around the bone?

Let’s take a look at the conStructSCS sandbox demo. You have two datasets in there: (1) the Sweet Tools and (2) RePEc. There is one thing that you probably didn’t notice: the two datasets live on two different structWSF instances (each structWSF instance is hosted on a different web server). This means that if you perform a search or a browse query, the results you get in the conStruct user interface come from two totally different servers, with different data maintainers, hosted by different organizations, etc. Still, all results are displayed in the same user interface, which is the conStructSCS demo sandbox.

Under the curtain

Let’s take a look at what is happening. First, run this search query for “rdf”. Do you see what appears in the yellow box? This is a list of the queries exchanged between conStruct and two structWSF instances. You want more? Try this other search query for “rdf”. Now you also have access to the body of the messages.

For this demo sandbox, we enabled the “wsf_debug” parameter so that users of the sandbox can see how a conStruct node interacts with structWSF instances. If the value of this URL parameter is “1”, the header and the body of each query are displayed to the users. If the value is “2”, only the header is displayed.

This means that you can append the “&wsf_debug=1” parameter to any URL of the demo sandbox to see the messages exchanged between the systems. Why? Because all conStruct tools communicate with one or multiple web service endpoints, on one or multiple structWSF instances.
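Appending the parameter by hand works, but it is easy to get wrong when the URL already has a query string. Here is a minimal Python sketch of that step. The helper name `with_wsf_debug` and the `example.org` URL are made up for illustration; only the `wsf_debug` parameter name comes from the sandbox.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def with_wsf_debug(url: str, level: int = 1) -> str:
    """Return `url` with the wsf_debug query parameter set to `level`."""
    parts = urlsplit(url)
    # Keep the existing parameters, dropping any previous wsf_debug value.
    params = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key != "wsf_debug"
    ]
    params.append(("wsf_debug", str(level)))
    return urlunsplit(parts._replace(query=urlencode(params)))


# Hypothetical page URL, used only to show the transformation.
print(with_wsf_debug("http://example.org/conStruct/search/?query=rdf"))
```

Note that the existing parameters are re-encoded by `urlencode`, so a URL with unusual escaping may come back with equivalent but differently written escapes.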

Now, let’s take a look at the output of the search query above.

- Web service query: [[url: http://localhost/ws/search/] [method: post] [mime: text/xml] [parameters: ] [execution time: 0.279745101929]] (status: 200) OK – .

- Web service query: [[url: http://bknetwork.org/ws/search/] [method: post] [mime: text/xml] [parameters: query=rdf&types=all&datasets=http%3A%2F%2Fbknetwork.org%2Fwsf%2Fdatasets%2F283%2F%3Bhttp%3A%2F%2Fconstructscs.com%2Fwsf%2Fdatasets%2F160%2F&items=10&page=0&inference=on&include_aggregates=true&registered_ip=self%3A%3A0] [execution time: 0.289397001266]] (status: 200) OK – .

- Web service query: [[url: http://localhost/ws/dataset/read/] [method: get] [mime: text/xml] [parameters: uri=all&registered_ip=self%3A%3A0] [execution time: 0.123399972916]] (status: 200) OK – .

- Web service query: [[url: /ws/dataset/read/] [method: get] [mime: text/xml] [parameters: uri=all&registered_ip=self%3A%3A0] [execution time: 0.18315911293]] (status: 200) OK – .

Each bullet above is a query sent to a specific structWSF instance. For each query, you have this information:

- URL of the web service endpoint where the query has been sent.

- HTTP method used to send the query

- MIME type (Accept HTTP header parameters) requested

- Parameters of the query

- Time it took to execute the query (including network latency & query processing)

- Status of the query from the web service endpoint
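If you want to work with these debug lines rather than just read them, the fields listed above can be pulled out with a regular expression. This is only a sketch based on the lines shown in this post: the pattern and the `parse_debug_line` name are mine, not part of conStruct, and the pattern assumes that no field contains a `]` character.

```python
import re
from urllib.parse import parse_qs

# Pattern for the debug lines shown in this post (assumes no "]" inside a field).
DEBUG_LINE = re.compile(
    r"\[url: (?P<url>[^\]]*)\] "
    r"\[method: (?P<method>[^\]]*)\] "
    r"\[mime: (?P<mime>[^\]]*)\] "
    r"\[parameters: (?P<parameters>[^\]]*)\] "
    r"\[execution time: (?P<seconds>[^\]]*)\]\] "
    r"\(status: (?P<status>\d+)\)"
)


def parse_debug_line(line: str) -> dict:
    """Split one debug line into its url, method, mime, parameters, time and status."""
    match = DEBUG_LINE.search(line)
    if match is None:
        raise ValueError("not a web service debug line")
    record = match.groupdict()
    record["seconds"] = float(record["seconds"])
    record["status"] = int(record["status"])
    # parse_qs percent-decodes the values and returns {name: [values]}.
    record["parameters"] = parse_qs(record["parameters"])
    return record


# A shortened copy of the second debug line above.
SAMPLE = (
    "Web service query: [[url: http://bknetwork.org/ws/search/] [method: post] "
    "[mime: text/xml] [parameters: query=rdf&types=all&datasets="
    "http%3A%2F%2Fbknetwork.org%2Fwsf%2Fdatasets%2F283%2F%3B"
    "http%3A%2F%2Fconstructscs.com%2Fwsf%2Fdatasets%2F160%2F&items=10&page=0] "
    "[execution time: 0.289397001266]] (status: 200) OK"
)

record = parse_debug_line(SAMPLE)
print(record["url"], record["method"], record["status"], record["seconds"])
# The datasets parameter carries several dataset URIs separated by ";".
for dataset_uri in record["parameters"]["datasets"][0].split(";"):
    print(dataset_uri)
```

Decoding the parameters makes one detail of the log easier to see: a single search query names both datasets at once, joined with a semicolon.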

Since this conStruct instance is linked to two different structWSF instances, the search tool sends a search query to two different search web service endpoints. Additionally, it queries these structWSF instances to get the descriptions of the searched datasets (to display the proper names of the datasets in the user interface).

Each query is validated by the structWSF instance that receives it, to make sure that it is a legitimate query. If it is, results are returned. Once these queries are sent and the answers received, the structSearch tool can generate the page and display it to the user.
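To make the fan-out concrete, here is a rough Python sketch of how a client could prepare the same search for several structWSF instances. It is not conStruct's actual code. The function name `build_search_requests` is hypothetical, the parameter names are copied from the debug output above, and sending the same body to every instance is an assumption on my part.

```python
from urllib.parse import urlencode
from urllib.request import Request


def build_search_requests(instances, query, datasets, items=10, page=0):
    """Build one POST request per structWSF search endpoint (nothing is sent)."""
    # Assumption: every instance receives the same parameters, with the
    # dataset URIs joined by ";" as in the debug output.
    body = urlencode({
        "query": query,
        "types": "all",
        "datasets": ";".join(datasets),
        "items": items,
        "page": page,
        "inference": "on",
    }).encode("ascii")
    return [
        Request(
            base.rstrip("/") + "/ws/search/",
            data=body,
            headers={"Accept": "text/xml"},
            method="POST",
        )
        for base in instances
    ]


requests_to_send = build_search_requests(
    ["http://localhost", "http://bknetwork.org"],
    "rdf",
    [
        "http://bknetwork.org/wsf/datasets/283/",
        "http://constructscs.com/wsf/datasets/160/",
    ],
)
for request in requests_to_send:
    print(request.get_method(), request.full_url)
# Each request could then be sent with urllib.request.urlopen(request);
# an instance that rejects the query answers with an HTTP error status.
```

The client only prepares and sends queries. Deciding whether a query is legitimate stays on the structWSF side, which is the point of the paragraph above.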

Do you want more? Here is a list of queries sent by different conStruct tools to different web services endpoints:

(Note: this debug info tab has been added so that people can see what is happening under the hood. However, this information is only accessible to the registered conStruct instance and the administrator of that instance.)

Do it by yourself, from your desktop computer

I said that people or organizations that created data on these structWSF instances could manage and manipulate that data from anywhere, not only from within conStruct. Let’s test this.

I changed the permissions on the Sweet Tools List dataset so that it is publicly available for reading. That way, anyone will be able to send Curl queries against the dataset, to that structWSF instance.

Now, let’s try a couple of queries to different web service endpoints. Let’s start with a query for the keyword “rdf” on the Sweet Tools dataset:

curl -H "Accept: text/xml" "http://constructscs.com/ws/search/" -d "query=rdf&types=all&datasets=http%3A%2F%2Fconstructscs.com%2Fwsf%2Fdatasets%2F122%2F&items=10&inference=on"

What you will get for this query is a list of 10 instance records that match this query. You don’t like the internal XML representation of the system? Then try the internal JSON representation by running this query:

Maybe this is not good enough for you? Then let’s try RDF+XML:

curl -H "Accept: application/rdf+xml" "http://constructscs.com/ws/search/" -d "query=rdf&types=all&datasets=http%3A%2F%2Fconstructscs.com%2Fwsf%2Fdatasets%2F122%2F&items=10&inference=on"
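The same query can be sent from any language, not just with Curl. As an illustration, here is a small Python sketch that builds the equivalent request using only the standard library. The function names are mine. The URL, the parameters and the two MIME types come from the Curl commands in this post, and whether the service still answers at that address is not something the sketch can guarantee.

```python
from urllib.parse import urlencode
from urllib.request import Request, urlopen

SEARCH_URL = "http://constructscs.com/ws/search/"
SWEET_TOOLS = "http://constructscs.com/wsf/datasets/122/"


def search_request(query, accept="text/xml"):
    """Build the POST request that the curl commands send."""
    body = urlencode({
        "query": query,
        "types": "all",
        "datasets": SWEET_TOOLS,
        "items": 10,
        "inference": "on",
    }).encode("ascii")
    # Only the Accept header changes between the XML and RDF+XML queries.
    return Request(SEARCH_URL, data=body, headers={"Accept": accept}, method="POST")


def run(request, timeout=30):
    """Send the request and return the response body as text."""
    with urlopen(request, timeout=timeout) as response:
        return response.read().decode("utf-8", errors="replace")


# Example usage (needs network access to the sandbox):
#   print(run(search_request("rdf", accept="application/rdf+xml")))
```

Switching the representation is a one-argument change, because the format is negotiated through the Accept header and the query itself stays the same.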

I think you understood the point here, so I won’t continue.

Now, let’s send a query to get all the datasets accessible by you:

curl -H "Accept: application/rdf+xml" "http://constructscs.com/ws/auth/lister/" -d "mode=adataset"

If you can query all these things with Curl, this means that anything can query these services. Standalone software can be developed to leverage these content nodes, and so can other online applications.

Conclusion

As you probably learned with this blog post, one of the powers of structWSF is that it creates networks of structured content nodes that can be accessed by anything, from anywhere, publicly or privately.

As you noticed, all this stuff is not only about integrating any kind of data, but also about publishing it in a flexible way.