There are multiple ways to represent the World we live in. One person will think about something in one way, while the person next to them will think about the same thing in another. They will differ on its characteristics, on the ways to interact with it and use it, on its composition, on its relations with other things, and so on.

What is nice is that probably all of these different ways to think about this thing are good: after all, there are many ways to think about the same thing. It is this characteristic of thinking about things in different ways that leads to innovation.

But innovation is also not a game where anything goes. Things that work in the real world and in real ways need to adhere to certain rules, concepts, principles and theories. Continued innovation requires working within these coherent frameworks of natural relationships and order.

So, while a beautiful thing is that we can create new frameworks to think about things differently, not all of those frameworks work as well as others or make sense.

While it is conceivable that one could propose any new framework to think about things differently, frameworks that are actually useful should, among other things:

- Make sure the development of innovations within the framework is coherent

- Make sure the development of innovations within the framework is in context

- Help coordinate the development of projects and the cooperation of agents that work on these projects in order to achieve (1) and (2).

What seems clear to me is that the lack of any of (1), (2) or (3) makes innovations difficult and/or less powerful and less useful.

Why Would the Development Of Ontologies be Different?

The Semantic Web is often seen as a place where people describe things in multiple ways and where these things are more or less magically related together. For example, if you can’t properly describe something, you only have to create a new ontology, or to extend an existing one, and to publish it, et voilà!

The more I work in this field, the less I believe in this.

Remember my first point? People tend to think about things in different ways. The same logic applies to the development of ontologies (particularly in the development of ontologies!). Two ontologies, intended to describe the same things, can describe them in totally different ways. So, while some of the magic is that both ontologies can perfectly describe these things but only in different ways, there are other aspects that are not magical at all.

The problem here is to have at least one framework that helps people to develop ontologies such that the:

- Developed ontologies remain coherent

- Developed ontologies are in context

- Coordination of the development of ontologies and the cooperation of the agents working on these ontology projects is effective enough to achieve goals (1) and (2).

This construct looks familiar, doesn’t it?

What I am proposing here is to use UMBEL as a coherent framework for ontology development. I am not saying that other frameworks cannot play a guiding role in ontology development. But I am saying two things. First, some form of reference framework is necessary. And, second, truly useful frameworks must also be consistent and coherent.

What I am stressing here is the importance of conceptual frameworks to develop ontologies that can be used by people, companies and systems to properly and efficiently exchange data; and at some level, to reason over this data, too.

I think that the only way to do this in an efficient way is by grounding ontologies in such conceptual frameworks.

The ultimate goal is to make data exchange and data reasoning effective for the people, organizations and systems that consume this sea of data. And I believe that this is not possible to achieve without grounding these efforts in a coherent, conceptual framework.

An Example at Work

Nothing is better than an example to show the potential of UMBEL as a coherent framework to develop, and cross-link, ontologies.

Let’s take the Bibliographic Ontology as an example, which we just cross-linked to UMBEL in yesterday’s version 071 release. (Among a dozen other key ontologies; the list is getting pretty cool!)

The goal is to link BIBO classes to UMBEL subject concepts. The linkage is done using three properties: owl:equivalentClass, rdfs:subClassOf and umbel:isAligned.
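A minimal sketch of what such links look like as data, written in plain Python rather than with an RDF library. Only the three mappings shown are taken from this post; reading "the same thing as" for bibo:Periodical as owl:equivalentClass is an assumption, and the names `LINKS`, `ALLOWED_PREDICATES` and `links_by_predicate` are hypothetical helpers for illustration.

```python
# Each link is a (subject, predicate, object) triple, as in RDF.
# This is not the full BIBO mapping, only the mappings mentioned in this post.
LINKS = [
    ("bibo:Patent", "owl:equivalentClass", "umbel:Patent"),
    # Assumption: "the same thing as" is read here as owl:equivalentClass.
    ("bibo:Periodical", "owl:equivalentClass", "umbel:PeriodicalSeries"),
    ("bibo:Series", "rdfs:subClassOf", "umbel:ConceptualWorkSeries"),
]

# The three linking properties named in the text.
ALLOWED_PREDICATES = {"owl:equivalentClass", "rdfs:subClassOf", "umbel:isAligned"}


def links_by_predicate(links):
    """Group (subject, object) pairs under the linking property that relates them."""
    grouped = {predicate: [] for predicate in sorted(ALLOWED_PREDICATES)}
    for subject, predicate, obj in links:
        if predicate not in ALLOWED_PREDICATES:
            raise ValueError(f"unexpected linking property: {predicate}")
        grouped[predicate].append((subject, obj))
    return grouped


for predicate, pairs in links_by_predicate(LINKS).items():
    print(predicate, pairs)
```

Keeping the links as simple triples makes it easy to load the same data into a real RDF store later.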

But firstly, what is the goal here? We try to do two things when linking such ontologies to the UMBEL framework:

- To make sure the ontology (BIBO) is coherent and consistent with other existing ontologies that are linked to the framework (other such ontologies could be FOAF, SIOC, etc.)

- To make sure that the design choices of the developed ontology are consistent with the design choices of the framework, and the other ontologies that are linked to that framework.

Both points serve a grander vision: making the Semantic Web a little bit more coherent and easier to use and understand.

The BIBO Linkage

This figure shows how BIBO classes have been linked to UMBEL subject concepts in a set-like schema:

This schema shows which set belongs to which other set. That way, we can quickly notice that bibo:Patent is equivalent to umbel:Patent. We can also see that both classes belong to (are sub-classes of) bibo:Document, umbel:PropositionalConceptualWork and umbel:ConceptualWork, etc.

We have to keep one thing in mind that we made clear in the UMBEL technical documentation: UMBEL has its own view of the World. UMBEL’s subject concept structure is its view of the World. So these linkages are consistent within the UMBEL framework. Now, let’s continue.

The Context

Remember the three points above? What we have done here is to put BIBO in context. The context is created by the UMBEL conceptual framework. Once this is done, we can check for the coherence between BIBO, UMBEL and all the other ontologies that are linked to the framework.

The figure below shows the context created by UMBEL for BIBO, FOAF and SIOC:

Considering the current description of these three ontologies, we know that bibo:Document is equivalent to foaf:Document. But there exists no relationship between these two classes and sioc:Item and sioc:Post.

Intuitively we know that there are some relationships between all these classes (at least based on their labels). We also have to keep in mind that the fact that a description is not defined (in RDF) does not mean that this description doesn’t exist (this is the open world assumption).

That being said, the figure above shows how UMBEL can help us to find such “non-described” relationships between classes of different ontologies. By contextualizing these three ontologies we now find that all these classes are sub-classes of umbel:ConceptualWork. We also know that some sioc:Post belongs to umbel:PropositionalConceptualWork (things written), just like some bibo:Document and foaf:Document stuff.

This means that this linkage — this contextualization — of external ontologies now gives us a common ground to play with: umbel:ConceptualWork. By querying this subject concept we can come up with a full range of related things: BIBO, SIOC and FOAF stuff.
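The "common ground" query can be sketched in a few lines of Python. This is an illustration only: the edge list is an assumption that encodes the relationships named in this post as direct sub-class links (the real UMBEL structure has more intermediate concepts), and `narrower_classes` and `external_only` are hypothetical helper names, not part of any UMBEL API.

```python
from collections import defaultdict

# (child, parent) sub-class links. Assumption: direct links are used here for
# brevity; the actual UMBEL hierarchy may place concepts in between.
SUBCLASS_OF = [
    ("umbel:PropositionalConceptualWork", "umbel:ConceptualWork"),
    ("bibo:Document", "umbel:ConceptualWork"),
    ("foaf:Document", "umbel:ConceptualWork"),
    ("sioc:Item", "umbel:ConceptualWork"),
    ("sioc:Post", "umbel:ConceptualWork"),
]


def narrower_classes(concept, edges):
    """Return every class that is a direct or indirect sub-class of concept."""
    children = defaultdict(set)
    for child, parent in edges:
        children[parent].add(child)
    found, stack = set(), [concept]
    while stack:
        for child in children[stack.pop()]:
            if child not in found:
                found.add(child)
                stack.append(child)
    return found


def external_only(classes):
    """Keep only classes that come from ontologies other than UMBEL."""
    return sorted(c for c in classes if not c.startswith("umbel:"))


print(external_only(narrower_classes("umbel:ConceptualWork", SUBCLASS_OF)))
# prints ['bibo:Document', 'foaf:Document', 'sioc:Item', 'sioc:Post']
```

None of the three ontologies relates sioc:Post to bibo:Document directly, yet both show up under the same subject concept once the links to UMBEL are in place.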

For example, take a look at the section “Narrower External Classes” of the umbel:ConceptualWork detailed report and extend the list of external classes (click on the ‘All Classes . . .’ link). All these things are conceptual works. This fact is made explicit by UMBEL even if no relations, or only a small number, are described in these ontologies in relation to the other ontologies. Also take a look at the list for umbel:PropositionalConceptualWork.

This also shows the coherence of the design of each ontology.

The Coherence

So, once we have the context in place, we are on our way to achieve coherence. UMBEL is 100% based on OpenCyc and Cyc, which are internally consistent and coherent within themselves. We thus use these coherent frameworks to make the mappings to external ontologies coherent, too.

The equation is simple:

“a coherent framework” + “ontologies contextualized by this framework” = “more coherent ontologies”

This context and this coherence help us to develop ontologies in two ways:

- It helps us to make sure the design of an ontology is good

- It helps us to make sure the designed ontology is coherent with other existing external ontologies

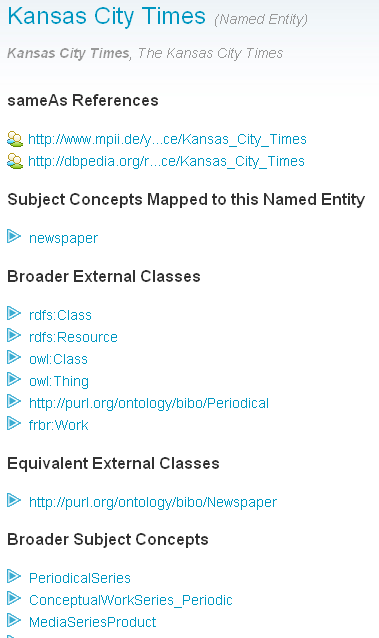

For example, when I linked BIBO classes to UMBEL subject concept classes, I found that a bibo:Series was a sub-class of umbel:ConceptualWorkSeries. Then I found that bibo:Periodical was the same thing as an umbel:PeriodicalSeries. However, I had an issue: a bibo:Series was a sub-class of bibo:Collection, and bibo:Periodical was also a sub-class of bibo:Collection. Then I found that umbel:PeriodicalSeries was a sub-class of umbel:ConceptualWorkSeries. Then the question arose: why is bibo:Periodical not a sub-class of bibo:Series instead of bibo:Collection? This is what I will propose for the next iteration of BIBO.
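The reasoning in this example can be approximated by a small heuristic check, sketched here in Python. It is not a logical entailment test and not the procedure actually used for UMBEL: it simply flags pairs of local classes whose UMBEL counterparts are in a sub-class relation that the local ontology does not mirror. Treating both kinds of link as a single "mapped to" relation is a simplification, and `ancestors` and `review_candidates` are hypothetical names.

```python
def ancestors(cls, edges):
    """Return all direct and indirect super-classes of cls in (child, parent) edges."""
    parents = {}
    for child, parent in edges:
        parents.setdefault(child, set()).add(parent)
    seen, stack = set(), [cls]
    while stack:
        for parent in parents.get(stack.pop(), ()):
            if parent not in seen:
                seen.add(parent)
                stack.append(parent)
    return seen


# Sub-class links inside BIBO and inside UMBEL, as described in the text.
BIBO_EDGES = [
    ("bibo:Series", "bibo:Collection"),
    ("bibo:Periodical", "bibo:Collection"),
]
UMBEL_EDGES = [("umbel:PeriodicalSeries", "umbel:ConceptualWorkSeries")]

# Simplification: sub-class and equivalence links are both stored as "mapped to".
MAPPING = {
    "bibo:Series": "umbel:ConceptualWorkSeries",
    "bibo:Periodical": "umbel:PeriodicalSeries",
}


def review_candidates(local_edges, reference_edges, mapping):
    """List (a, b) pairs where the framework suggests a is under b but locally it is not."""
    candidates = []
    for a, ref_a in mapping.items():
        for b, ref_b in mapping.items():
            if a == b:
                continue
            suggested = ref_b in ancestors(ref_a, reference_edges)
            present = b in ancestors(a, local_edges)
            if suggested and not present:
                candidates.append((a, b))
    return sorted(candidates)


print(review_candidates(BIBO_EDGES, UMBEL_EDGES, MAPPING))
# prints [('bibo:Periodical', 'bibo:Series')]
```

The output is a list of design questions for a human to review, which matches how the bibo:Periodical question came up in the first place.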

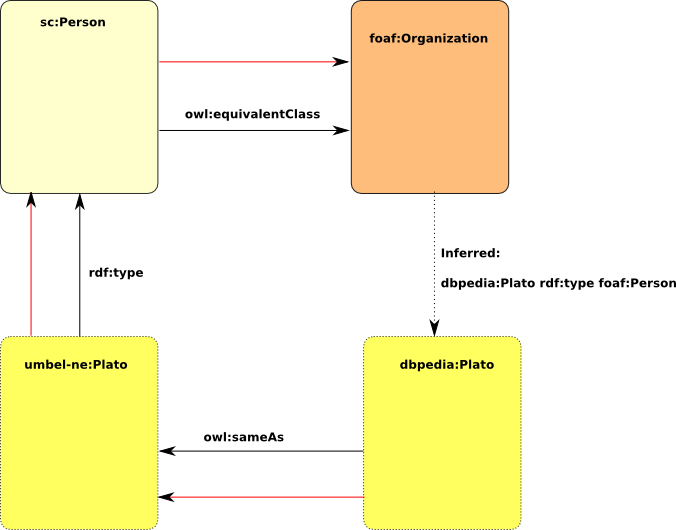

Now, what about this helping to increase the coherence between external ontologies?

One good example I have is related to SIOC and FOAF. When I linked SIOC to UMBEL, Kingsley asked me why I didn’t link sioc:Item. My answer was simple: I can’t do this, since making this linkage would disturb the coherence of UMBEL. The problem was that sioc:Item was a sub-class of foaf:Document. But considering sioc:Item’s definition, and foaf:Document’s definition and linkage to UMBEL, linking sioc:Item to UMBEL would create some incoherence in the framework because of its relationship with foaf:Document.

This discussion with Kingsley led to a thread on the SIOC mailing list, and the link from sioc:Item to foaf:Document has been removed.

These are the two general cases where UMBEL, as a coherent framework, can help the development of ontologies.

So, by achieving points (1) and (2), we are on the way to achieve point (3): coordinating the development of ontologies, and the cooperation of the agents working on these ontology projects, effectively enough to achieve goals (1) and (2).

The Final Mapped Relations

So, after application of this process and thinking, here are the UMBEL-BIBO mappings:

You can look at Appendix A to the UMBEL technical document (PDF or online); additionally you will see similar mappings for the existing dozen or so ontologies presently mapped to UMBEL. In combination, these give us the ability to ‘Explode the Domain’!

Descriptive Subject Concepts: Icing on the Cake

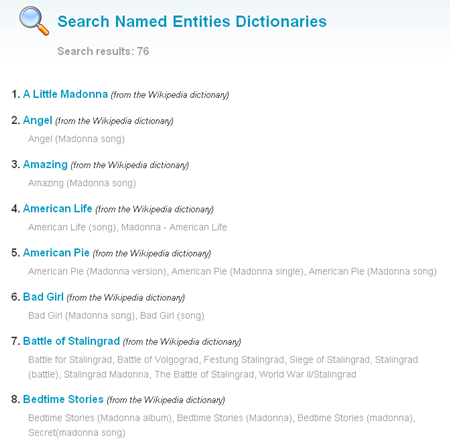

All of the description above relates to the mapping between the BIBO and UMBEL ontologies (and therefore other external ones). But, of course, we now also have the full scope of UMBEL subject concepts, which we can apply to describe what the actual BIBO citations are about.

So, while we have structural ontology relationships that can be leveraged, we also now have a common vocabulary to describe the subject matter of what these citations are about. Use of these UMBEL subject concepts now allows us to cluster and retrieve citations by subject matter.

In this manner, UMBEL becomes a consistent tagging vocabulary for describing what citations and references are about. Want everything about weaving or galaxies or opera or anything, for example? Simply characterize your citations by appropriate UMBEL subjects and then use them as part of your retrieval filters.
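As a rough illustration of such a retrieval filter, here is a short Python sketch. Everything in it is a placeholder: the citation identifiers are made up, the subject concept names (`umbel:Weaving`, `umbel:Galaxy`, `umbel:Opera`) are assumed spellings rather than verified UMBEL identifiers, and `citations_about` is a hypothetical helper.

```python
# Placeholder data: each citation is tagged with one or more subject concepts.
# The concept identifiers below are assumed names, not checked against UMBEL.
CITATION_SUBJECTS = {
    "citation-1": {"umbel:Weaving"},
    "citation-2": {"umbel:Galaxy"},
    "citation-3": {"umbel:Opera", "umbel:Weaving"},
}


def citations_about(subject, tagged):
    """Return the identifiers of all citations tagged with the given subject concept."""
    return sorted(cid for cid, subjects in tagged.items() if subject in subjects)


print(citations_about("umbel:Weaving", CITATION_SUBJECTS))
# prints ['citation-1', 'citation-3']
```

Because every citation draws its tags from the same vocabulary, the filter needs no knowledge of how each citation was originally described.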

This makes clear that UMBEL is some kind of Hydra: it can be used as a conceptual framework to help make ontologies (vocabularies) coherent and consistent, and at the same time, it can act as a conceptual description framework that describes the “matter” of things. This means that a subject concept can describe the “nature” of a thing and the “matter” of another thing at the same time.

Conclusion

UMBEL is becoming a wonderful tool that can be used in many ways. It is a vocabulary that is instantiated in a subject concept structure. It can be used not only to categorize things and to help find things, but also to define things, and to develop ontologies that define other things. We are on our way to achieve these three goals:

- Develop ontologies that are in context

- Develop ontologies that remain coherent

- Coordinate the development of ontologies and the cooperation of the agents working on these ontology projects sufficiently to achieve goals (1) and (2).

As usual, I’d like to thank my UMBEL co-editor and colleague, Mike Bergman, for his discussions and assistance on this material.

I am pleased to announce that we have resumed our work with UMBEL. We just released version v0.72, which is based on the OpenCyc version 2009-01-31. This new version is intermediary and has been created mostly to check the evolution of OpenCyc vis-à-vis UMBEL. Within the next month or so, we will release a new version (v.080), which will introduce a major new concept that should help systems and users manipulate the entire UMBEL Subject Concepts structure.