Two challenges I have found with DBpedia are that it is hard for a system to interact with the dataset, and hard to figure out how to interpret the information instantiated in it. It is hard to know which properties are used to describe individuals, and hard to know what the classes refer to. It is also hard for standalone and agent software to understand the nature of the individuals instantiated by DBpedia, because the classes they belong to are generally unknown or poorly defined.

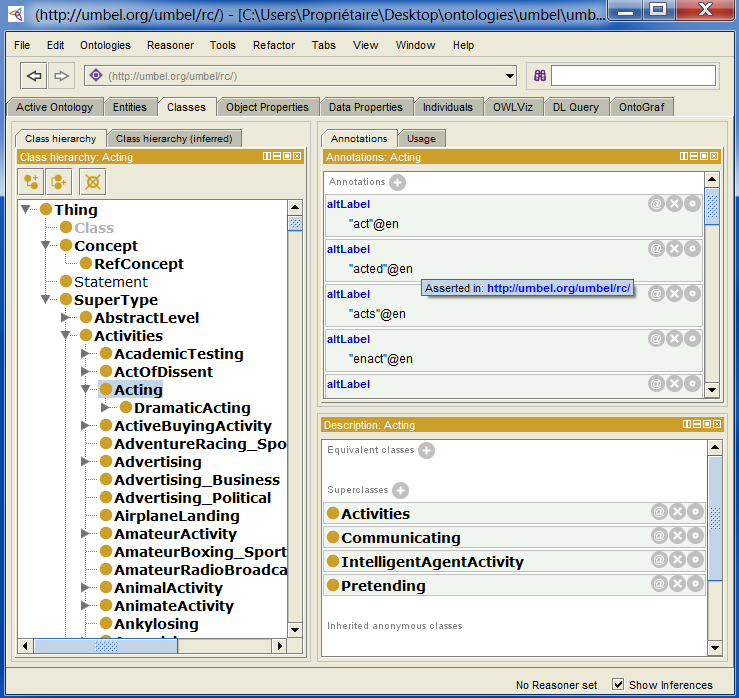

In this blog post I suggest using a method known as “exploding the domain” to overcome these difficulties of using and understanding DBpedia. This adds further usefulness to DBpedia’s already considerable value. The demonstration is based on the UMBEL subject concept structure.

As I will demonstrate below, this method consists of contextualizing classes in a coherent framework to explode their domains. By exploding the domain of a class, we link it to other classes that are defined by external ontologies. These links also help standalone and agent software to understand the meaning of that class (at least if they understand the meaning of the classes that have been linked to it). Note that we are able to explode the domains by linking classes using only three properties: rdfs:subClassOf, owl:equivalentClass and umbel:isAligned.

First of all, let me give some background information about how DBpedia individuals and UMBEL named entities have been created, and how both datasets have been linked together.

How DBpedia individuals are instantiated

DBpedia is a dataset based on the well-known Wikipedia encyclopedia. Basically, DBpedia creates one individual for each Wikipedia page. Most of the individuals that are instantiated in this way are what we call a “named entity” in UMBEL’s parlance.

But to be instantiated, an individual has to belong to a class. DBpedia chose to use Yago’s classification system (which is based on WordNet) to instantiate its individuals. This means that all DBpedia individuals are, at least in theory, instances of at least one Yago class (and in some rarer cases, they are also instances of classes defined in external ontologies).

How UMBEL named entities have been created

For its part, UMBEL’s named entities dictionaries come from different data sources. Currently, almost all public UMBEL named entities also come from Yago (example: Aristotle), but many also come from the DBTune dataset (example: Pete Baron) or others. (UMBEL’s design allows more named entities to be plugged into the system as additional dictionaries at will.)

However, unlike DBpedia, we do not use Yago’s classification system to instantiate these named entities. And unlike Yago, we do not use the WordNet classes to instantiate the named entities either.

The current UMBEL subject concept structure is based on OpenCyc. This means that the relations between the classes that instantiate the UMBEL named entities come from the Cyc knowledge base.

So while we use Yago’s named entities (from Wikipedia) as a starting basis, we instantiate them using the UMBEL subject concept classes instead of the WordNet classes. So, basically, we have switched the WordNet conceptual framework for the UMBEL (or OpenCyc) one.

But, how did we create these UMBEL named entities, instantiated using UMBEL subject concept classes and based on Yago? Here is the linkage path:

Yago classes –> WordNet synsets <– Cyc collections <– OpenCyc classes <– UMBEL subject concept classes

Et voilà !
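The linkage path can be read as a chain of lookups. Here is a minimal sketch of that reading in Python; every identifier and mapping below is a hypothetical placeholder invented for illustration, not actual Yago, WordNet, Cyc or UMBEL data, and the real linkage process may well work differently.

```python
# All identifiers below are hypothetical placeholders, not real dataset content.
# Each dictionary follows the direction of one arrow in the linkage path.
yago_to_wordnet = {"yago:Philosopher110423589": "wn:philosopher-n-1"}
cyc_to_wordnet = {"cyc:Philosopher": "wn:philosopher-n-1"}
opencyc_to_cyc = {"opencyc:Philosopher": "cyc:Philosopher"}
umbel_to_opencyc = {"sc:Philosopher": "opencyc:Philosopher"}


def invert(mapping):
    """Turn a source -> target mapping into target -> [sources]."""
    inverted = {}
    for source, target in mapping.items():
        inverted.setdefault(target, []).append(source)
    return inverted


def umbel_classes_for(yago_class):
    """Walk Yago -> WordNet, then back through Cyc and OpenCyc to UMBEL."""
    synset = yago_to_wordnet.get(yago_class)
    results = []
    for cyc in invert(cyc_to_wordnet).get(synset, []):
        for opencyc in invert(opencyc_to_cyc).get(cyc, []):
            results.extend(invert(umbel_to_opencyc).get(opencyc, []))
    return results


print(umbel_classes_for("yago:Philosopher110423589"))
```

The three right-hand mappings point "backwards" in the path, which is why the sketch inverts them before following the chain.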

How UMBEL named entities are linked to DBpedia individuals

OK, so now how do we link UMBEL named entities to DBpedia individuals? It is simple. Remember that DBpedia individuals have been created from Wikipedia pages. Also remember that Yago individuals come from the same Wikipedia pages. We can then make the link between the individuals from DBpedia and the individuals from Yago based on Wikipedia URLs.

Exactly the same logic applies for linking DBpedia individuals to UMBEL named entities.
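As a rough illustration, linking on Wikipedia URLs amounts to a join on a shared key. The sketch below assumes each dataset can be reduced to a mapping from individual URI to Wikipedia page URL; the UMBEL-side URIs and the function name `link_by_wikipedia_url` are made up for the example.

```python
# Hypothetical sample data: individual URI -> Wikipedia page it was derived from.
# The UMBEL-side URIs are illustrative placeholders.
dbpedia_pages = {
    "http://dbpedia.org/resource/Plato": "http://en.wikipedia.org/wiki/Plato",
    "http://dbpedia.org/resource/Aristotle": "http://en.wikipedia.org/wiki/Aristotle",
}
umbel_pages = {
    "http://example.org/umbel/ne/Plato": "http://en.wikipedia.org/wiki/Plato",
    "http://example.org/umbel/ne/Socrates": "http://en.wikipedia.org/wiki/Socrates",
}


def link_by_wikipedia_url(left, right):
    """Return owl:sameAs triples for individuals that share a Wikipedia page."""
    by_page = {page: uri for uri, page in right.items()}
    return [
        (uri, "owl:sameAs", by_page[page])
        for uri, page in sorted(left.items())
        if page in by_page
    ]


same_as_links = link_by_wikipedia_url(dbpedia_pages, umbel_pages)
for triple in same_as_links:
    print(triple)
```

Only Plato appears in both sample dictionaries, so only one link is produced; individuals without a counterpart are simply left unlinked.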

The end result of this linkage is that we have UMBEL named entities that are the same as DBpedia individuals. The difference is that the UMBEL named entities are now instances of UMBEL subject concepts: a totally different conceptual structure.

Remember that these named entities are contextualized in a coherent conceptual framework. And this characteristic means a lot for what is yet to come.

Web services to search and visualize these named entities

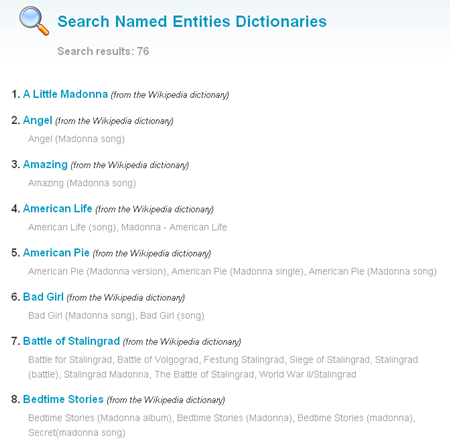

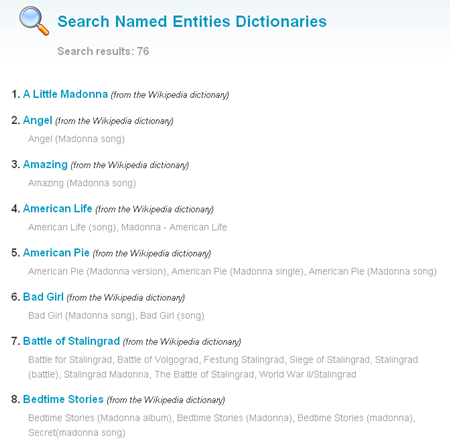

We created two new web services on the UMBEL web services home page (the user interface to these web services; the endpoints will be released later) to help people interact with these named entities:

- The “Search Named Entities Dictionaries” web service

- The “Named Entity Detailed Report” web service

The first web service lets you search amongst all publicly available UMBEL named entities dictionaries.

The second web service lets you visualize detailed information about any named entity.

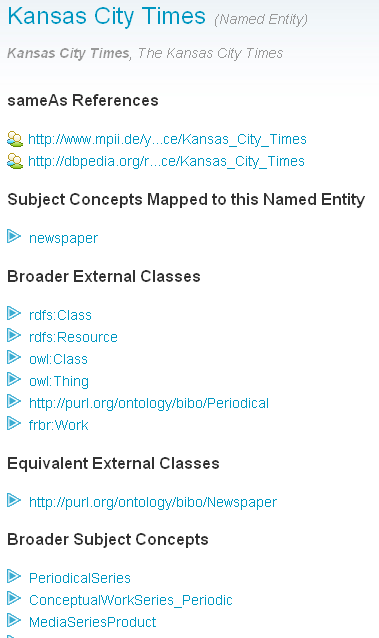

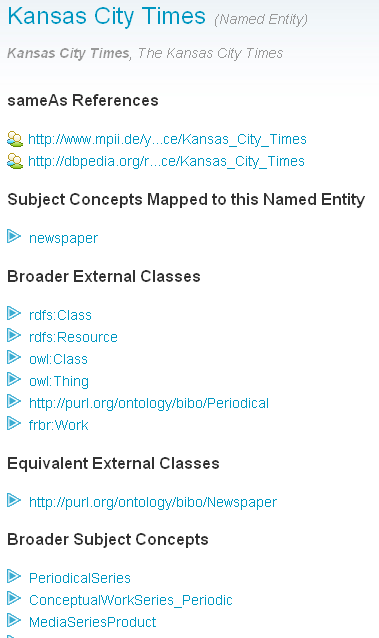

This information page shows you the full scope of information about a named entity: which classes it belongs to (subject concept classes as well as external classes); which other individuals, from other datasets, are identical to it; examples of web services that can be queried with information about this named entity; etc.

Exploding the domain of Plato

Now that this background information has been established, let’s take a look at what is happening when we link DBpedia individuals to UMBEL named entities: how that actually works to explode the domain.

Let’s take the example of dbpedia:Plato. This individual is currently defined in DBpedia as:

- yago:AncientGreekPhysicists

- yago:PhilosophersOfLanguage

- yago:PhilosophersOfLaw

- yago:PoliticalPhilosophers

- yago:AncientGreekVegetarians

- yago:AcademicPhilosophers

- yago:Philosopher110423589

Fine, but what does this mean? What if my system doesn’t know any of these classes? We, as humans, know that Plato is a person, a human being. But it is totally another story for a software agent.

What we want to do here is to explode Plato’s domain to try to find a meaning that my software system can understand.

In UMBEL, the “Plato” named entity is defined as an umbel:Person and an umbel:Intellectual. If you take a look at the detailed report for these two subject concepts, you will be able to see in the section “Broader Subject Concepts” the super-classes that Plato belongs to. So we know that Plato is a social being, a homo sapiens, etc. This is basically what happens with Yago too, except that the conceptual structure (the way to describe the entity) differs.

However, something else has happened: we have exploded Plato’s domain with classes defined in external ontologies. As you can see in the sections “Broader External Classes” and “Equivalent External Classes”, Plato is also a foaf:Person, a foaf:Agent and a cyc:Person.

This means that if my software agent doesn’t know what “yago:Person100007846” means, it may instead know what foaf:Person or foaf:Agent means. And if it does, then it will be able to manipulate the individual properly: to display it in a special way, to refer to it as a person, and to do whatever else it can with information about a “person”.
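To make the agent's point of view concrete, here is a small sketch of a program that only understands foaf:Person. The class names come from the Plato example above; the `HANDLERS` table and the `describe` function are hypothetical, standing in for whatever a real agent would do.

```python
# Some of the classes asserted for Plato in DBpedia (from the list above).
dbpedia_types = {
    "yago:PhilosophersOfLanguage",
    "yago:PoliticalPhilosophers",
    "yago:Philosopher110423589",
}

# The same individual once its domain has been exploded through UMBEL.
exploded_types = dbpedia_types | {
    "umbel:Person",
    "umbel:Intellectual",
    "foaf:Person",
    "foaf:Agent",
    "cyc:Person",
}

# Hypothetical agent: it only knows how to handle foaf:Person.
HANDLERS = {"foaf:Person": lambda name: f"{name} is a person"}


def describe(name, types):
    """Use the first class the agent understands; return None if there is none."""
    for cls in sorted(types):
        handler = HANDLERS.get(cls)
        if handler is not None:
            return handler(name)
    return None


print(describe("Plato", dbpedia_types))
print(describe("Plato", exploded_types))
```

With only the Yago classes the agent finds nothing it recognizes; with the exploded set it finds foaf:Person and can act on it.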

Exploding the domain works because these external ontology classes have been referentially linked to a coherent conceptual structure.

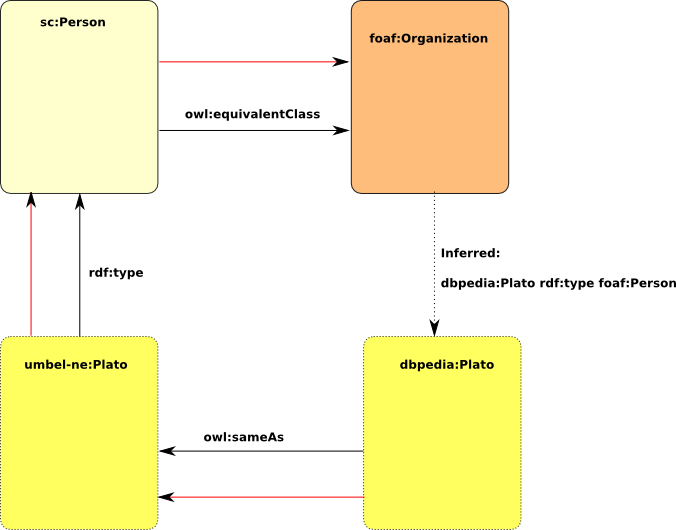

The inference path

Let’s take a look at the fundamental reasons why the scenario above works.

First, you, and your system, have to trust the UMBEL named entities dictionaries and the UMBEL subject concept structure to perform the inference that I will explain below. If you and your system trust these linkage assertions, then you will be able to act according to the knowledge that has been inferred.

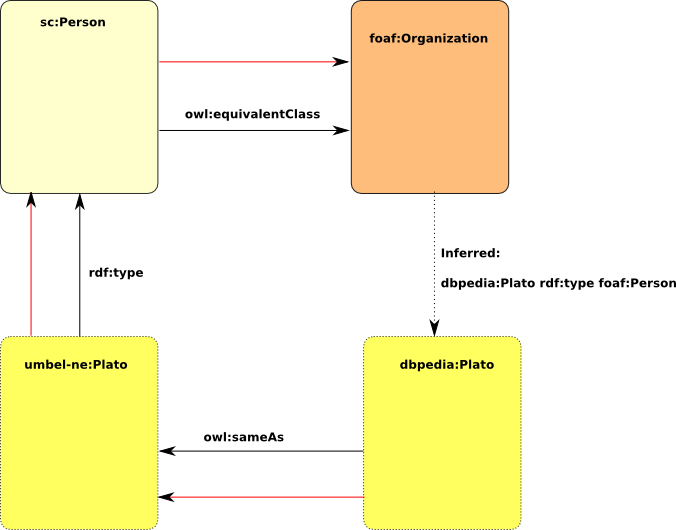

DBpedia individuals are linked to UMBEL named entities using the owl:sameAs property. This means that DBpedia individual A is identical to (has the same semantic meaning as) the UMBEL named entity B. They both refer to the same individual.

This means that if B is defined as being of rdf:type sc:Person (“sc” stands for Subject Concept), then we can infer that A is defined as being of rdf:type sc:Person too.

If sc:Person is owl:equivalentClass with foaf:Person, we can infer that umbel:B is a foaf:Person, so that dbpedia:A is a foaf:Person too!
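This inference path can be sketched with a few lines of forward chaining over plain tuples. This is a deliberately tiny illustration that covers only the two rules used above (types propagate across owl:sameAs and across owl:equivalentClass); it is not a full OWL reasoner, and the function name `infer_types` is my own.

```python
def infer_types(triples):
    """Propagate rdf:type through owl:sameAs and owl:equivalentClass."""
    facts = set(triples)
    changed = True
    while changed:
        changed = False
        new = set()
        # Both linking properties are symmetric.
        for s, p, o in facts:
            if p in ("owl:sameAs", "owl:equivalentClass"):
                new.add((o, p, s))
        for s, p, o in facts:
            if p == "owl:sameAs":
                # Every type of s is also a type of o.
                new |= {
                    (o, "rdf:type", c)
                    for (x, q, c) in facts
                    if q == "rdf:type" and x == s
                }
            elif p == "owl:equivalentClass":
                # Every instance of class s is also an instance of class o.
                new |= {
                    (x, "rdf:type", o)
                    for (x, q, c) in facts
                    if q == "rdf:type" and c == s
                }
        if not new <= facts:
            facts |= new
            changed = True
    return facts


asserted = {
    ("dbpedia:A", "owl:sameAs", "umbel:B"),
    ("umbel:B", "rdf:type", "sc:Person"),
    ("sc:Person", "owl:equivalentClass", "foaf:Person"),
}
inferred = infer_types(asserted)
print(("dbpedia:A", "rdf:type", "foaf:Person") in inferred)
```

The loop repeats until no new triple appears, so the result does not depend on the order in which the three assertions are processed.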

We can see similar examples for exploding the domains:

Exploring ConceptualWorks, PeriodicalSeries and NewspaperSeries

In my “UMBEL as a Coherent Framework to Support Ontology Development” blog post from last week, I showed how UMBEL subject concepts acted to create context for linked classes defined in external ontologies. Since DBpedia individuals are instances of classes, and some of these classes are linked to UMBEL, these subject concept classes also give context to those individuals!

As some examples, go ahead and take a look at the “Named Entities for …” section of these detailed report pages:

The partial list of named entities returned by the detailed report viewer shows named entities that mainly come from Wikipedia (and so have links to DBpedia). These subject concepts give a coherent context to those DBpedia individuals.

You should quickly notice, for example, that dbpedia:Kansas_City_Times is not only a sc:NewspaperSeries, a sc:PeriodicalSeries and a sc:ConceptualWork; it is also a frbr:Work, a bibo:Periodical and a bibo:Newspaper.
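One way to picture how a single direct type fans out into all six classes is a walk over the class links. In the sketch below, the specific links in `CLASS_LINKS` are my assumptions (which subject concept points at which external class, and through which of the three properties, is not spelled out here); only the six class names come from the example.

```python
# ASSUMED links for illustration: class -> classes whose membership it implies.
# The real structure may connect these classes differently.
CLASS_LINKS = {
    "sc:NewspaperSeries": ["sc:PeriodicalSeries", "bibo:Newspaper"],
    "sc:PeriodicalSeries": ["sc:ConceptualWork", "bibo:Periodical"],
    "sc:ConceptualWork": ["frbr:Work"],
}


def explode(direct_types):
    """Collect every class reachable from the direct types via CLASS_LINKS."""
    seen = set()
    stack = list(direct_types)
    while stack:
        cls = stack.pop()
        if cls not in seen:
            seen.add(cls)
            stack.extend(CLASS_LINKS.get(cls, []))
    return seen


kansas_city_times_types = explode(["sc:NewspaperSeries"])
print(sorted(kansas_city_times_types))
```

Starting from the single assumed direct type sc:NewspaperSeries, the walk reaches the two broader subject concepts and the three external classes.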

The context created by these UMBEL subject concepts gives not only new power to linked external classes, but also to their instances, such as these DBpedia individuals!

Conclusion

Contexts created by UMBEL subject concepts emerge from the linkage that exists among all the subject concepts, and from the linkage between those subject concept classes and classes defined in external ontologies. These contexts are consistent because of the coherence of the structure that is powered by OpenCyc (Cyc).

So far, most Linked Data has been about the “things” or named entities of the world, organized according to either Wikipedia categories or WordNet. These structures may have some internal structural consistency, but were never designed to play the role of a coherent reference framework. The coherence of UMBEL (based on the coherence of Cyc) is a powerful contextual lever for bringing order to this chaos.

Once information gets linked to a coherent framework such as UMBEL, powerful things start to happen. And with each new linkage and relation to additional external ontologies, that power grows.

I wrote this blog post to show again the power of exploding the domain using DBpedia as an example, and how UMBEL can help to use and to leverage such big datasets.