My initial intuition is that I could serialize RDF data as Clojure code in which the OWL semantics of the RDF data is, in some way, embedded. I want to test how well the general saying about homoiconic languages, “Data as Code, Code as Data”, fits with RDF and OWL.

Another intuition I have is the concept of Portable Data: stateful RDF data that embeds its own semantics and that doesn’t rely on external ontologies (which are mostly stateless, since we can rarely rely on their stated versions). My intuition is that it would be possible to serialize RDF data in such a way that it would be self-aware of its own semantics, which means that it would know how it can be interpreted, how it can be used, and how it should be validated. The idea is to end up with Portable Data snippets that could be exchanged between systems without requiring prior, or subsequent, schemas (ontologies) to interpret that information. Web service endpoints such as OSF, or any other kind of application, could then emit such Portable Data structures without requiring any subsequent ontology analysis on their part.

However, before being able to implement and demonstrate these intuitions, the first step is to check what such an RDF serialization may look like. This is the goal of this blog post.

Serializing RDF Data as Clojure Code

Where to start? There are probably multiple ways to do that. Do we want to use a map, a struct map, a record, or something else? What I wanted to use (at least initially) is a basic data structure that would give me the flexibility I need to serialize RDF data. I wanted a core structure that existing Clojure developers could easily manipulate using the Clojure functions and techniques they are used to.

The collection I chose to start with is the map. This key/value structure is ideal for serializing RDF data. It looks like JSON, but is even simpler since it requires neither commas nor colons in its syntax.

The crux of the map structure is that its keys can be virtually any value: keywords, symbols, strings, characters, booleans, numbers, and even nil. Regular expressions can technically be used as keys too, but they make poor ones since two identical patterns are not equal to each other. What is worth stressing here is that symbols can be a lot of different things: they are the names of vars, functions, etc.
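A quick way to check these claims at the REPL (a minimal sketch; the map and its values are arbitrary): keys of different kinds can live in the same map, nil is a legal key, commas are only whitespace, and a regular expression literal cannot be looked up again because patterns compare by identity rather than by value.

```clojure
;; One map mixing several kinds of keys (the values are arbitrary).
(def key-demo {:keyword 1
               'symbol  2
               "string" 3
               \c       4
               true     5
               42       6
               nil      7})

(get key-demo 'symbol) ;; => 2, the symbol itself is the key
(get key-demo nil)     ;; => 7, nil is a legal key

;; Commas are treated as whitespace, so both literals are the same map.
(= {:a 1, :b 2} {:a 1 :b 2}) ;; => true

;; java.util.regex.Pattern has no value equality: two identical
;; literals are different objects, hence different keys.
(= #"a" #"a") ;; => false
```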

This opens a world of possibilities for serializing RDF data as Clojure code. Since keys can be symbols, and symbols can name virtually anything, the keys of our map can in effect be anything: this is almost too good to be true!

What we will investigate in the remainder of this blog post are different ways to serialize RDF data as Clojure code. These are the initial tests I did to test my intuitions. All of them work, but only the last one really opens up a world of possibilities and enables me to implement my early intuitions.

Quick Introduction to RDF Data

RDF is nothing more than a set of triples of the form:

<subject> <predicate> <object>

where the <subject> is the thing (resource, record, entity, etc.) being described, the <predicate> is the property (attribute, etc.) that describes the subject, and the <object> is the value of the predicate, which can be a reference to another subject, a literal value, etc.

Each <subject> has at least one type. A type is nothing more than a class of things, defined in an RDFS schema or an OWL ontology.

If you wire these triples together, you get a directed graph, which we often refer to as a dataset. It is as simple as that. However, I won’t claim that RDF is necessarily simple, since its expressivity (a double-edged sword) can make things much more complex.
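To make this vocabulary concrete, here is a minimal sketch that represents three triples (the same ones as the structJSON record used later in this post) as Clojure vectors, then wires them into a graph indexed by subject. Wrapping literals in a map with a :value key is an assumption of this sketch, used only to tell literals apart from URIs; types-of is a hypothetical helper.

```clojure
;; Full URIs used by the triples below.
(def rdf-type   "http://www.w3.org/1999/02/22-rdf-syntax-ns#type")
(def pref-label "http://purl.org/ontology/iron#prefLabel")
(def knows      "http://xmlns.com/foaf/0.1/knows")

;; Each triple is a [subject predicate object] vector. Literals are
;; wrapped in a map with a :value key (an assumption of this sketch).
(def triples
  [["http://dataset1.com/record-a/" rdf-type "http://umbel.org/umbel/rc/Person"]
   ["http://dataset1.com/record-a/" pref-label {:value "Bob"}]
   ["http://dataset1.com/record-a/" knows "http://dataset2.com/record-b/"]])

;; Wiring the triples together: subject -> predicate -> vector of objects.
;; An object that is a URI string is an edge to another node of the graph.
(def graph
  (reduce (fn [g [s p o]] (update-in g [s p] (fnil conj []) o))
          {}
          triples))

(defn types-of
  "Returns the types (objects of rdf:type) of a subject in the graph."
  [g subject]
  (get-in g [subject rdf-type]))

(types-of graph "http://dataset1.com/record-a/")
;; => ["http://umbel.org/umbel/rc/Person"]
```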

The semantics of the data lies in the <predicate> and the type. It is the predicate and the type that tell us how to interpret, and use, the data. They are what is used to validate the data, for example. That is exactly where Clojure, and its map structure, can help us create this kind of portable data.

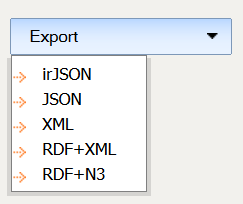

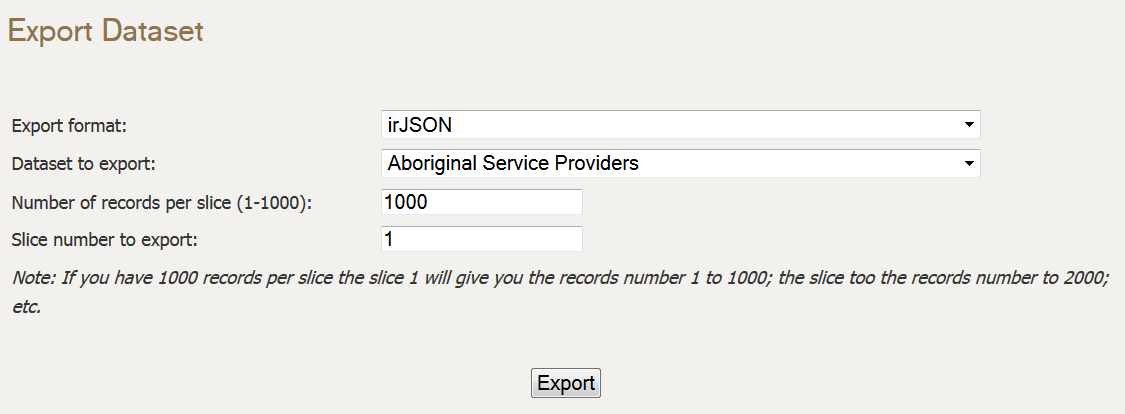

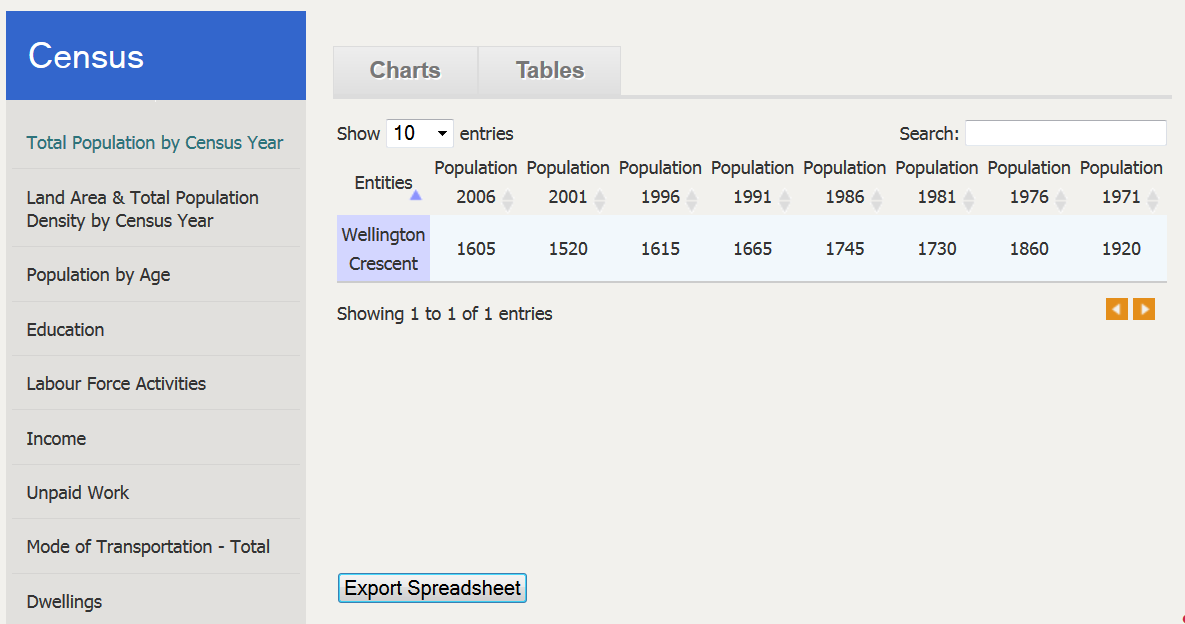

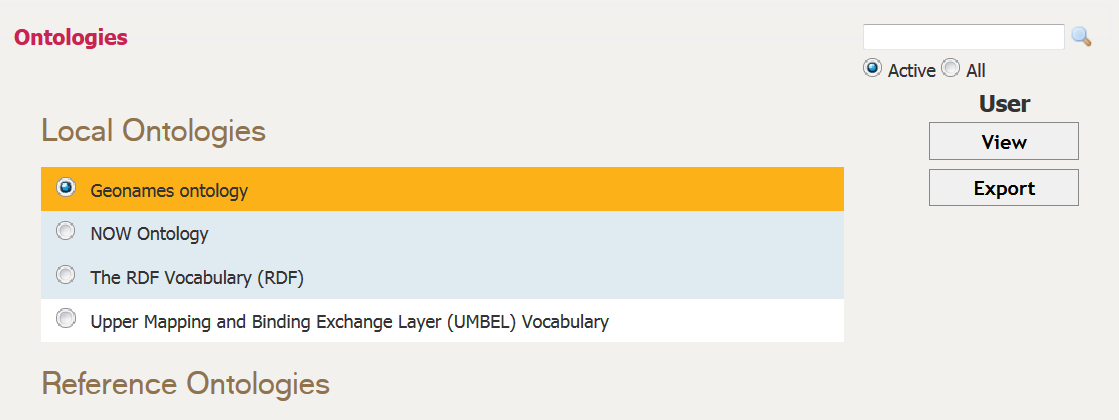

As you will see below, the serialization of RDF data as Clojure code looks like the structJSON RDF serialization format developed by Structured Dynamics and used at the core of the Open Semantic Framework. This is no coincidence: that simple structure has been highly effective for serializing and transmitting RDF information between OSF web services and other applications such as OSF for Drupal and other JavaScript applications.

Leveraging Serialization’s Hierarchy to Create Triples

Before jumping into Clojure, let’s take a quick look at a really simple structJSON record. What I want to show you is how triples can be extracted from such a data structure. The same principle will be used to extract triples from the Clojure serialization:

[cc lang='javascript' line_numbers='false']
[raw]"subject": [
  {
    "uri": "http://dataset1.com/record-a/",
    "predicate": [
      {
        "rdf:type": "http://umbel.org/umbel/rc/Person"
      },
      {
        "iron:prefLabel": "Bob"
      },
      {
        "foaf:knows": {
          "uri": "http://dataset2.com/record-b/"
        }
      }
    ]
  }
][/raw]
[/cc]

What we leverage here to extract triples is the hierarchical nature of the serialization. The "subject" key introduces an array of objects. Each object has a "uri" key, which is the identifier (the <subject> of a triple). Then the "predicate" key introduces the series of attributes of that record. Each element of that array is a predicate whose key is the prefixed version of the RDF <predicate>, followed by its value. If you read the documentation, you will see that you can go down another level, called the reification of the triple (not to be confused with Clojure’s reify mechanism), which is used to define extra information related to a triple statement. That structJSON code would produce the following N-Triples:

[cc lang='text' line_numbers='false']
[raw]<http://dataset1.com/record-a/> <http://www.w3.org/1999/02/22-rdf-syntax-ns#type> <http://umbel.org/umbel/rc/Person> .
<http://dataset1.com/record-a/> <http://purl.org/ontology/iron#prefLabel> "Bob" .
<http://dataset1.com/record-a/> <http://xmlns.com/foaf/0.1/knows> <http://dataset2.com/record-b/> .[/raw]
[/cc]
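The same extraction can be sketched in Clojure. This is a minimal illustration, not OSF code: it assumes the structJSON record has already been parsed into Clojure data with string keys, it ignores the reification level, and object-of and structjson->triples are hypothetical names. It walks the hierarchy (subject, then predicate, then value) and emits one [subject predicate object] vector per value.

```clojure
;; The structJSON record, written directly as Clojure data with string keys.
(def structjson
  {"subject" [{"uri"       "http://dataset1.com/record-a/"
               "predicate" [{"rdf:type" "http://umbel.org/umbel/rc/Person"}
                            {"iron:prefLabel" "Bob"}
                            {"foaf:knows" {"uri" "http://dataset2.com/record-b/"}}]}]})

(defn object-of
  "A map with a uri key is a reference to another resource; anything
  else is kept as-is."
  [v]
  (if (map? v) (get v "uri") v))

(defn structjson->triples
  "Walks subject -> predicate -> value and emits [subject predicate object]."
  [doc]
  (for [record   (get doc "subject")
        :let     [s (get record "uri")]
        pred-map (get record "predicate")
        [p v]    pred-map]
    [s p (object-of v)]))

(structjson->triples structjson)
;; => (["http://dataset1.com/record-a/" "rdf:type" "http://umbel.org/umbel/rc/Person"]
;;     ["http://dataset1.com/record-a/" "iron:prefLabel" "Bob"]
;;     ["http://dataset1.com/record-a/" "foaf:knows" "http://dataset2.com/record-b/"])
```

Note that the subject comes from one level of the hierarchy and the predicate and object from the levels below it: this nesting is what lets a serializer avoid repeating the subject for every triple.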

Serializing RDF using Maps and Keyword Keys

The most intuitive way to serialize RDF data as Clojure maps would be to create a map where all the keys are keywords. An initial test would be:

[cc lang='lisp' line_numbers='false']
[raw];; foaf/Person, owl/Thing and xsd/string are symbols: they must resolve
;; to existing vars (e.g. holding URI strings) for this form to evaluate
(def resource {:uri "http://foo.com/1"
               :rdf/type [foaf/Person owl/Thing]
               :iron/prefLabel {:value "Fred"
                                :lang nil
                                :datatype xsd/string}
               :foaf/knows [{:uri "http://foo.com/2"
                             :rei [{:iron/prefLabel [{:value "Bob"
                                                      :lang "en"}
                                                     {:value "Robert"
                                                      :lang "fr"}]}]}
                            {:uri "http://foo.com/3"
                             :rei [:iron/prefLabel "Mike"]}]})[/raw]
[/cc]

What we did here is define a map bound to the symbol resource. This map is composed of a series of keys and values where the keys are keywords, and where the values can be strings, vectors or maps. The basic serialization rules are:

- Each map has a :uri key that defines the URI of the resource being described
- Each key is a namespaced keyword where the namespace is the prefix of the ontology in which the <predicate> or type is defined
- If the predicate is an owl:DatatypeProperty, then its value can be:
  - A vector with one or multiple maps and/or strings
  - A map which can have four keys:
    - :value which specifies the actual string value
    - :lang which specifies the language of that string
    - :datatype which specifies the datatype of the string
    - :rei which specifies reification statements for the triple
  - A string which is the actual value, without any additional information about that literal
- If the predicate is an owl:ObjectProperty, then its value can be:
  - A vector with one or multiple maps, strings and/or symbols
  - A map which can have two keys:
    - :uri which specifies the actual URI of the referenced resource
    - :rei which specifies reification statements for the triple
  - A string which represents the URI of the resource to be referenced
  - A symbol which represents the URI string of the resource to be referenced
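These rules are regular enough to turn a resource into triples mechanically. The following is a minimal sketch under stated assumptions: resource->triples and value->objects are hypothetical helpers, the resource is quoted so that symbols such as foaf/Person stay unevaluated, reification (:rei) is ignored, and a plain string is emitted as-is because, without knowing whether the predicate is a datatype or an object property, it cannot be classified as a literal or a URI.

```clojure
(defn value->objects
  "Turns the value of one predicate into a sequence of objects."
  [v]
  (cond
    (vector? v)                       (mapcat value->objects v)
    (and (map? v) (contains? v :uri)) [{:uri (:uri v)}]
    (map? v)                          [(select-keys v [:value :lang :datatype])]
    (symbol? v)                       [{:uri (str v)}] ; kept as its prefixed name
    :else                             [v]))            ; plain string: literal or URI?

(defn resource->triples
  "Emits one [subject predicate object] vector per value of the resource."
  [resource]
  (let [s (:uri resource)]
    (for [[p v] (dissoc resource :uri)
          o     (value->objects v)]
      [s p o])))

;; Quoted, so foaf/Person, owl/Thing and xsd/string stay plain symbols.
(def fred
  '{:uri            "http://foo.com/1"
    :rdf/type       [foaf/Person owl/Thing]
    :iron/prefLabel {:value "Fred" :datatype xsd/string}
    :foaf/knows     [{:uri "http://foo.com/2"} "http://foo.com/3"]})

;; Five triples: two types, one label, two known resources.
(resource->triples fred)
```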

Namespacing Keywords

One important notion is that the keywords used as map keys are namespaced: each keyword carries a namespace qualifier in addition to its name. This is an essential requirement for an RDF serialization, since we re-use multiple ontologies that may share the same name for some of their predicates, and we don’t want these keywords to clash. That is why, by convention, we qualify each of these keywords with its ontology’s namespace. An ontology namespace is defined as the prefix used to refer to the ontology (for example, the Bibliographic Ontology‘s prefix is bibo, so :bibo/shortTitle would be the key referring to the property http://purl.org/ontology/bibo/shortTitle).
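A minimal sketch of how such a keyword could be expanded back into the property’s full URI. The prefixes table and keyword->uri are hypothetical; in practice the table would come from the ontologies in use. The base URIs are the ones used elsewhere in this post.

```clojure
;; Hypothetical prefix table mapping ontology prefixes to base URIs.
(def prefixes
  {"bibo" "http://purl.org/ontology/bibo/"
   "foaf" "http://xmlns.com/foaf/0.1/"
   "iron" "http://purl.org/ontology/iron#"
   "rdf"  "http://www.w3.org/1999/02/22-rdf-syntax-ns#"})

(defn keyword->uri
  "Expands a namespaced keyword such as :bibo/shortTitle into a full URI."
  [k]
  (if-let [base (get prefixes (namespace k))]
    (str base (name k))
    (throw (ex-info "Unknown ontology prefix" {:keyword k}))))

(keyword->uri :bibo/shortTitle)
;; => "http://purl.org/ontology/bibo/shortTitle"

;; Two (hypothetical) ontologies defining the same local name: the
;; namespace keeps the keys distinct, so they do not clash in a map.
(= :ont-a/label :ont-b/label) ;; => false
```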

Usage

Now let’s see how we can work with such a structure in Clojure:

[cc lang='lisp' line_numbers='false']
[raw](require '[clojure.data :refer [diff]])

;; Return the values of the rdf/type property
(:rdf/type resource)
(resource :rdf/type)
(get resource :rdf/type)

;; Return all the properties that describe the resource
(keys resource)

;; Get the URI of the first person known by Fred
(:uri (first (:foaf/knows resource)))

;; Get the French name of the first person known by Fred
(:value (second (:iron/prefLabel (first (:rei (first (:foaf/knows resource)))))))

;; Update the name of Fred to Frederick
(update-in resource [:iron/prefLabel :value] str "erick")

;; Output the difference between the original resource and the updated one
(diff resource (update-in resource [:iron/prefLabel :value] str "erick"))

;; Find the entry (key and value) of a key
(find resource :iron/prefLabel)

;; Select the values of multiple keys
(select-keys resource [:iron/prefLabel :foaf/knows])

;; Merge a resource into another resource. When both maps share a key
;; (here :uri and :rdf/type), the value of the latter resource is kept
(def res-1 {:uri "http://foo.com/datasets/test/1"
            :rdf/type owl/Thing
            :iron/prefLabel "Preferred Label"})

(def res-2 {:uri "http://foo.com/datasets/test/2"
            :rdf/type owl/Thing
            :iron/altLabel "Alternative Label"})

(merge res-1 res-2)[/raw]
[/cc]

That is all good and easy. We use Clojure’s core functions and mechanisms to easily manipulate RDF data in our application.

However, does this implement the intuitions I started with? Definitely not. This is more like a conventional serialization format for RDF, just like structJSON. If we want to do any kind of validation on this data, if we want the data to be self-aware of its own semantics, then it is not possible when keys are keywords. We would need external mechanisms to create that map structure, then to check what it refers to (the properties, the types, etc.). Then we would have to look them up in their respective ontologies, and finally we would have to validate the data structure according to what these ontologies say by re-processing that map structure.
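To make the point concrete, here is a minimal sketch of such an external mechanism. The ontology map is a hypothetical, hand-written stand-in for the real ontologies, and literal?, reference?, valid-value? and validate are hypothetical helpers. Note that validate has to re-process the resource map after the fact: the data itself knows nothing about its own semantics.

```clojure
;; Hypothetical stand-in for the external ontologies: property -> kind.
(def ontology
  {:iron/prefLabel :owl/DatatypeProperty
   :foaf/knows     :owl/ObjectProperty})

(defn literal? [v]
  (or (string? v) (and (map? v) (contains? v :value))))

(defn reference? [v]
  (or (string? v) (symbol? v) (and (map? v) (contains? v :uri))))

(defn valid-value?
  "Checks a value (or every element of a vector) against the property kind."
  [kind v]
  (let [ok? (if (= kind :owl/DatatypeProperty) literal? reference?)]
    (if (vector? v) (every? ok? v) (ok? v))))

(defn validate
  "Looks every key up in the external ontology table and returns the keys
  that are unknown or whose values do not match the property kind."
  [resource]
  (for [[k v] (dissoc resource :uri :rdf/type)
        :let  [kind (get ontology k)]
        :when (or (nil? kind) (not (valid-value? kind v)))]
    k))

(validate {:uri            "http://foo.com/1"
           :iron/prefLabel {:value "Fred"}
           :foaf/knows     [{:value "not a reference"}]})
;; => (:foaf/knows)
```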

This is not quite what I had in mind and what my intuition was telling me.

Serializing RDF using Maps and Symbol Keys

Let’s push this idea further. What if the keys of the map that represents our RDF data are not keywords, but symbols? Symbols in Clojure name things like vars, functions, etc. Initially, let’s use symbols that refer to the URI (string) of the <predicate> and the types.

The serialization would look like:

[cc lang='lisp' line_numbers='false']
[raw];; Every symbol below (including uri, value, lang, datatype and rei) must
;; resolve to a var; rdf/type, foaf/Person, etc. hold URI strings
(def resource {uri "http://foo.com/1"
               rdf/type [foaf/Person owl/Thing]
               iron/prefLabel {value "Fred"
                               datatype xsd/string}
               foaf/knows [{uri "http://foo.com/2"
                            rei [{iron/prefLabel [{value "Bob"
                                                   lang "en"}
                                                  {value "Robert"
                                                   lang "fr"}]}]}
                           {uri "http://foo.com/3"
                            rei [iron/prefLabel "Mike"]}]})[/raw]
[/cc]

Now our resource is defined with the same structure, except that the keys are actual symbols. In this second iteration, we will consider that the symbols we defined here represent a string, which is the URI of the predicates or the types.

The real advantage of using symbols over keywords for these RDF serializations is that a symbol names a var, which means that it can:

- Have a docstring
- Have metadata
- Be evaluated to get the actual full URI of the predicate or type

These are obvious enhancements over using keywords. First, being able to define docstrings means that we can document these properties and types, so that Clojure IDEs can display the documentation of these symbols while you are writing or editing RDF data in Clojure.
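A minimal sketch of what such documented symbols could look like. Normally each vocabulary would live in its own namespace file; here create-ns and intern build the rdf and foaf namespaces from a single script (an assumption made to keep the example self-contained), and alter-meta! attaches the :doc metadata that tools such as clojure.repl/doc read. The docstrings are the rdfs:comment texts of rdf:type and foaf:knows.

```clojure
;; Build two vocabulary namespaces from a single file.
(create-ns 'rdf)
(create-ns 'foaf)

;; intern returns the var; alter-meta! attaches the docstring to it.
(alter-meta! (intern 'rdf 'type "http://www.w3.org/1999/02/22-rdf-syntax-ns#type")
             assoc :doc "The subject is an instance of a class.")

(alter-meta! (intern 'foaf 'knows "http://xmlns.com/foaf/0.1/knows")
             assoc :doc "A person known by this person.")

;; The documentation an IDE would display for foaf/knows
(:doc (meta #'foaf/knows))
;; => "A person known by this person."

;; Evaluating the symbol yields the full URI of the property
foaf/knows
;; => "http://xmlns.com/foaf/0.1/knows"
```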

Clojure’s metadata system will be heavily leveraged in the final candidate serialization format, which I will cover in another blog post, so I won’t discuss it further for the moment.

Finally, once such a map is evaluated, we get the map with all the properties/types replaced by their full URIs. The evaluated structure (what resource holds once the def form above has been evaluated) looks like:

[cc lang='lisp' line_numbers='false']
[raw]{uri "http://foo.com/1",
 "http://www.w3.org/1999/02/22-rdf-syntax-ns#type" ["http://xmlns.com/foaf/0.1/Person" "http://www.w3.org/2002/07/owl#Thing"],
 "http://purl.org/ontology/iron#prefLabel" {value "Fred", datatype "http://www.w3.org/2001/XMLSchema#string"},
 "http://xmlns.com/foaf/0.1/knows" [{uri "http://foo.com/2",
                                     rei [{"http://purl.org/ontology/iron#prefLabel" [{value "Bob", lang "en"}
                                                                                      {value "Robert", lang "fr"}]}]}
                                    {uri "http://foo.com/3",
                                     rei ["http://purl.org/ontology/iron#prefLabel" "Mike"]}]}[/raw]
[/cc]

As you can see, we can get the full description of this resource with the full expansion of the URIs referenced by the symbols.
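The evaluation step can be reproduced with a reduced version of the resource. In this minimal sketch the vocabulary vars are created with create-ns and intern (assumed stand-ins for real vocabulary namespaces), and the structure is kept quoted, as plain data, until eval turns every symbol into the URI string it names.

```clojure
;; Assumed vocabulary vars: [namespace local-name full-URI].
(doseq [[ns-sym local uri]
        [['rdf  'type      "http://www.w3.org/1999/02/22-rdf-syntax-ns#type"]
         ['foaf 'Person    "http://xmlns.com/foaf/0.1/Person"]
         ['owl  'Thing     "http://www.w3.org/2002/07/owl#Thing"]
         ['iron 'prefLabel "http://purl.org/ontology/iron#prefLabel"]]]
  (create-ns ns-sym)
  (intern ns-sym local uri))

;; The structure is kept as data (quoted) until we decide to evaluate it.
(def resource-form
  '{rdf/type       [foaf/Person owl/Thing]
    iron/prefLabel "Fred"})

(eval resource-form)
;; => {"http://www.w3.org/1999/02/22-rdf-syntax-ns#type"
;;     ["http://xmlns.com/foaf/0.1/Person" "http://www.w3.org/2002/07/owl#Thing"],
;;     "http://purl.org/ontology/iron#prefLabel" "Fred"}
```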

The same parsing rules defined in the previous section apply to this new format that uses symbols instead of keywords. The same comments regarding namespaces apply here too.

The usage is nearly identical, except that the key cannot be used in function position the way a keyword can. A symbol such as rdf/type is evaluated to its URI string before being called, and a string is not a function, so the following does not work:

[cc lang='lisp' line_numbers='false']
[raw];; Throws a ClassCastException: rdf/type evaluates to a String
(rdf/type resource)[/raw]
[/cc]

Instead, you have to access the value using one of the following two methods:

[cc lang='lisp' line_numbers='false']
[raw](resource rdf/type)
(get resource rdf/type)[/raw]
[/cc]
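The difference can be checked at the REPL with a one-key resource. In this minimal sketch the rdf namespace and its type var are created with create-ns and intern, an assumption made to keep the example self-contained.

```clojure
(create-ns 'rdf)
(intern 'rdf 'type "http://www.w3.org/1999/02/22-rdf-syntax-ns#type")

;; The key written as rdf/type is stored as the URI string it evaluates to.
(def resource {rdf/type ["http://xmlns.com/foaf/0.1/Person"]})

;; rdf/type evaluates to a String, and calling a String throws
;; (a ClassCastException on the JVM).
(try
  (rdf/type resource)
  (catch Exception _ :not-callable))
;; => :not-callable

;; Looking the key up works: rdf/type evaluates to the very string
;; that was used as the key.
(get resource rdf/type)
;; => ["http://xmlns.com/foaf/0.1/Person"]

;; A quoted symbol is callable like a keyword, but the evaluated map
;; does not contain the symbol itself, only its URI string.
('rdf/type resource)
;; => nil
```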

Even though we improved upon using keywords as map keys, we still don’t have any kind of embedded semantics or self-validation capabilities, as my intuition was suggesting. It remains the same kind of structure, without any significant improvement.

Serializing RDF using Maps and Symbol Keys Referring to Functions

Let’s change our minds and evolve this idea of symbols: what if the symbols we define in the map actually refer to functions instead of strings?

What!?!?

A function could be the key of a map in Clojure?

Well, not directly, but yes. In Clojure, symbols name different things, such as functions. This is quite an important feature of Clojure: it distinguishes between how things are named and the things themselves.

This means that what we write as keys in our map structure are symbols. However, these symbols happen to refer to functions. So in the source code, it is not the function itself that we write as a key, but the symbol that refers to it.

However, the result is the same: once the map is evaluated, each symbol is replaced by the function it refers to, so the keys of the evaluated map are functions. That is exactly what we were looking for: that little gem, hanging around, just waiting to be picked up.
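A minimal sketch of that little gem, where knows is a hypothetical predicate function. In the source, the key is the symbol knows; once the map literal is evaluated, the key is the function object itself, so the structure can be “run” by calling each key on its value.

```clojure
;; Hypothetical predicate function named by the symbol knows.
(defn knows
  "Sketch of a predicate as a function: receives the object of the triple
  and returns a description of the triple it represents."
  [object]
  [:foaf/knows object])

;; The source uses the symbol knows as the key; when the map literal is
;; evaluated, the symbol is replaced by the function it names.
(def resource {knows "http://foo.com/2"})

(fn? (key (first resource)))
;; => true

;; Since the keys are functions, the structure can be "run":
;; call every key on its value.
(for [[f v] resource] (f v))
;; => ([:foaf/knows "http://foo.com/2"])
```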

This opens an overwhelming number of possibilities. It means that we have a data structure that can be evaluated into a series of functions that can be executed. That is exactly what should enable us to define that Portable [RDF] Data serialization format.

It means that we won’t only be able to define RDF triples as Clojure code, but that we could even execute that Clojure code to do different things with the data, such as having it validate itself.

Finally, what if we consider RDF predicates as Clojure functions? Predicates have all kinds of properties and semantics. They can be specified to describe only certain kinds of resources, or to refer to specific types of values. Predicates can be symmetric, functional, transitive, etc. What if we simply implemented these characteristics as Clojure functions? This is what this whole thing is meant to be. When evaluating and “running” that RDF map structure, we would simply execute the functions that define the semantics and characteristics of these predicates. That is exactly where my intuitions lie: we would end up with an RDF serialization format that “embeds” its own semantics and that can validate itself by executing the structure. That is what I would refer to as Portable Data: stateful data with embedded stateful semantics.
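A minimal sketch of that idea, under loudly stated assumptions: functional, range-of, predicate, pref-label, knows and self-validate are all hypothetical names, the checks only approximate owl:FunctionalProperty and rdfs:range, and each predicate function returns a vector of error messages (empty when the value is valid). Running the structure means calling every function key on its value.

```clojure
;; Hypothetical building blocks: each characteristic is a function from
;; a value to a (possibly empty) vector of error messages.
(defn functional
  "At most one value, in the spirit of owl:FunctionalProperty."
  [v]
  (if (and (vector? v) (> (count v) 1))
    ["functional property with several values"]
    []))

(defn range-of
  "Every value must satisfy pred, a rough stand-in for rdfs:range."
  [pred label]
  (fn [v]
    (if (every? pred (if (vector? v) v [v]))
      []
      [(str "value outside range " label)])))

(defn predicate
  "Combines characteristics into a single predicate function."
  [& checks]
  (fn [v] (vec (mapcat #(% v) checks))))

;; Hypothetical predicates described by their characteristics.
(def pref-label (predicate functional (range-of string? "xsd:string")))
(def knows      (predicate (range-of #(and (map? %) (contains? % :uri)) "foaf:Person")))

(defn self-validate
  "Runs the structure: calls every function key on its value and
  collects the error messages."
  [resource]
  (vec (mapcat (fn [[p v]] (when (fn? p) (p v))) resource)))

;; The symbols pref-label and knows evaluate to the predicate functions.
(def fred {:uri       "http://foo.com/1"
           pref-label ["Fred" "Frederick"]
           knows      [{:uri "http://foo.com/2"} "http://foo.com/3"]})

(self-validate fred)
;; => ["functional property with several values" "value outside range foaf:Person"]
```

The design choice here is that a predicate is just the composition of its characteristics, so adding a new characteristic (symmetric, transitive, etc.) would mean writing one more small function rather than changing the serialization.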

The initial version of this new revision of the RDF serialization as Clojure code will be outlined in the next blog post, since its discussion warrants a full blog post of its own. However, I think that you can start to understand where I am heading with these intuitions and why I am using Clojure to test them.

Once an initial version of this serialization has been outlined, we will see how it can be used, what its benefits are, how the idea of Portable Data could be leveraged, and how it can help create and manage data using traditional IDEs such as Emacs. Once the basis has been outlined, we will have all the leisure to explore the benefits of this concept.